Multilingual captioning sits at the front line of global broadcasting. Viewers no longer accept a single-language feed, and regulators insist on inclusive access. Broadcasters who respond first win larger audiences, stronger ad inventory and deeper engagement. This guide breaks down the what, why and how of multilingual captioning in clear, practical steps. Expect straightforward advice, proven tools and the lessons Digital Nirvana has learned while powering captions for leading networks.

Understanding Multilingual Captioning in Broadcasting

Captioning converts speech and sound cues into on-screen text. Multilingual captioning repeats that process in every language the audience speaks, making one program work in many markets. Effective caption workflows account for timecode accuracy, linguistic nuance and technical standards before a signal leaves master control. Because every missed frame erodes trust, broadcasters treat caption streams as seriously as video and audio. The following sections explain why that attention pays off.

The Role of Multilingual Captioning in Global Broadcasting

Global syndication once depended on separate edits or dubbed tracks, both slow and expensive. Multilingual captions now let a single master feed satisfy regional outlets without fresh postproduction. That flexibility drives advertising packages that span continents yet feel local. Sports networks use it to sell simultaneous rights across language territories. Streaming giants rely on captions to comply with accessibility laws from California to Seoul. Audience data proves the payoff: shows with multilingual captions post higher completion rates and lower churn.

Distinguishing Between Subtitles and Closed Captions

Subtitles translate spoken dialogue only, while closed captions include speaker IDs, sound effects and music cues, providing critical context for hard-of-hearing viewers. Regulations in the United States, Canada and the European Union require closed captions for most broadcast content. Streaming platforms mirror those rules to avoid regional takedowns. Understanding the distinction ensures you choose the right workflow, file format and quality checks for each market.

Our Services at Digital Nirvana

Digital Nirvana blends AI speed with editorial judgment to deliver captions that stand up in court and shine on screen. Our Trance captioning platform drafts transcripts in seconds, then guides editors through color-coded corrections, speaker tags and placement tweaks. Real-time outputs feed SDI, NDI or HLS streams with no extra hardware. Built-in checks flag FCC style errors, CEA field mis-maps and missing sound effect tags before files leave the cloud. Metadata sync to MetadataIQ media indexing makes every caption searchable the moment it lands in Avid.

Benefits of Implementing Multilingual Captioning

A well-planned caption strategy delivers audience growth, compliance relief and measurable search benefits. Each upside reinforces the others and compounds over time.

Enhancing Accessibility for Diverse Audiences

Captions let viewers with hearing loss follow programs in real time, meeting legal mandates such as the FCC Closed Captioning Standard. They also assist people who watch in noisy environments or who prefer to watch on mute. Multilingual caption support extends this accessibility across language barriers, turning a regional program into inclusive content for a global community. Advertisers reward channels that demonstrate inclusive practice, so captions carry revenue weight as well as social value.

Expanding Global Viewership and Market Reach

Every new language track can unlock millions of potential viewers without reshooting a frame. Major sports leagues saw double-digit international growth once they captioned highlight reels in Spanish and Mandarin. Regional broadcasters gain export revenue by shipping captioned programs to diaspora channels abroad. Because captions cost far less than dubbing, the return on investment arrives quickly.

Improving SEO and Content Discoverability

Captions make video content searchable. Because search engines index caption text, your videos can rank for relevant keywords in any supported language. A single captioned clip can appear in both English and French search results, even without added metadata. Multilingual captions also feed automatic preview snippets on social platforms. That discoverability drives organic traffic and helps lower customer acquisition costs for streaming services.

Best Practices for Multilingual Captioning

Adhering to proven methods saves rework and protects brand credibility. Digital Nirvana applies these guidelines on every project.

Utilizing Professional Translators for Accuracy

Machine translation speeds workflows, but expert linguists still ensure tone, idiom and cultural references land properly. We pair AI translation with human review inside the Trance captioning platform to balance speed and accuracy. Translators edit within the same interface that syncs timecodes, so changes never break alignment. The result reads naturally and respects regional norms.

Ensuring Cultural Relevance and Sensitivity

Literal translations can confuse or even alienate local audiences. A phrase that works in New York may fail in Mumbai. Cultural reviewers flag jokes, gestures and political references that need adaptation. Based on their guidance, teams adjust subtitles, captions or on-screen graphics to convey the intended message without friction.

Maintaining Synchronization Between Audio and Captions

Captions must hit within two frames of spoken words to keep viewers immersed. Our workflow locks caption streams to the program’s SMPTE timecode, then runs automated drift checks. Any deviation triggers a resync before playout. That precision matters even more during live events where captions feed second-screen apps.
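As a rough illustration of an automated drift check, the sketch below converts non-drop-frame SMPTE timecodes to frame counts and flags any caption that starts more than two frames away from the matching spoken word. The function names, the 25 fps rate and the sample timecodes are assumptions made for this example; this is not the Trance or MonitorIQ implementation.

```python
from typing import List, Tuple

FPS = 25  # assumed non-drop-frame rate; change to match the deliverable


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert a non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def find_drift(
    pairs: List[Tuple[str, str]], tolerance: int = 2, fps: int = FPS
) -> List[Tuple[int, int]]:
    """Return (cue index, drift in frames) for cues outside the tolerance.

    Each pair is (caption start, speech start). Positive drift means the
    caption appears late; negative drift means it appears early.
    """
    flagged = []
    for index, (caption_tc, speech_tc) in enumerate(pairs):
        drift = timecode_to_frames(caption_tc, fps) - timecode_to_frames(speech_tc, fps)
        if abs(drift) > tolerance:
            flagged.append((index, drift))
    return flagged


# Hypothetical sample data: (caption start, speech start)
pairs = [
    ("01:00:00:00", "01:00:00:00"),  # in sync
    ("01:00:04:12", "01:00:04:10"),  # 2 frames late, inside tolerance
    ("01:00:09:05", "01:00:09:00"),  # 5 frames late, flagged
]
print(find_drift(pairs))  # [(2, 5)]
```

Drop-frame timecode (29.97 fps) needs a different conversion, so a production check would branch on the frame-rate flag rather than reuse this arithmetic.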

Implementing Quality Control Measures

Automated spell check, profanity filters and timing rule engines catch routine errors. Human QC operators perform random spot checks and full reviews for high-profile content. Final approval includes legibility tests on phones, tablets, smart TVs and set-top boxes.
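A timing rule engine can be as simple as a list of checks run over every cue. The sketch below is one possible shape for it, assuming cues are held as start and end times in seconds; the `Cue` class, the one-second minimum and the seven-second maximum are illustrative values, not thresholds taken from any regulation or from Digital Nirvana's tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cue:
    start: float  # seconds from program start
    end: float    # seconds from program start
    text: str


def check_timing(
    cues: List[Cue], min_duration: float = 1.0, max_duration: float = 7.0
) -> List[str]:
    """Return human-readable issues for cues that break basic timing rules."""
    issues = []
    for i, cue in enumerate(cues):
        duration = cue.end - cue.start
        if duration < min_duration:
            issues.append(f"cue {i}: too short ({duration:.2f}s)")
        elif duration > max_duration:
            issues.append(f"cue {i}: too long ({duration:.2f}s)")
        # A cue that starts before the previous one ends will collide on screen.
        if i > 0 and cue.start < cues[i - 1].end:
            issues.append(f"cue {i}: overlaps previous cue")
    return issues


# Hypothetical cues: the second is too short and overlaps, the third lingers.
cues = [
    Cue(0.0, 2.0, "Good evening."),
    Cue(1.5, 1.9, "Welcome."),
    Cue(3.0, 12.0, "Tonight's top story."),
]
for issue in check_timing(cues):
    print(issue)
```

Anything the rules flag goes to a human QC operator; the script only narrows down where to look.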

Technical Considerations in Multilingual Captioning

Engineering teams must choose formats that travel across every device and region.

Choosing the Right Captioning Formats and Standards

SRT and WebVTT dominate OTT delivery, while broadcasters often rely on CEA-608/708 or DVB-Sub. When one show airs on both, a master caption file is converted to meet each platform’s specifications. The MonitorIQ compliance system captures airchecks so teams can confirm that captions arrive intact on the viewer’s screen.
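For the two text-based OTT formats, conversion is mostly a matter of headers and timestamp punctuation. The sketch below turns plain SRT text into WebVTT by adding the `WEBVTT` header and swapping the comma in each timing line for a dot. The function name and sample cues are made up for illustration, and the approach assumes simple SRT without styling tags; CEA-608/708 and DVB-Sub are binary or bitmap formats that need dedicated tooling.

```python
import re

# Matches an SRT timing line such as "00:00:01,000 --> 00:00:03,500"
TIMING_LINE = re.compile(
    r"^(\d{2}:\d{2}:\d{2}),(\d{3}) --> (\d{2}:\d{2}:\d{2}),(\d{3})(.*)$"
)


def srt_to_webvtt(srt_text: str) -> str:
    """Convert simple SRT text to WebVTT.

    SRT cue numbers are kept; WebVTT accepts them as optional cue identifiers.
    """
    cleaned = srt_text.lstrip("\ufeff").replace("\r\n", "\n").strip()
    lines = cleaned.split("\n")
    converted = [TIMING_LINE.sub(r"\1.\2 --> \3.\4\5", line) for line in lines]
    return "WEBVTT\n\n" + "\n".join(converted) + "\n"


# Hypothetical two-cue Spanish caption file
srt = (
    "1\n"
    "00:00:01,000 --> 00:00:03,500\n"
    "Bienvenidos al partido.\n"
    "\n"
    "2\n"
    "00:00:04,000 --> 00:00:06,000\n"
    "[APLAUSOS]\n"
)
print(srt_to_webvtt(srt))
```

Keeping one master file and generating each delivery format from it means a late text correction only has to be made once.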

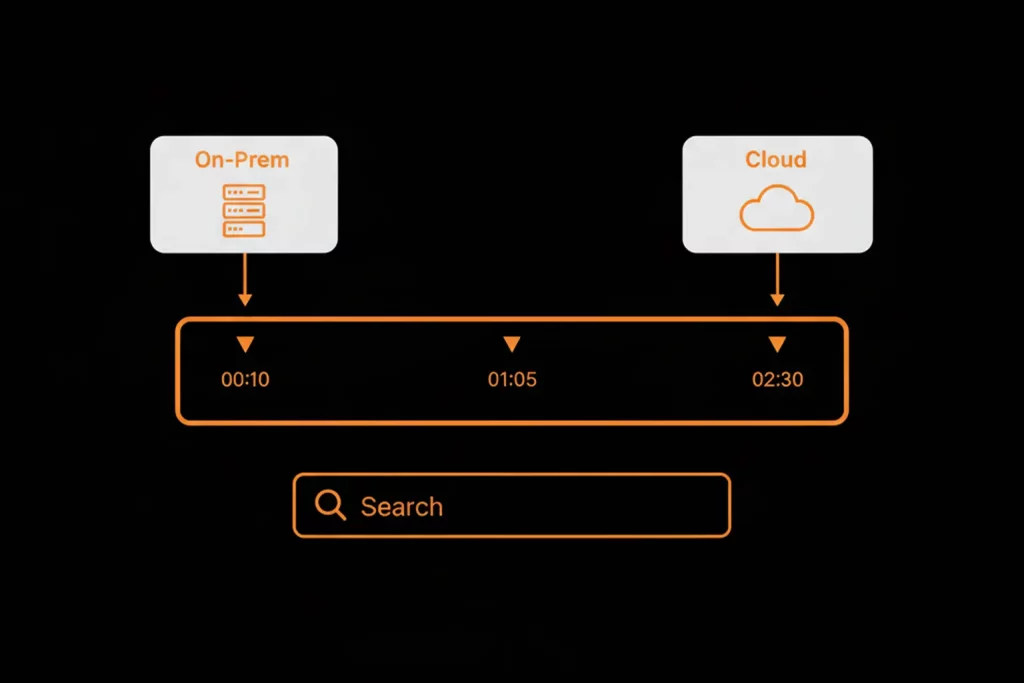

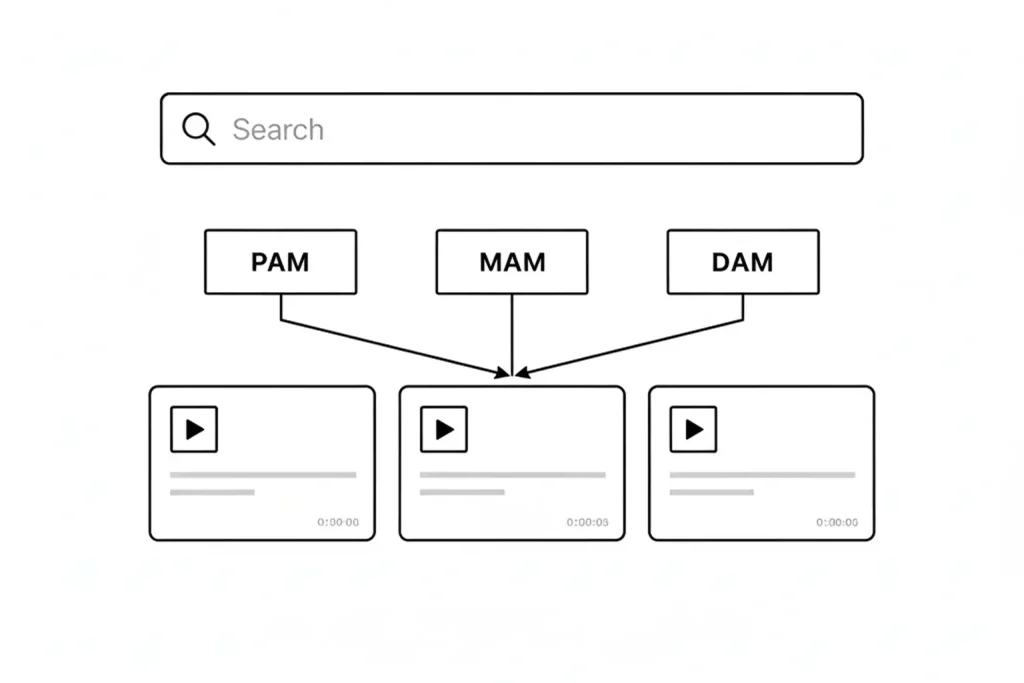

Integrating Captioning with Broadcasting Workflows

Ingest systems should detect new captions automatically and attach them to the asset in the MAM. During live events, caption encoders insert data into the SDI or IP stream before it reaches the encoder farm. Automation keeps latency low and removes manual patching errors.
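One way to picture automatic detection is a job that scans incoming file names and groups caption sidecars by asset and language. The sketch below assumes a hypothetical naming convention of `<asset_id>.<lang>.<ext>`; the function name and file names are invented for the example, and the call that actually attaches captions to an asset depends on the MAM's own API, which is not shown.

```python
from collections import defaultdict
from pathlib import PurePath
from typing import Dict, Iterable

CAPTION_EXTENSIONS = {".srt", ".vtt", ".scc"}


def group_captions_by_asset(filenames: Iterable[str]) -> Dict[str, Dict[str, str]]:
    """Map asset ID -> {language code: file name} for caption sidecar files.

    Assumes the hypothetical convention <asset_id>.<lang>.<ext>; files that
    do not fit it (video essences, notes, untagged captions) are skipped.
    """
    grouped: Dict[str, Dict[str, str]] = defaultdict(dict)
    for name in filenames:
        path = PurePath(name)
        suffixes = path.suffixes
        if len(suffixes) < 2 or suffixes[-1].lower() not in CAPTION_EXTENSIONS:
            continue
        language = suffixes[-2].lstrip(".").lower()
        # Strip ".<lang>.<ext>" from the end of the name to recover the asset ID.
        asset_id = path.name[: -len("".join(suffixes[-2:]))]
        grouped[asset_id][language] = name
    return dict(grouped)


# Hypothetical contents of an ingest folder
incoming = [
    "match_0412.mxf",
    "match_0412.es.vtt",
    "match_0412.pt.vtt",
    "notes.final.txt",
]
print(group_captions_by_asset(incoming))
```

An ingest job could run this on every folder change and hand each asset's language map to the MAM in one call, which removes the manual matching step.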

Leveraging AI and Machine Learning for Efficiency

Speech-to-text engines draft initial transcripts within minutes. Natural language models then predict punctuation and speaker changes. Editors finish the file in browser-based tools instead of crowded control rooms. This hybrid workflow cuts turnaround time by up to 60 percent and significantly lowers operational costs.

Challenges and Solutions in Multilingual Captioning

Addressing Dialectical Variations and Regional Differences

Spanish news in Mexico sounds noticeably different from Spanish coverage in Spain. Dialect databases help engines predict local vocabulary, but editors still vet terms. Digital Nirvana maintains region-specific glossaries inside Trance, keeping brand tone consistent across Latin America, Europe and the United States.
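A region-specific glossary can be thought of as a per-locale lookup applied to draft captions before an editor reviews them. The sketch below is a minimal illustration under that assumption; the `GLOSSARIES` table, the locale codes and the two sample word pairs are examples chosen for this article, not the contents or format of a Trance glossary.

```python
import re
from typing import Dict

# Illustrative entries only: preferred term per locale for the same concept.
GLOSSARIES: Dict[str, Dict[str, str]] = {
    "es-MX": {"móvil": "celular", "zumo": "jugo"},
    "es-ES": {"celular": "móvil", "jugo": "zumo"},
}


def apply_glossary(text: str, locale: str) -> str:
    """Replace whole-word glossary terms with the locale's preferred form.

    Matching is case-sensitive and lowercase only, to keep the sketch short.
    """
    glossary = GLOSSARIES.get(locale, {})
    if not glossary:
        return text
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, glossary)) + r")\b")
    return pattern.sub(lambda match: glossary[match.group(1)], text)


draft = "Compró un móvil y un zumo."
print(apply_glossary(draft, "es-MX"))  # Compró un celular y un jugo.
```

Substitution like this only handles vocabulary. Grammatical agreement and tone still need the editor's pass described above.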

Managing Real-Time Captioning for Live Broadcasts

Live captioners must type, voice write or edit ASR output with sub-second delays. We route ASR output through cloud editors who insert speaker IDs and fix homophones on the fly. Ultra-low-latency streaming protocols then deliver captions to OTT players with minimal lag.

Ensuring Compliance with International Regulations

The FCC mandates caption accuracy, placement and completeness. Canada’s CRTC and the EU’s AVMSD impose similar rules. Teams use official FCC guidelines as a baseline, then layer local requirements on top. Compliance checks run automatically in MonitorIQ, flagging any missing or garbled caption data.

Tools and Technologies Supporting Multilingual Captioning

Picking the right platform eases adoption and reduces ongoing cost.

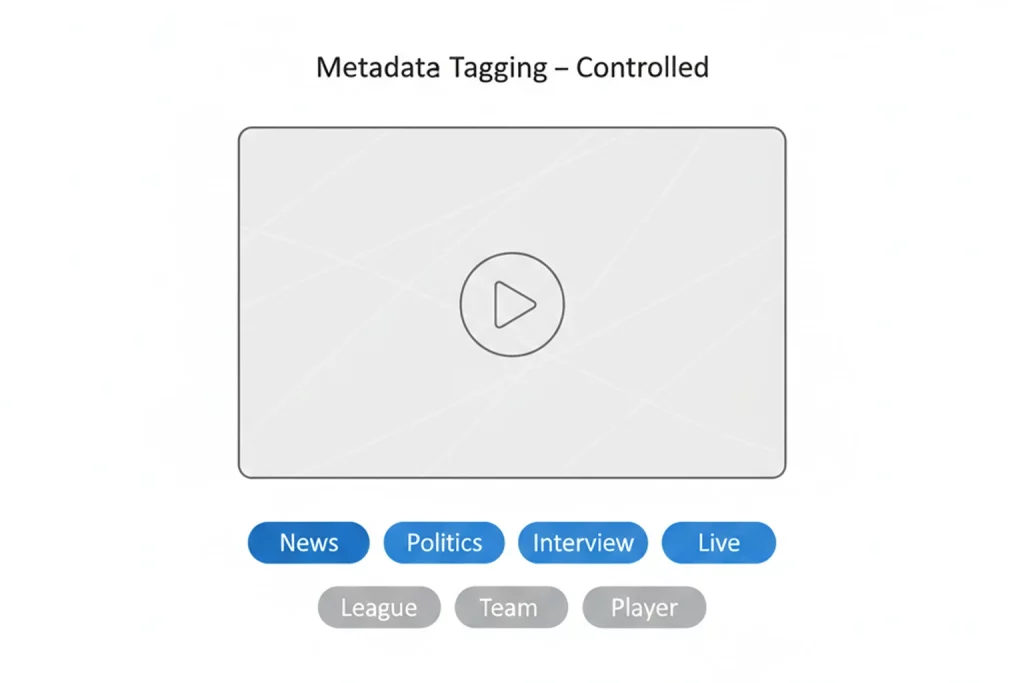

Overview of Leading Captioning Software and Platforms

Cloud platforms now handle ingest, ASR, human review and distribution inside one interface. Trance, OOONA, and SubtitleNEXT lead the market for enterprise workflows. Each offers API hooks for MAM integration, batch transcode and analytics dashboards.

Features to Look for in Captioning Tools

Essential features include speaker diarization, glossaries, frame-accurate editing and multi-format export. Advanced tools add AI quality scoring, redaction and multi-language spell check. Security features such as watermarking protect pre-release content.

Integrating Captioning Solutions with Existing Systems

APIs and watch-folder integrations push finished captions to Adobe Premiere, Avid or cloud encoders. A well-documented SDK shortens deployment time and reduces vendor lock-in. Digital Nirvana’s engineering team provides template scripts so customers can automate routine hand-offs.

How Digital Nirvana Elevates Multilingual Captioning

Our Trance platform combines ASR, machine translation and human expertise in a single browser workspace. Customers ingest raw media, choose target languages and assign reviewers. The system applies glossary rules, generates preliminary captions and alerts editors to timing conflicts. A built-in link to our guide, Subtitles vs. Closed Captioning: What’s the Difference?, supports best practice, while automated exports feed playout without manual intervention. Broadcasters report up to 40 percent faster delivery and fewer frame errors.

Case Studies: Successful Implementation of Multilingual Captioning

Broadcasters Expanding Reach Through Multilingual Captions

A Latin American sports channel added Portuguese and English captions to its marquee soccer league. Viewership in non-Spanish households rose 22 percent in a single season. Sponsors paid premium CPM rates because campaigns reached fans across language lines in real time.

Lessons Learned from Multilingual Captioning Initiatives

Successful teams start small, measure impact, then scale languages based on clear ROI. They document caption style guides early and secure executive backing to enforce them. Automation handles routine tasks, freeing linguists to focus on creative problem-solving.

Future Trends in Multilingual Captioning

The Impact of Emerging Technologies on Captioning

Edge computing shortens ASR turnaround by processing audio closer to the source camera. Neural machine translation continues to improve low-resource languages, bringing niches like Icelandic or Zulu into mainstream programming. Blockchain watermarks may soon certify caption authenticity, protecting distributors from tampering claims.

Predictions for the Evolution of Multilingual Broadcasting

By 2030 viewers will expect every live stream to carry captions in at least three languages. AI quality scoring will flag errors before viewers notice them. Audio description and sign-language overlays will merge with caption platforms to create fully inclusive feeds.

Conclusion

Multilingual captioning unlocks larger audiences, satisfies accessibility law and boosts search visibility. Broadcasters that invest in accurate, culturally aware captions build loyalty and win new markets without reshooting content. Start by auditing current workflows, identifying languages with the highest growth potential and choosing a flexible platform. Train teams on style guides and automate ingest to keep turnaround low. Digital Nirvana stands ready to help broadcasters reach every viewer, everywhere, with captions that match the quality of their storytelling.

Digital Nirvana: Empowering Knowledge Through Technology

Digital Nirvana stands at the forefront of the digital age, offering cutting-edge knowledge management solutions and business process automation.

Key Highlights of Digital Nirvana:

- Knowledge Management Solutions: Tailored to enhance organizational efficiency and insight discovery.

- Business Process Automation: Streamline operations with our sophisticated automation tools.

- AI-Based Workflows: Leverage the power of AI to optimize content creation and data analysis.

- Machine Learning & NLP: Our algorithms improve workflows and processes through continuous learning.

- Global Reliability: Trusted worldwide for improving scale, ensuring compliance, and reducing costs.

Book a free demo to scale up your content moderation, metadata, and indexing strategy, and get a firsthand experience of Digital Nirvana’s services.

FAQs

What file format works best for streaming captions?

WebVTT dominates streaming because browsers and smart TVs support it natively. The format handles multilingual cues and styling while staying lightweight.

How many words per minute should captions display?

Aim for 160 to 180 words per minute. That rate lets viewers read comfortably without falling behind live dialog.
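The reading rate of a single caption is simple arithmetic: word count times 60, divided by the seconds the caption stays on screen. The sketch below applies that formula and flags cues above the 180 words-per-minute ceiling mentioned above; the function names and sample text are illustrative assumptions.

```python
def words_per_minute(text: str, start: float, end: float) -> float:
    """Reading rate of one cue, given start and end times in seconds."""
    duration = end - start
    if duration <= 0:
        raise ValueError("cue must have a positive duration")
    return len(text.split()) * 60.0 / duration


def is_too_fast(text: str, start: float, end: float, limit: float = 180.0) -> bool:
    """True when the cue exceeds the target reading rate."""
    return words_per_minute(text, start, end) > limit


# Nine words shown for three seconds: 9 * 60 / 3 = 180 words per minute.
sample = "The home side scores again in the final minute"
print(words_per_minute(sample, 10.0, 13.0))  # 180.0
print(is_too_fast(sample, 10.0, 12.0))       # True (270 words per minute)
```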

Can automatic speech recognition replace human captioners?

ASR cuts drafting time, but human editors still correct names, idioms and timing. A hybrid workflow delivers speed and accuracy.

How do captions affect viewer engagement on social media?

Captions boost completion rates for muted autoplay videos and let algorithms surface clips in additional languages, driving higher shares.

Where can I learn more about multilingual captioning best practices?

Visit Digital Nirvana’s resource hub and explore posts like Multilingual Transcription Tools: Empowering Global Broadcasting for deeper technical guidance.