Media tagger tools now sit at the heart of every fast‑moving production house. Editors who once rummaged through bins for the right clip now surface it with a two‑word search. A single hour of high‑definition video holds roughly 100,000 individually searchable frames, and teams that apply frame‑accurate tags reclaim up to three workdays per week. Digital media search platforms report that 70 percent of content goes unused when it lacks descriptive metadata. Media groups that deploy AI tagging see a 50 percent lift in library reuse and shave delivery timelines from days to hours. Keep reading to learn why a purpose‑built tag editor delivers those gains and how MetadataIQ from Digital Nirvana leads the pack.

What Is a Media Tagger and Why It’s Critical Today

Media taggers assign searchable metadata to every audio and video asset in your archive. A modern tag editor sits inside your production tools and pumps time‑coded keywords, categories, and scene details directly into footage as you ingest it. That structure turns loose clips into a library you can query with plain text. Automated tagging keeps pace with live feeds so producers never run blind. Climbing file counts, tighter release windows, and new compliance mandates make a reliable tagger as essential as a camera.

Definition and role in digital content workflows

A media tagger is a software layer that listens, watches, and labels content during ingest. It captures speaker names, locations, topics, and visual cues, then embeds these tags into your production asset management system. Producers search any tag to locate the exact clip, quote, or frame they need. This shift from folder browsing to tag searching compresses research time and cuts repeated downloads. Faster rough‑cut assembly and fewer licensing surprises follow.
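To make the tag‑searching idea concrete, here is a minimal sketch of a time‑coded tag record and an inverted keyword index over it. The `Tag` fields and the `TagIndex` class are hypothetical illustrations, not MetadataIQ's actual schema or search engine; a production system would persist the index and rank results.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    """One time-coded label attached to an asset (hypothetical schema)."""
    asset_id: str
    start_frame: int
    end_frame: int
    kind: str    # e.g. "speaker", "location", "topic", "object"
    value: str   # the label itself, e.g. "City Hall"


class TagIndex:
    """Inverted index: lower-cased word -> tags whose value contains that word."""

    def __init__(self) -> None:
        self._by_word = defaultdict(list)

    def add(self, tag: Tag) -> None:
        for word in set(tag.value.lower().split()):
            self._by_word[word].append(tag)

    def search(self, query: str) -> list[Tag]:
        """Return tags matching every query word, ordered by asset and start frame."""
        words = query.lower().split()
        if not words:
            return []
        # .get avoids creating empty entries in the defaultdict for unknown words.
        matches = set(self._by_word.get(words[0], []))
        for word in words[1:]:
            matches &= set(self._by_word.get(word, []))
        return sorted(matches, key=lambda t: (t.asset_id, t.start_frame))
```

For example, after adding a tag labeled "City Hall", `index.search("city hall")` returns that tag, while a query containing a word the label lacks returns an empty list.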

How tag editors enhance media team efficiency

When tags arrive automatically, assistant editors spend less time logging shots and more time curating story lines. Searchable tags mean fewer duplicate requests to the archive desk. Teams dispatch clip pulls in minutes instead of hours, freeing senior editors to focus on craft. Budgets stretch because every tagged asset becomes easier to repurpose across platforms.

Replacing manual processes with intelligent tagging

Manual logging once meant typing timecode notes while playback crawled. Intelligent tagging removes that drudgery by reading audio with speech‑to‑text and scanning video with computer vision. The system flags profanity, talent appearances, or brand placements without human prompts. By automating these checkpoints, you standardize quality and pass compliance checks the first time. The result is a leaner, audit‑ready pipeline.
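The flagging step can be sketched, in a heavily simplified form, as a scan of word‑level speech‑to‑text output against watchlists that emits time‑stamped hits. The watchlist terms, the `(word, start_seconds)` input shape, and exact word matching are assumptions for illustration; real systems lean on speech‑to‑text and computer vision models, which catch far more than a fixed word list can.

```python
import re
from typing import NamedTuple


class Flag(NamedTuple):
    category: str
    term: str
    seconds: float


# Hypothetical watchlists; a real deployment would load these from policy config.
WATCHLISTS = {
    "profanity": {"darn", "heck"},
    "brand": {"acmecola"},
}


def flag_words(words: list[tuple[str, float]]) -> list[Flag]:
    """Return a Flag for every transcript word that appears on a watchlist."""
    flags = []
    for raw, start in words:
        # Lower-case and strip punctuation so "Heck!" matches "heck".
        token = re.sub(r"[^\w]", "", raw.lower())
        for category, terms in WATCHLISTS.items():
            if token in terms:
                flags.append(Flag(category, token, start))
    return flags
```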

Our Services at Digital Nirvana

At Digital Nirvana, we help you turn massive libraries into instantly searchable assets. The MetadataIQ platform drops frame‑accurate tags into Avid, Adobe, and custom MAMs without extra middleware. Paired with MonitorIQ for compliance logging and Media Enrichment Services for captions and translations, our suite covers every point in your workflow. Media teams that deploy MetadataIQ report a 40 percent cut in search time and a double‑digit boost in content reuse. Book a demo to see how our AI tools secure faster delivery and lower operating costs.

Features and Core Capabilities of MetadataIQ

Digital Nirvana built MetadataIQ to solve real production headaches, from format incompatibilities to compliance. The features below keep media teams moving.

Seamless support for multiple audio and video formats

MetadataIQ ingests codecs and wrappers such as ProRes, XDCAM, DNxHD, H.264, MXF, and MP4, plus an expanding list of mezzanine and proxy formats. It reads channel layouts and preserves audio channel mapping so nothing breaks downstream. Support for diverse audio formats such as WAV, AAC, and Dolby E means the tagger never asks for a conversion that wastes render time.

AI‑powered auto‑tagging for faster metadata generation

Machine‑learning models identify faces, objects, topics, and on‑screen text. Speech recognition adds verbatim transcripts that feed closed captions and deep keyword search. Each audio tag joins the video markers at source timecode, building a multi‑layered metadata set you can trust.
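One way to picture that multi‑layered set is as separate, time‑sorted marker lists per layer, interleaved into a single timeline. This sketch assumes each marker's source timecode has already been converted to an absolute frame count; the `Marker` type and `merge_layers` helper are hypothetical.

```python
import heapq
from typing import NamedTuple


class Marker(NamedTuple):
    frame: int    # source timecode expressed as an absolute frame count
    layer: str    # "audio" or "video"
    label: str


def merge_layers(*layers: list[Marker]) -> list[Marker]:
    """Interleave per-layer marker lists (each already sorted) into one timeline.

    Markers compare as tuples, so ties on frame fall back to layer name, then label.
    """
    return list(heapq.merge(*layers))
```

`heapq.merge` avoids re‑sorting everything, provided each layer is already in timecode order.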

Frame‑accurate metadata insertion for better compliance

MetadataIQ writes tags directly against source timecode. That precision lets standards teams jump to an exact frame when validating loudness or ad marker placement. The same timecode fidelity helps captions meet the synchronicity requirement in the FCC's closed captioning quality rules.
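Frame‑accurate tagging comes down to converting between SMPTE timecode and absolute frame counts. The sketch below handles non‑drop‑frame timecode at integer frame rates only; 29.97 fps drop‑frame timecode skips certain frame numbers and needs an extra correction that is deliberately omitted here. The function names are illustrative.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames: int, fps: int) -> str:
    """Inverse of timecode_to_frames for non-drop-frame timecode."""
    total_seconds, ff = divmod(frames, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"
```

At 30 fps, `timecode_to_frames("01:00:00:00", 30)` returns 108,000, consistent with the roughly 100,000 frames an hour of video holds.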

Deep integration with Avid and other media platforms

The tool docks inside Avid MediaCentral and Media Composer, exposing tags in panes editors already use. Plugins for Adobe Premiere and popular MAMs push the same data everywhere, keeping workflows intact. No separate editor window means no context switching.

How It Helps Your Media Team – Benefits

MetadataIQ improves every link in the chain once the system starts tagging.

Reducing time spent on metadata entry

By outsourcing tagging to AI, assistant editors reclaim hours per shift. That capacity flows back into creative polish and faster revisions. Projects hit deadlines without overtime.

Enhancing discoverability across digital libraries

Searchable tags lift asset reuse. Marketing teams find archival shots for promos. Social teams cut highlight reels without waiting on archive staff. Each reuse multiplies return on production spend. For deeper reading on discoverability, explore our blog on AI metadata tagging.

Automating compliance and regulatory checks

MetadataIQ flags banned content, adult language, and regional ad restrictions right at ingest. Compliance officers receive alerts with timecode stamps, shortening approval cycles and avoiding costly re‑edits. For a deeper dive into revenue impacts, see our post on metadata monetization strategies.

How MetadataIQ Handles Audio Tags and Formats

MetadataIQ treats audio as first‑class data, never an afterthought. The engine parses voice tracks alongside music beds to preserve every nuance.

Working with diverse audio formats across sources

Whether your clip arrives in WAV, AIFF, AAC, or Dolby E, MetadataIQ ingests and normalizes without lossy transcodes. Sample rates and bit depths stay intact so exports remain pristine. If your archive relies on legacy audio formats, MetadataIQ keeps them searchable.
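A quick way to confirm that sample rate and bit depth survive a round trip is to compare the header parameters of the source and the export. This sketch uses Python's standard‑library `wave` module, so it only covers PCM WAV files; AIFF, AAC, and Dolby E would need other tooling. `audio_params` and `params_preserved` are hypothetical helper names.

```python
import io
import wave


def audio_params(wav_bytes: bytes) -> tuple[int, int, int]:
    """Return (channels, sample_rate_hz, bit_depth) read from a PCM WAV header."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        return wav.getnchannels(), wav.getframerate(), wav.getsampwidth() * 8


def params_preserved(source: bytes, export: bytes) -> bool:
    """True when the export kept the source's channel count, sample rate, and bit depth."""
    return audio_params(source) == audio_params(export)
```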

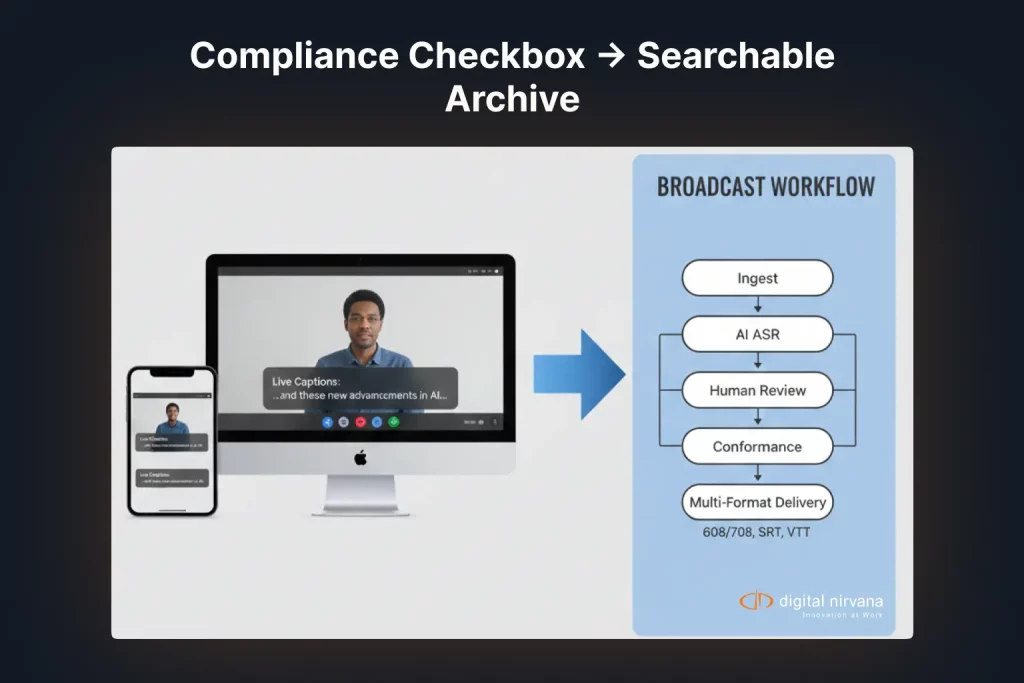

Audio tag processing for speech‑to‑text and indexing

Built‑in automatic speech recognition (ASR) converts speech to text in near real time, then assigns speaker IDs and topic markers. Editors jump to a phrase by clicking the transcript, and search engines pull matching clips instantly.
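Clicking a transcript phrase to jump to it implies a lookup from words to timestamps. Here is a minimal sketch, assuming the ASR output arrives as a word list with start times and speaker IDs; the `Word` type and `find_phrase` function are illustrative, not part of any MetadataIQ API.

```python
from typing import NamedTuple


class Word(NamedTuple):
    text: str
    start: float    # seconds from the start of the asset
    speaker: str


def find_phrase(words: list[Word], phrase: str) -> list[tuple[float, str]]:
    """Return (start_seconds, speaker) for each place the phrase is spoken."""
    target = phrase.lower().split()
    n = len(target)
    if n == 0:
        return []
    # Normalize transcript tokens: lower-case and trim trailing/leading punctuation.
    tokens = [w.text.lower().strip(".,!?;:") for w in words]
    hits = []
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == target:
            hits.append((words[i].start, words[i].speaker))
    return hits
```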

Transcription metadata tagging for captioning workflows

The same transcripts drive caption files, saving another pass through caption software. Producers export ready‑to‑air sidecar files while maintaining alignment with the master asset. For more on caption automation, review our blog on AI closed‑captioning.
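Turning timed transcript segments into a sidecar file is mostly formatting. The sketch below writes SubRip (.srt), one common sidecar format, and assumes the transcript has already been split into caption‑length cues; broadcast deliverables often call for other formats such as SCC, which this does not cover. `Cue`, `srt_time`, and `to_srt` are hypothetical names.

```python
from typing import NamedTuple


class Cue(NamedTuple):
    start: float   # seconds
    end: float     # seconds
    text: str


def srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hh, rem = divmod(total_ms, 3_600_000)
    mm, rem = divmod(rem, 60_000)
    ss, ms = divmod(rem, 1000)
    return f"{hh:02d}:{mm:02d}:{ss:02d},{ms:03d}"


def to_srt(cues: list[Cue]) -> str:
    """Render cues as SubRip blocks: index, time range, text, separated by blank lines."""
    blocks = []
    for i, cue in enumerate(cues, start=1):
        blocks.append(f"{i}\n{srt_time(cue.start)} --> {srt_time(cue.end)}\n{cue.text}\n")
    return "\n".join(blocks)
```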

Integrating MetadataIQ With Your Existing Systems

Rolling out a media tagger must feel invisible to busy teams. MetadataIQ delivers drop‑in connectivity and flexible hosting.

Plug‑and‑play connection to Avid MediaCentral

Avid users activate MetadataIQ inside the MediaCentral interface with a short license code. Tags appear in the same bin columns they already sort. No extra dashboards required.

Cloud‑based deployment with API access

Cloud hosting lets you scale compute on demand while an open REST API passes tags to any MAM or CMS. Integration teams script custom endpoints in hours rather than weeks. MetadataIQ's focus on archival integrity also aligns with the digital preservation best practices published by the Library of Congress.
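To give a sense of how small an integration script can be, here is a sketch that builds a JSON POST request for pushing tags into a MAM. The URL, path, payload shape, and bearer‑token header are placeholders invented for illustration, not MetadataIQ's documented API; check the vendor's API reference before wiring anything up.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the path your MAM or CMS actually exposes.
API_URL = "https://mam.example.com/api/v1/assets/{asset_id}/tags"


def build_tag_request(asset_id: str, tags: list[dict], token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying tags as JSON."""
    body = json.dumps({"tags": tags}).encode("utf-8")
    return urllib.request.Request(
        API_URL.format(asset_id=asset_id),
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )
```

Sending it is one more call, `urllib.request.urlopen(req, timeout=10)`, which the sketch leaves out so it runs without a live server.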

Real‑time updates without workflow disruption

Because MetadataIQ runs alongside ingest, it fills tag fields while files copy. Editors open assets already enriched, eliminating hand‑offs and idle time.

MetadataIQ in Action: Real Use Cases

The best proof lives in the field. Here is how customers wield MetadataIQ every day.

Tagging live broadcasts for compliance logs

Sports networks run MetadataIQ on live feeds to generate minute‑by‑minute logs that meet transmission rules and aid highlight creation.
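Producing a minute‑by‑minute log from tags is essentially bucketing events by elapsed time. This sketch assumes the tagger already emits `(seconds_since_start, description)` events for the feed; the event text and the `minute_log` helper are hypothetical.

```python
from collections import defaultdict


def minute_log(events: list[tuple[float, str]]) -> dict[int, list[str]]:
    """Group (seconds_since_start, description) events into per-minute log entries."""
    log = defaultdict(list)
    for seconds, description in sorted(events):
        log[int(seconds // 60)].append(description)
    return dict(log)
```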

Supporting captioning with accurate metadata

Newsrooms push speech‑to‑text output straight into caption encoders, cutting manual typing and ensuring captions match audio.

Organizing archives with searchable tags

Studios resurface decades‑old footage by querying tags such as actor names or landmarks. Previously lost gems turn into anniversary specials. See our blog on information access for a real‑world example.

Improving retrieval speed for production teams

Post houses report clip retrieval times dropping from 30 minutes to 90 seconds once tags populate search databases, showing that a well‑implemented tag editor pays daily dividends.

How to Choose the Right Media Tagging Platform

Selecting a tagger requires more than a flashy demo. Use these factors to weigh options.

Must‑have features for enterprise‑grade workflows

Look for native support for your ingest formats, AI models trained on broadcast data, and frame‑accurate writing to avoid re‑sync headaches.

Evaluating tag editor compatibility with your system

Confirm that the tagger writes metadata fields your MAM reads. A mismatch forces tedious mapping or data loss.
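A compatibility check can start as simply as mapping each tagger output field to a MAM field and listing anything that has no destination. The field names and `FIELD_MAP` below are hypothetical; your MAM's schema determines the real targets.

```python
# Hypothetical mapping from tagger output fields to the fields a MAM stores.
FIELD_MAP = {
    "speaker": "People",
    "location": "Location",
    "topic": "Keywords",
}


def map_fields(tagger_record: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Translate a tagger record into MAM fields; return (mapped, unmapped_field_names)."""
    mapped = {}
    unmapped = []
    for field, value in tagger_record.items():
        target = FIELD_MAP.get(field)
        if target is None:
            unmapped.append(field)  # without a mapping, this data would be lost
        else:
            mapped[target] = value
    return mapped, unmapped
```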

Considerations around security, scale, and formats supported

Demand encryption in transit and at rest, role‑based access, and autoscaling compute for large ingest spikes. Ensure the vendor certifies against your compliance framework and handles all major file formats.

Partner With Digital Nirvana for Seamless Tagging

Our services at Digital Nirvana remove every barrier between raw footage and searchable archives. When you pair MetadataIQ with solutions like MediaServices IQ, you get end‑to‑end automation that speeds production from ingest to distribution. Clients rely on us for dedicated support, predictable costs, and constant feature updates driven by real customer input. Connect with our team to see how we accelerate discovery, cut compliance headaches, and give editors back the hours they deserve.

In summary…

MetadataIQ proves that smart tagging transforms content libraries from cost centers into revenue engines.

- Media taggers replace manual logging with AI‑driven tagging.

- Editors find clips with plain‑text queries instead of sifting through bins.

- MetadataIQ supports a wide range of video and audio formats without quality loss.

- AI models add speech‑to‑text, facial recognition, and object detection for deeper search.

- Avid and cloud integrations roll out quickly, with tags visible in familiar bin columns.

- Media teams reclaim hours daily, pass compliance faster, and reuse more content.

FAQs

What is the purpose of a media tagger?

A media tagger attaches searchable metadata such as keywords, speakers, and scene details to audio and video so editors can locate assets quickly.

Does MetadataIQ work with cloud storage?

Yes. You can deploy MetadataIQ in the cloud and connect it to Amazon S3, Azure Blob Storage, or private object stores through the API.

How accurate is the speech‑to‑text engine?

In broadcast environments, MetadataIQ delivers word‑error rates as low as 5 percent when provided with clean audio.
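Word error rate is conventionally defined as (substitutions + deletions + insertions) divided by the number of words in a reference transcript, so a 5 percent WER means roughly one word in twenty is wrong. The sketch below computes it with a word‑level edit distance; it assumes simple whitespace tokenization and lower‑casing, and says nothing about how any vendor measured its published figures.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count.

    Computed as a word-level Levenshtein distance with dynamic programming.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the reference words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)
```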

Can I customize the tags that MetadataIQ creates?

You can train custom vocabularies and object models or map generated tags to your own taxonomy.

Is MetadataIQ suitable for digital media search platforms outside Avid?

Absolutely. The open API lets you push tags into any digital media search platform, DAM, or CMS.