The broadcasting industry is undergoing rapid change, driven by the increasing demand for live broadcasts. The resulting surge in content requires robust content moderation to ensure quality, compliance, and viewer satisfaction. AI content moderation is emerging as a powerful tool, transforming how broadcasters maintain their content standards in a fast-paced digital environment. Its value lies in its ability to process vast amounts of data efficiently, so that inappropriate content can be swiftly identified and handled.

The Need for Content Moderation in Broadcasting

Content moderation in broadcasting is crucial because content comes from diverse and constantly changing sources. Broadcasters must maintain high standards while complying with regulatory requirements. Content can vary widely in quality and appropriateness, which makes purely manual moderation daunting. Live broadcasts add a further challenge: there is little time to review and filter content before it goes on air. Effective content moderation helps broadcasters protect their reputation, avoid legal issues, and give their audience a safe and engaging viewing experience.

What is AI Content Moderation?

AI content moderation uses technologies such as machine learning, natural language processing (NLP), and computer vision to analyze and manage content. Unlike traditional moderation, which relies heavily on human reviewers, AI-driven systems can process and evaluate vast amounts of data in real time. Machine learning models can be trained to recognize inappropriate content such as hate speech, violence, or nudity. NLP helps the system interpret context and sentiment, which improves the accuracy of moderation decisions. Computer vision lets AI systems analyze visual content and detect explicit or harmful imagery. Together, these techniques can make AI content moderation faster and more consistent than purely manual review.

Benefits of AI Content Moderation for Broadcasters

Real-Time Moderation Capabilities

AI content moderation systems can analyze and flag content in real time, which is particularly valuable for live broadcasts. When paired with a short broadcast delay, this lets inappropriate content be identified and addressed before it reaches the audience.
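Real-time analysis on its own cannot recall a segment that has already aired, so live workflows commonly pair it with a short broadcast delay. The Python sketch below illustrates that pairing under simplifying assumptions: the feed arrives as discrete segments that already carry a speech-to-text transcript, `is_inappropriate` stands in for whatever trained model a real system would call, and flagged segments are simply muted. `Segment`, `delayed_moderation`, and the `[MUTED]` placeholder are hypothetical names for illustration, not part of any product's API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Segment:
    index: int       # position of this slice in the live feed
    transcript: str  # speech-to-text output for the slice (assumed available)


MUTED = "[MUTED]"  # hypothetical placeholder that replaces flagged audio


def _release(seg: Segment, flagged: bool) -> Segment:
    """Return what actually goes to air: a muted copy if flagged, else the original."""
    return Segment(seg.index, MUTED) if flagged else seg


def delayed_moderation(
    segments: Iterable[Segment],
    is_inappropriate: Callable[[Segment], bool],
    delay: int = 3,
) -> Iterator[Segment]:
    """Hold each segment in a `delay`-segment buffer before releasing it to air.

    Each segment is classified as soon as it arrives; the buffer models the
    window a real system would have to act on a flag before the segment airs.
    """
    if delay < 0:
        raise ValueError("delay must be non-negative")
    buffer: deque = deque()  # (segment, flagged) pairs waiting to air
    for seg in segments:
        buffer.append((seg, is_inappropriate(seg)))
        if len(buffer) > delay:
            yield _release(*buffer.popleft())
    while buffer:  # end of feed: flush whatever is still buffered
        yield _release(*buffer.popleft())
```

For example, with `delay=2` and a classifier that flags any transcript containing `"badword"`, the feed `"hello"`, `"badword here"`, `"goodbye"` airs as `"hello"`, `"[MUTED]"`, `"goodbye"`. A longer delay gives the model (and any human reviewer) more time to act, at the cost of a larger gap between the live event and what viewers see.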

Scalability

AI systems are designed to handle large volumes of content, making them well suited to broadcasters with extensive content libraries. This scalability helps keep moderation consistent regardless of content volume.

Improved Accuracy and Consistency

AI content moderation reduces the risk of human error and applies the same criteria to every item, providing more consistent results. AI algorithms can also detect patterns and subtle instances of inappropriate content that human moderators might miss, helping maintain a higher standard of content quality.

How AI Content Moderation Works

AI content moderation involves several key steps. First, AI models analyze the content for specific indicators of inappropriate material, such as hate speech, violence, nudity, and other harmful content. Machine learning and NLP help the system interpret context and nuance, which improves detection accuracy. Content the AI flags is then reviewed by human moderators, who make the final decision. This combination of AI efficiency and human judgment produces a balanced and effective moderation process. Finally, the moderators' decisions can be fed back to the AI as training data, so its accuracy and reliability continue to improve over time.
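The flag, review, and feedback loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `ModerationPipeline`, its default `flag_threshold` of 0.5, and the in-memory queue are hypothetical, and `score` is assumed to be any callable that returns a violation probability between 0 and 1 (in practice, a trained classifier).

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModerationPipeline:
    # Stand-in for a trained model: returns a violation probability in [0, 1].
    score: Callable[[str], float]
    flag_threshold: float = 0.5
    # item_id -> text waiting for a human decision
    review_queue: dict[str, str] = field(default_factory=dict)
    # (text, is_violation) pairs collected for the next retraining run
    training_feedback: list[tuple[str, bool]] = field(default_factory=list)

    def analyze(self, item_id: str, text: str) -> str:
        """Step 1: the model scores the item; high scores are queued for humans."""
        if self.score(text) >= self.flag_threshold:
            self.review_queue[item_id] = text
            return "flagged"
        return "published"

    def record_review(self, item_id: str, is_violation: bool) -> str:
        """Step 2: a human moderator makes the final call on a flagged item."""
        text = self.review_queue.pop(item_id)  # KeyError if the item was never flagged
        # Step 3: keep the human decision as a labeled example for retraining.
        self.training_feedback.append((text, is_violation))
        return "removed" if is_violation else "published"
```

For instance, with a toy scorer that returns 0.9 for any text containing "violence", a clip described as "Graphic violence in news footage" would be flagged, and a moderator who judges it newsworthy could call `record_review(..., is_violation=False)`. That decision both publishes the clip and adds a labeled counterexample to `training_feedback`, which is how human judgment can feed back into the next model update.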

Implementing AI Content Moderation in Broadcasting Workflows

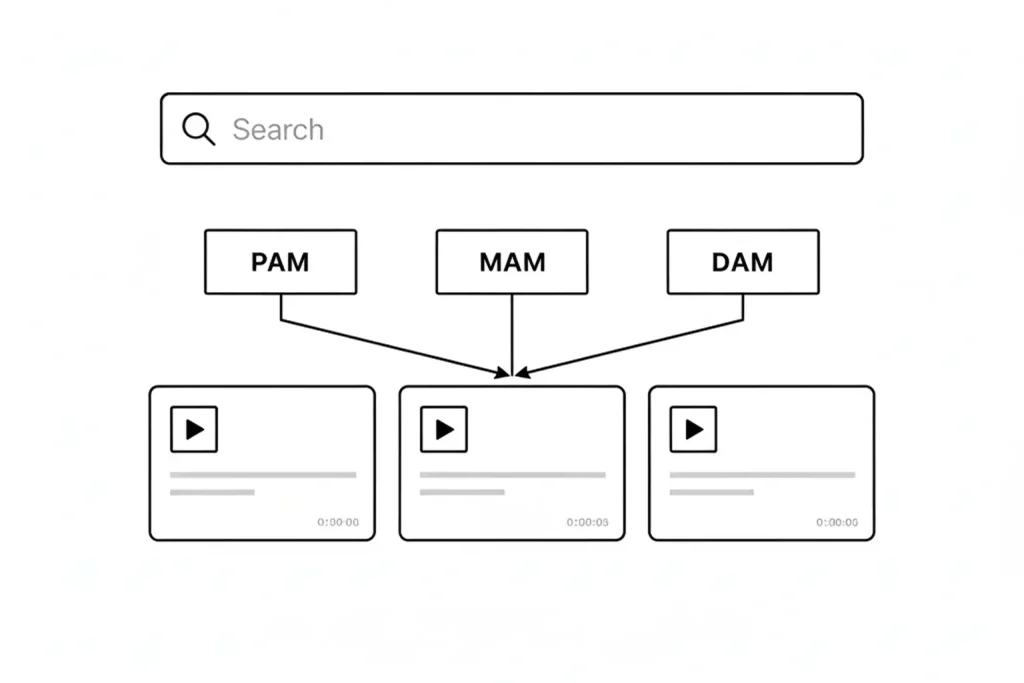

Integration with Existing Systems

AI content moderation tools can integrate with existing Production Asset Management (PAM) and Media Asset Management (MAM) systems. This integration streamlines workflows, allowing broadcasters to manage content more efficiently. By integrating AI tools, broadcasters can automate much of the moderation process, reducing the workload on human moderators and ensuring that all content is reviewed consistently.

Best Practices for Setup

To implement AI content moderation effectively, broadcasters should follow a few best practices. First, train AI models on the specific content types the broadcaster handles so they can accurately identify inappropriate material. Second, update models regularly so they adapt to new content trends and challenges. Third, balance automated and human moderation so that nuanced decisions are made accurately. Human moderators play a vital role in reviewing flagged content and providing feedback that improves AI performance.
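One common way to balance automated and human moderation is to route each item by model confidence: clear violations are removed automatically, clearly clean items are approved, and the uncertain middle band goes to human moderators. The sketch below illustrates this in Python; the `route` function, the content-type names, and every threshold value are hypothetical assumptions chosen for illustration, and real thresholds would be tuned on each broadcaster's own reviewed data.

```python
from enum import Enum


class Action(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    AUTO_REMOVE = "auto_remove"


# Hypothetical thresholds per content type: (approve_below, remove_at_or_above).
# News gets a high removal bar because violent footage can be newsworthy;
# children's programming gets a low one.
THRESHOLDS: dict[str, tuple[float, float]] = {
    "news": (0.40, 0.98),
    "kids": (0.10, 0.80),
    "default": (0.30, 0.95),
}


def route(score: float, content_type: str) -> Action:
    """Map a model's violation probability to an automated or human action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    approve_below, remove_at = THRESHOLDS.get(content_type, THRESHOLDS["default"])
    if score >= remove_at:
        return Action.AUTO_REMOVE
    if score < approve_below:
        return Action.AUTO_APPROVE
    return Action.HUMAN_REVIEW  # uncertain band: let a person decide
```

Keeping separate thresholds per content type reflects the advice to train for specific content: under these example values, a score of 0.85 would be removed automatically for children's programming but sent to a human for news, where violent footage can be legitimate.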

Challenges and Limitations of AI Content Moderation

Potential Biases in AI Algorithms

AI systems can exhibit biases based on the data they are trained on. The AI may produce skewed results if the training data is not representative or contains biases. Regular audits and updates to AI models are necessary to ensure fairness and accuracy in content moderation.
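A regular audit can start with a simple measurement. The Python sketch below compares false-positive rates (clean content the model wrongly flagged) across groups of content or creators, using human-reviewed decisions as ground truth. It is one illustrative fairness check, not a complete audit methodology; the group labels, the record layout, and the 0.05 tolerance are hypothetical assumptions.

```python
from collections import defaultdict
from typing import Iterable


def false_positive_rates(records: Iterable[tuple[str, bool, bool]]) -> dict[str, float]:
    """Per-group share of clean items that the model flagged anyway.

    Each record is (group, model_flagged, human_says_violation).
    Confirmed violations are skipped: they cannot be false positives.
    """
    clean_total: dict[str, int] = defaultdict(int)
    clean_flagged: dict[str, int] = defaultdict(int)
    for group, model_flagged, is_violation in records:
        if is_violation:
            continue
        clean_total[group] += 1
        if model_flagged:
            clean_flagged[group] += 1
    return {group: clean_flagged[group] / n for group, n in clean_total.items()}


def audit(records, max_gap: float = 0.05) -> tuple[dict[str, float], float, bool]:
    """Return (rates, largest gap between groups, whether the gap is within tolerance)."""
    rates = false_positive_rates(records)
    if len(rates) < 2:
        raise ValueError("need clean examples from at least two groups to compare")
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap <= max_gap
```

With made-up records where the model flags 1 of 4 clean items from one group and 2 of 4 from another, the rates are 0.25 and 0.5, the gap of 0.25 exceeds the tolerance, and the audit fails. That would suggest the model over-flags one group's legitimate content and may need more representative training data.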

Privacy Concerns

AI content moderation involves analyzing large amounts of data, which raises potential privacy concerns. Broadcasters must ensure compliance with data protection regulations and implement robust privacy measures to safeguard user information. Transparency in data use and security is critical for maintaining user trust.

Balancing Automation with Human Oversight

While AI can significantly enhance content moderation, human oversight remains crucial. Human moderators provide the necessary context and judgment that AI may lack. Combining AI’s efficiency with human expertise ensures a balanced and effective moderation process, addressing the limitations of both approaches.

The Role of AI in Enhancing Viewer Experience

Beyond removing inappropriate content, AI can also play a pivotal role in enhancing the overall viewer experience. By analyzing viewer preferences and behavior, AI can offer personalized content recommendations that align with individual tastes. This level of personalization keeps viewers engaged and encourages longer viewing times, which is crucial for broadcasters aiming to boost ratings and ad revenue. AI can also support more inclusive programming by helping identify biased or unrepresentative content, so that the lineup reflects a broad audience.

Ethical Considerations in AI Content Moderation

While AI content moderation offers numerous benefits, it also raises important ethical considerations. The algorithms used should be as transparent and unbiased as possible to avoid unfair treatment of certain types of content or creators, and making AI decisions understandable is essential to building trust among viewers and content creators. Broadcasters also need clear guidelines and accountability for how AI is used in moderation, including how privacy and data security concerns are addressed. By adhering to ethical standards and regulatory requirements, broadcasters can harness the power of AI while maintaining public trust and upholding content integrity.

Case Studies: Successful AI Content Moderation in Broadcasting

Major Network’s AI Moderation Success

A prominent broadcasting network known for its extensive live programming faced significant challenges in moderating live content because of the sheer volume and speed at which it was produced. To address this, the network implemented an AI content moderation system designed to operate in real time. The system used machine learning algorithms and natural language processing to analyze live feeds instantly, identifying and flagging inappropriate content such as offensive language, violent imagery, and sensitive topics.

The impact was immediate and measurable. Within six months, the network reported a 50% reduction in inappropriate content that reached the airwaves. This improvement was attributed to the AI system’s ability to rapidly process and evaluate live content, allowing human moderators to act quickly on flagged issues. The network also noted a significant increase in viewer trust, as feedback indicated that audiences felt more secure and satisfied with the quality of the content. Furthermore, compliance with broadcasting standards improved, reducing the risk of fines and legal challenges. The efficiency of the AI system also allowed the network to allocate resources more effectively, focusing human moderators on more complex issues that required nuanced judgment.

Enhancing Viewer Experience for an Independent Media Company

An independent media company specializing in niche broadcasting faced difficulties managing the quality and appropriateness of its vast content library. Given its limited resources, manual content moderation was not feasible. The company integrated an AI content moderation tool to automate the review process.

The AI system combined computer vision to analyze visual content with natural language processing to evaluate text and audio. This comprehensive approach enabled the system to detect explicit content, hate speech, and other violations accurately. Over the course of a year, the company saw a dramatic improvement in content quality. Beyond filtering out inappropriate content, the tool also tagged and categorized media, making content easier to manage and retrieve.

As a result, viewer engagement surged. With higher-quality, well-moderated content, the company saw a 30% increase in viewer retention and a 20% boost in new subscriptions. Automating the moderation process freed the company’s staff to concentrate on creative production, leading to a more diverse and innovative content lineup. This transformation improved operational efficiency and enhanced the overall viewer experience, solidifying the company’s reputation in the industry.

These detailed case studies illustrate the transformative impact of AI content moderation in the broadcasting industry, highlighting specific results and improvements in both large- and small-scale operations.

Future Trends in AI Content Moderation

Advances in AI Technology

As AI technology evolves, content moderation capabilities are likely to become more sophisticated. Advances in natural language understanding and contextual analysis should improve AI's ability to grasp complex nuance and context, helping it detect subtle instances of inappropriate content and moderate more accurately.

Predictive Analytics and Proactive Moderation

Future AI systems will likely incorporate predictive analytics, which identify patterns and trends in content so that broadcasters can anticipate potential issues and address them before they arise. This proactive approach would further improve content safety and quality.

Conclusion

AI content moderation is transforming the broadcasting industry by providing real-time, scalable, and accurate moderation. By integrating AI tools with existing systems and balancing automation with human oversight, broadcasters can effectively manage content, ensure compliance, and enhance viewer experiences. Embracing AI content moderation is a strategic move for broadcasters looking to stay ahead in a rapidly evolving digital landscape. As AI technology advances, its role in content moderation will only grow, offering broadcasters new opportunities to improve content quality and viewer engagement.

Digital Nirvana: Empowering Knowledge Through Technology

Digital Nirvana stands at the forefront of the digital age, offering cutting-edge knowledge management solutions and business process automation.

Key Highlights of Digital Nirvana –

- Knowledge Management Solutions: Tailored to enhance organizational efficiency and insight discovery.

- Business Process Automation: Streamline operations with our sophisticated automation tools.

- AI-Based Workflows: Leverage the power of AI to optimize content creation and data analysis.

- Machine Learning & NLP: Our algorithms improve workflows and processes through continuous learning.

- Global Reliability: Trusted worldwide for helping organizations scale, ensure compliance, and reduce costs.

Book a free demo to scale up your content moderation, metadata, and indexing strategy for your media assets with minimal effort and get a firsthand experience of Digital Nirvana’s services.

FAQs:

1. What is AI content moderation?

AI content moderation uses technologies like machine learning, natural language processing, and computer vision to analyze and manage content, identifying inappropriate material efficiently and accurately.

2. Why is content moderation important for broadcasters?

Content moderation ensures broadcasters maintain high standards, comply with regulations, and provide a safe viewing experience, protecting their reputation and viewer trust.

3. How does AI improve content moderation?

AI improves content moderation by providing real-time analysis, scalability to handle large volumes of content, and increased accuracy and consistency in identifying inappropriate material.

4. What are the challenges of AI content moderation?

Challenges include potential biases in AI algorithms, privacy concerns, and balancing automation with human oversight to ensure nuanced decision-making.

5. What are the future trends in AI content moderation?

Future trends include advances in AI technology, such as improved natural language understanding and predictive analytics, which will enhance content moderation capabilities and enable proactive moderation.