Closed captioning services offer broadcasters a powerful and lasting connection between spoken content and every viewer’s screen. Accurate captions not only ensure regulatory compliance but also make content accessible to deaf and hard-of-hearing audiences. They boost SEO by adding fresh, searchable text data, making them a strategic asset in today’s digital landscape.

The core of a successful captioning strategy lies in a unified workflow that combines skilled editors with the power of artificial intelligence. This approach safeguards broadcast licenses, reduces editing hours, and opens up multilingual markets, all without the need for costly reshoots.

In this guide, we’ll walk you through the essential standards, tools, and trends shaping modern captioning strategies. Keep reading for a section-by-section breakdown designed to help your team make informed decisions and move fast.

Why Closed Captioning Matters in Broadcast Compliance

Closed captioning sits at the heart of legal duty and audience expectation, making it a non-negotiable line item for every media budget. A reliable service guards against fines, helps brands show social responsibility, and boosts ad revenue because compliant shows keep sponsors confident. When captions arrive on time and in sync, deaf viewers follow the plot without frustration, hearing viewers watch on mute during commutes, and search engines index every keyword for better discovery. Those combined gains outweigh the cost of any caption contract. Smart leaders treat captions as vital infrastructure, not an afterthought.

Meeting FCC and ADA Mandates

The Federal Communications Commission enforces accuracy, synchronicity, completeness, and placement benchmarks that broadcasters must hit on every airing. Station managers study the updated FCC closed captioning guidelines to set house rules, then hire vendors who document compliance with time-stamped reports. The Americans with Disabilities Act and Section 508 extend similar duties to government and educational streams, so sports arenas and campuses need captions as much as prime-time networks. Clear records of performance silence complaints before they reach legal desks. In short, meeting the mandate keeps the signal on air and the budget in check.

Captions as Accessibility Tools

Captions turn sound into text for 48 million Americans with some level of hearing loss, allowing them to experience live content without delay. But their impact goes far beyond accessibility. Captions help language learners grasp fast-paced dialogue and slang, let bar patrons follow a muted game, and enable commuters to consume news silently during their daily grind. Accessibility has evolved from a moral gesture into a mainstream viewer preference that platforms compete to meet. By treating captions as a core feature rather than a special overlay, networks win loyalty from viewers who might otherwise swipe away. Inclusivity pays dividends in minutes watched and shares earned.

Captions and Viewer Engagement

Analytics across news, sports and entertainment show that videos with captions post longer average watch times and higher completion rates. Text reinforces dialogue that quick speakers blur, keeps audiences watching in loud environments and gives algorithms more clues to rank the clip. Advertisers notice the boost and pay stronger CPMs because they trust the audience will stay. Social media tests reveal that captioned clips win more reposts, spreading the brand’s reach without extra spend. Engagement becomes a measurable return on the caption line item.

How Digital Nirvana Supports Compliance

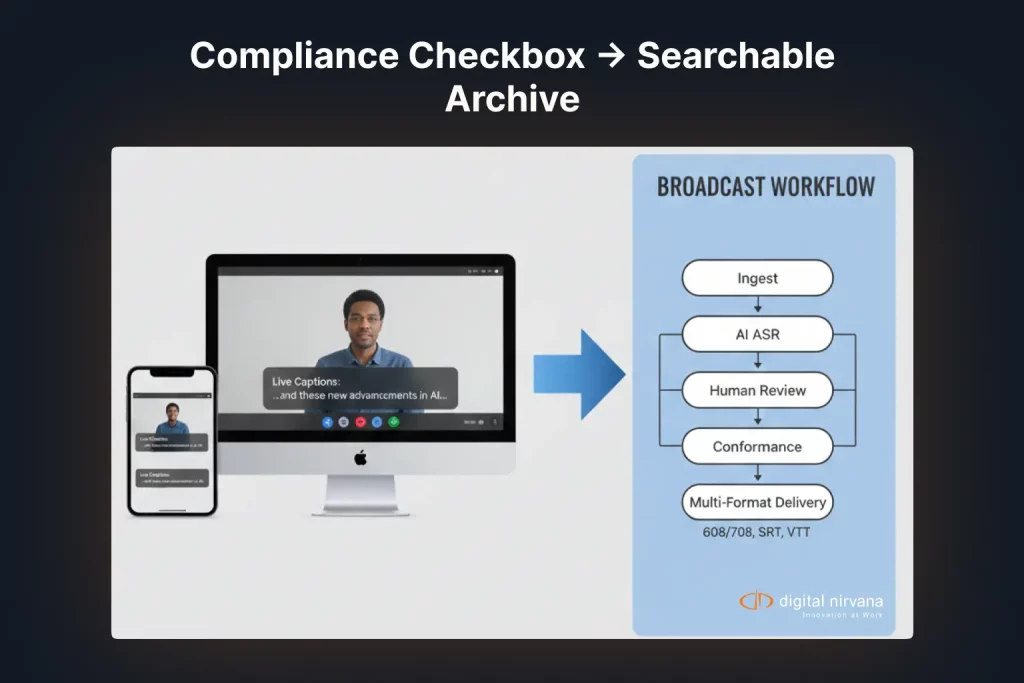

At Digital Nirvana, we combine the speed of artificial intelligence with the precision of human editors to deliver captions that are both broadcast-ready and legally defensible. Our Trance captioning platform drafts transcripts in seconds, then seamlessly guides editors through intelligent, color-coded corrections, speaker identification, and fine-tuned placement adjustments.

With real-time output support for SDI, NDI, or HLS streams and no additional hardware required, Trance fits effortlessly into live and post workflows. Built-in compliance checks automatically flag FCC style violations, CEA-608/708 field mis-mappings, and missing sound effect tags, ensuring quality and regulatory alignment before files ever leave the cloud.

Even better, our deep integration with MetadataIQ allows every caption to sync instantly with media indexing systems like Avid, making your content searchable and accessible the moment it’s published.

Digital Nirvana doesn’t just caption content; we optimize it for performance, compliance, and reach.

What Makes a Closed Captioning Service Reliable

A reliable captioning service delivers accurate text, precise timing, and the ability to scale without problems. It starts with trained captioners, uses strong software, and includes a support team that can respond quickly during live events. Providers who focus on quality consistently perform better than low-cost options that cut corners. Choosing the right service from the start helps avoid mistakes and last-minute stress when it matters most.

Accuracy and Timing Standards

Professional captioning providers stake their reputation on two critical metrics: 98% word accuracy and a sync window of two frames or less. These benchmarks aren’t just industry best practices; they’re viewer expectations.

The process starts with automated checks for spelling, homophones, and speaker identification, followed by meticulous human review for final approval. This layered approach minimizes errors and maximizes trust.

Timely captions are just as important as accurate ones. When dialogue aligns precisely with on-screen speech, it preserves the lip-sync illusion and enhances viewer immersion. Audiences instinctively trust captions that “feel” right, and question those that don’t.

Accuracy and timing are the twin pillars of effective captioning, and no serious broadcaster can afford to compromise on either.
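To make the two benchmarks concrete, here is a minimal sketch of how a QC script might score them. It assumes accuracy is measured as one minus the word error rate against a verified reference transcript, and that sync is checked at 29.97 frames per second; the function names and defaults are illustrative, not taken from any vendor tool.

```python
def word_accuracy(reference: str, hypothesis: str) -> float:
    """Return 1 - WER, using word-level edit distance (an assumed metric)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        return 1.0 if not hyp else 0.0
    # prev[j] is the edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # word missing from captions
                            curr[j - 1] + 1,      # extra word in captions
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return max(0.0, 1.0 - prev[-1] / len(ref))


def within_sync_window(caption_start_s: float, speech_start_s: float,
                       fps: float = 29.97, max_frames: int = 2) -> bool:
    """True when the caption lands within max_frames of the spoken word."""
    offset_frames = abs(caption_start_s - speech_start_s) * fps
    return offset_frames <= max_frames


score = word_accuracy("the mayor spoke at noon today",
                      "the mayor spoke at noon today")
meets_target = score >= 0.98 and within_sync_window(10.05, 10.0)
```

Vendors may count punctuation, speaker labels, or casing differently, so the exact scoring rule is worth pinning down in the contract.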

Real-Time vs. Pre-Recorded Captioning

Live news and sports rely on stenographers, voice writers, or ASR editors who push text within seconds of speech. Pre-recorded shows enjoy more polish, style cues, and multi-language tracks because teams edit offline. A reliable vendor offers both paths in one contract, switching smoothly when a taped segment feeds into a live press conference. Consistency across modes maintains brand tone and keeps technical staff sane. Flexibility proves mission-critical during breaking events.

Language Support and Translation Options

Growing channels often add Spanish or French streams first, then branch into Mandarin or Hindi as markets expand. Caption partners should supply human translators who understand idiom and regional slang, plus machine translation that drafts quick first passes. An explainer like Subtitles and Closed Captions clarifies format choices for each locale. Accurate translation avoids cultural misfires and widens the audience without inflating production hours. Multilingual support marks a vendor that plans for tomorrow.

Integration With Existing Broadcast Systems

Caption files must drop into Avid, Edius or cloud MAM folders without manual reshuffling. Modern providers expose REST APIs that let schedulers trigger orders and pull finished SRT or WebVTT files automatically. Plug-ins for Adobe Premiere and Avid MediaCentral show captions on the timeline so editors can tweak placement before render. Seamless integration cuts workflow friction and reduces human error. Compatibility trumps fancy dashboards when deadlines loom.

Turnaround Time and Scalability

Election nights, playoff runs, and awards shows spike workload far beyond regular traffic. Vendors answer by spinning up cloud engines and routing overflow to additional editors, keeping delivery windows intact. Detailed service-level agreements list target times for short clips, feature films, and marathon streams. When fast delivery meets guaranteed quality, producers sleep easier and viewers never see the scramble.

Types of Closed Captioning Services

Not all content is created equal, and neither are captions. Different formats, from live news broadcasts to scripted dramas or social media clips, demand tailored captioning workflows that balance speed, clarity, and cost.

Understanding these distinctions from the start helps production planners choose the right captioning method early on, reducing the risk of costly rework and delays. Whether it’s fast-turnaround live captions or high-precision subtitles for VOD, matching the caption path to the content ensures both quality and efficiency.

Live Captioning for News and Sports

Stenographers capture courtroom-grade shorthand, voice writers re-speak into speech engines, and AI editors polish the stream in split seconds. This trio feeds encoders that embed CEA-708 packets for broadcast or sidecar WebVTT files for OTT. Live captions carry adrenaline, technical jargon and crowd noise, so editors must know the subject to keep pace. The payoff is immediate access for viewers who cannot wait for a replay. Successful shows rehearse the workflow before every season opener.

Offline Captioning for Recorded Content

Drama series, documentaries, and marketing videos benefit from careful script alignment, style rules, and foreign-language mixes. Editors adjust read speed, add music tags, and ensure line breaks match breath pauses. Once approved, captions convert into SRT for YouTube, SCC for station playout, and TTML for international delivery. Meticulous offline work boosts binge-watch satisfaction and archival value. A well-captioned library becomes a searchable gold mine for future promos.

Captions for OTT and Streaming Platforms

Streaming apps accept multiple caption tracks that viewers toggle by language or style. Files need UTF-8 encoding, frame-accurate time stamps, and adaptive bitrate alignment. Services export WebVTT and IMSC 1.1 to cover smart TVs, browsers, and game consoles. Consistent metadata means search and recommendation engines can index scenes within each show. Proper OTT captions extend shelf life across devices without extra code.

Captions for Corporate and Educational Videos

Internal portals and LMS environments rely on captions for policy training, onboarding and lecture capture. Automatic indexing turns transcripts into clickable outlines so staff jump to key moments. Compliance with Section 508 assures equal learning access for employees with disabilities. Captions double as study aids, letting users skim before committing to a full watch. The corporate world values that efficiency.

Key Features to Look For

A tight feature list separates full-service partners from transcription brokers who stop at raw text files.

Speaker Identification and Sound Effects

Labels such as [MUSIC] or [APPLAUSE] add context that pure dialogue misses, while color codes differentiate panelists. Clear speaker tags keep roundtables intelligible even when voices overlap. These cues matter to deaf audiences, who rely on text alone to sense mood shifts. Sound effect tags also help editors auto-generate highlight reels. Detail pays off in user satisfaction.
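As a rough illustration of how sound effect tags can feed a highlight reel, the sketch below scans cue text for bracketed, upper-case labels and collects the timestamps where applause occurs. The cue structure (start time plus text) and the tag convention are assumptions made for the example.

```python
import re

# Matches bracketed upper-case labels such as [MUSIC] or [CROWD CHEERING].
TAG_PATTERN = re.compile(r"\[([A-Z][A-Z ]*)\]")


def find_sound_tags(cues):
    """cues: list of (start_seconds, text). Returns (start_seconds, tag) pairs."""
    hits = []
    for start, text in cues:
        for tag in TAG_PATTERN.findall(text):
            hits.append((start, tag))
    return hits


cues = [
    (12.0, "[MUSIC]"),
    (15.5, "HOST: Welcome back to the show."),
    (48.2, "[APPLAUSE] GUEST: Thank you!"),
]

# Candidate highlight moments: every cue where the audience applauds.
applause_moments = [t for t, tag in find_sound_tags(cues) if tag == "APPLAUSE"]
```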

Multi-Language Workflows

A central dashboard should manage translation, caption styling and QC so teams avoid duplicate uploads. Glossaries lock brand terms, while neural machine engines draft first passes for linguists to refine. That hybrid approach speeds delivery without losing nuance. Platforms that support parallel workflows let a single producer fire captions in five languages within one session. Scale becomes practical rather than theoretical.

Support for Different Caption Formats (CEA-608, 708, SRT, WebVTT)

From CEA-608 and 708 to SRT and WebVTT, each distribution channel requires a specific caption standard. Legacy cable and over-the-air playout expect embedded CEA-608/708 closed captions, ATSC 3.0 carries captions in the TTML-based IMSC1 format, and mobile and web players prefer lightweight sidecar files such as WebVTT. To meet these needs, batch transcoders generate every required file type with precision, ensuring zero frame drift and accurate synchronization. Each caption file is automatically matched to its asset ID. Editors verify that visual styles, fonts, and background opacity remain consistent across formats. Supporting multiple caption specifications is not optional. It is a safeguard against compliance issues and unexpected playback failures at launch.
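For a sense of what one transcoding step involves, here is a minimal sketch that converts a simple SRT file to WebVTT. It handles only the basics (the header, the cue counter, and the millisecond separator) and ignores positioning and styling, which a production transcoder would also need to map.

```python
import re

# SRT timing line: 00:00:01,000 --> 00:00:03,500
_SRT_TIMING = re.compile(
    r"(\d{2}:\d{2}:\d{2}),(\d{3}) --> (\d{2}:\d{2}:\d{2}),(\d{3})"
)


def srt_to_webvtt(srt_text: str) -> str:
    """Convert basic SRT text to WebVTT text (no styling or positioning)."""
    normalized = srt_text.replace("\r\n", "\n").strip()
    blocks = re.split(r"\n\s*\n", normalized)
    out = ["WEBVTT", ""]
    for block in blocks:
        lines = block.split("\n")
        # Drop the numeric cue counter that SRT puts on the first line.
        if lines and lines[0].strip().isdigit():
            lines = lines[1:]
        if not lines:
            continue
        # WebVTT uses a dot, not a comma, before the milliseconds.
        lines[0] = _SRT_TIMING.sub(r"\1.\2 --> \3.\4", lines[0])
        out.extend(lines)
        out.append("")
    return "\n".join(out)


sample_srt = (
    "1\n00:00:01,000 --> 00:00:03,500\nHello there.\n\n"
    "2\n00:00:04,000 --> 00:00:06,000\n[MUSIC]\n"
)
vtt = srt_to_webvtt(sample_srt)
```

Broadcast formats such as SCC or embedded CEA-708 need dedicated encoders; a text rewrite like this only covers the sidecar formats.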

Caption Placement and Customization

Crowded graphics and lower thirds push captions into awkward positions unless editors nudge them. Modern tools preview on 4K screens, tablets and phones, letting staff move lines away from score bugs or stock tickers. Brand-minded networks adjust font, size and edge color for on-air cohesion. Viewers feel the polish even if they cannot name it. Smart placement respects both design and readability.

API and Automation Capabilities

REST calls let news rundowns auto-order captions, query job status and retrieve files once QC passes. Webhooks alert control rooms if a script mismatch needs manual review. Automation saves minutes when breaking stories hit social feeds. The extra speed often spells the difference between first and second place in a crowded feed. Efficiency scales revenue.
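The order, poll, and retrieve loop described above might look something like the sketch below. Everything here is hypothetical: the state names ("qc_passed", "needs_review", "failed"), the response shape, and the `fetch_status` callable stand in for whatever a given vendor's API actually exposes. The HTTP call is injected so the logic can be exercised without a network.

```python
import time
from typing import Callable


def wait_for_captions(job_id: str,
                      fetch_status: Callable[[str], dict],
                      poll_seconds: float = 5.0,
                      timeout_seconds: float = 600.0,
                      sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll a caption job until QC passes, then return the final status.

    fetch_status is a hypothetical wrapper around the vendor's
    job-status endpoint; it must return a dict with a "state" key.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = fetch_status(job_id)
        state = status.get("state")
        if state == "qc_passed":
            return status
        if state in ("failed", "needs_review"):
            # Hand the job to a human instead of retrying blindly.
            raise RuntimeError(f"job {job_id} stopped in state {state!r}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} not ready after {timeout_seconds}s")
        sleep(poll_seconds)


# Usage with a stubbed status feed in place of a real HTTP client.
_responses = iter([
    {"state": "queued"},
    {"state": "processing"},
    {"state": "qc_passed", "files": ["show.vtt"]},
])
result = wait_for_captions("job-1", lambda _id: next(_responses),
                           sleep=lambda _s: None)
```

Where the vendor offers webhooks, a callback can replace the polling loop entirely; polling is shown only because it is the simpler pattern to reason about.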

Common Challenges Broadcasters Face

Even seasoned shops wrestle with tech hiccups and volume spikes that threaten caption quality.

Mismatched Audio-Caption Sync

Frame drift emerges when encoders buffer audio differently than text, creating lip-read chaos. Automated alignment tools insert stretch or squeeze commands that realign captions mid-show. Engineers test latency on every signal path before premiere week to prevent surprises. When sync holds, viewers stay immersed. Preventive QA beats reactive fixes.
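One simple way to model the stretch or squeeze correction is a linear retiming fitted from two reference points where the true speech time is known. The sketch below assumes drift grows at a constant rate across the show, which will not hold for every signal path; the function names are illustrative.

```python
def fit_retiming(anchor_a, anchor_b):
    """Fit new_time = scale * old_time + offset from two anchors.

    Each anchor is (caption_time_s, actual_speech_time_s).
    """
    (c1, s1), (c2, s2) = anchor_a, anchor_b
    if c1 == c2:
        raise ValueError("anchors must have different caption times")
    scale = (s2 - s1) / (c2 - c1)
    offset = s1 - scale * c1
    return scale, offset


def retime(cues, scale, offset):
    """cues: list of (start_s, end_s, text). Returns retimed cues."""
    return [
        (round(start * scale + offset, 3), round(end * scale + offset, 3), text)
        for start, end, text in cues
    ]


# Captions run 0.5 s early near the start and 1.5 s early 100 s later.
scale, offset = fit_retiming((10.0, 10.5), (110.0, 111.5))
fixed = retime([(10.0, 12.0, "Good evening.")], scale, offset)
```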

Caption Quality Control Failures

Typos, dropped words and wrong speaker tags drive complaints to station hotlines. QC teams run spell checks, profanity filters and human spot reviews on all premium content. A second set of eyes often catches domain-specific terms that ASR engines butcher. Consistency builds trust that rolls into higher ratings. Quality remains king.

Regulatory Penalties for Non-Compliance

Missed caption minutes or unreadable text can draw hefty fines and public shaming. Continuous airchecks from the MonitorIQ compliance suite capture every signal path and store proof for audits. When regulators knock, engineers serve logs instead of excuses. Saved time, saved money.

Handling High-Volume Content

Marathon sports days and election coverage balloon hours of footage. Cloud caption engines spin up extra GPUs while human editors focus on flagship segments. Queue management assigns domain experts to science panels and legal pros to court feeds. Load balancing keeps turnaround times predictable. Scale matters more than ever.

AI-Driven Captioning Workflows

Neural engines handle raw speech, while language models guess punctuation and speaker shifts. Editors spend their minutes on brand names, jargon and timing finesse. The blend cuts cost without harming polish. AI becomes a force multiplier rather than a job threat. Savings shift to additional language tracks.

Real-Time Caption Insertion With Broadcast Tools

Our plugin for major automation systems monitors event IDs, grabs source audio and inserts captions directly into ancillary data fields. No extra cabling, no last-second muxing errors. Control rooms view confirmation ticks on the multiviewer in real time. Peace of mind rides alongside the broadcast.

Built-In Compliance Validation

Each caption job passes automated checks that measure read speed and timing offset against FCC tables and run the text through profanity filters. Failing files reroute to editors automatically before they ever reach master control. Teams clear daily workloads with confidence that no silent errors have slipped through. Compliance shifts from a fear to a routine report.
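A read-speed check of this kind can be sketched in a few lines. The example below measures characters per second for each cue and flags any that exceed a limit. The 20 characters-per-second default is a placeholder for a house style rule, not a figure drawn from FCC rules or from any specific product.

```python
def reading_speed_cps(start_s: float, end_s: float, text: str) -> float:
    """Characters per second a viewer must read to finish the cue."""
    duration = end_s - start_s
    if duration <= 0:
        # A zero-length or inverted cue can never be read in time.
        return float("inf")
    return len(text.replace("\n", " ").strip()) / duration


def flag_fast_cues(cues, max_cps: float = 20.0):
    """cues: list of (start_s, end_s, text). Returns indexes that read too fast."""
    return [
        i for i, (start, end, text) in enumerate(cues)
        if reading_speed_cps(start, end, text) > max_cps
    ]


cues_to_check = [
    (0.0, 2.0, "Hello there."),
    (2.0, 2.5, "This line is far too long for half a second."),
    (3.0, 3.0, "Zero-length cue."),
]
needs_editor = flag_fast_cues(cues_to_check)
```

Flagged indexes could then drive the reroute to an editor queue before the file reaches master control.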

Integration With Metadata and Logging Systems

Trance exports JSON sidecars that match asset IDs in MAM, allowing producers to search shows by keyword minutes after ingest. MonitorIQ ingests the same metadata, so ad sales tallies caption exposure time for brand studies. One system feeds editorial, legal and revenue data alike. Silos disappear.
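To show the idea of a searchable sidecar, the sketch below builds a small JSON document keyed by asset ID and looks up the timestamps where a keyword is spoken. The schema (`asset_id`, `cues`, `start`, `end`, `text`) is invented for illustration and is not the actual Trance or MonitorIQ format.

```python
import json


def build_sidecar(asset_id: str, cues) -> str:
    """cues: list of (start_s, end_s, text). Returns a JSON string."""
    payload = {
        "asset_id": asset_id,  # hypothetical field names throughout
        "cues": [{"start": s, "end": e, "text": t} for s, e, t in cues],
    }
    return json.dumps(payload, ensure_ascii=False, indent=2)


def search_sidecar(sidecar_json: str, keyword: str):
    """Return the start times of every cue that mentions the keyword."""
    data = json.loads(sidecar_json)
    needle = keyword.lower()
    return [cue["start"] for cue in data["cues"]
            if needle in cue["text"].lower()]


sidecar = build_sidecar("ASSET-001", [
    (0.0, 2.0, "Election results are in."),
    (2.0, 4.0, "Weather is next."),
])
election_hits = search_sidecar(sidecar, "election")
```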

Evaluating Captioning Vendors: What to Ask

Due diligence keeps costly gaps from sneaking into a multi-year contract. A short list of pointed questions reveals whether a bidder can deliver.

Is the Service FCC and ADA Compliant?

Request audit logs and success stats rather than verbal promises. Evidence speaks louder than pitch decks.

What Accuracy Benchmarks Are Guaranteed?

Seek commitments of at least 98 percent, backed by service credits if the mark is missed. Clear stakes spur reliable performance.

Can It Handle My Platform Requirements?

Run a pilot across cable, satellite and OTT endpoints to prove multi-format fluency before signing. Trial beats trust.

What’s the Escalation Process for Errors?

Live events need a hotline answered by humans who can push patches within minutes. A slow ticket system spells disaster.

The Future of Closed Captioning in Broadcast

Technology advances pull captions into new realms of speed, depth and presence.

Role of Machine Learning and NLP

Language models now grasp idioms and dialects from the audio context, cutting edit passes and catching the tricky homophones that tripped older engines. Continuous training on domain footage raises accuracy further. The gap between ASR draft and final publish keeps shrinking. Editors shift from typing to curating. Quality rises as effort falls.

Captioning in Immersive Media (AR/VR)

Virtual worlds need captions that float within the headset yet avoid blocking action. Early standards propose spatial anchors that keep text near speakers. Audio-driven triggers fade captions when dialogue pauses, reducing clutter. The field evolves quickly but captions remain a must for inclusive design. Pioneers who bake them in early win user love.

Standardization Across Platforms

SMPTE and W3C committees push toward harmonized profiles that map WebVTT, IMSC and CEA packets, ending the format maze. Uniform specs slash conversion errors and vendor lock-in. Broadcasters cheer each step toward simplicity. Viewers notice only that captions work everywhere. Progress feels invisible but powerful.

Cloud-Native Captioning Infrastructure

Microservices scale, self-heal and deploy updates without downtime. Edge nodes run inference near cameras, pushing captions to viewers within two seconds. Bandwidth costs drop because only text, not video, travels back to the core. Cloud principles make captioning as elastic as the rest of modern broadcast. Agility becomes the default.

Conclusion: Aligning With a Partner for Long-Term Success

Closed captioning services do more than enhance accessibility: they shield you from costly fines, expand your audience, and boost engagement metrics. The most effective solutions combine AI-driven speed with human-level nuance, integrate seamlessly into your existing workflows, and provide proof of compliance on demand.

At Digital Nirvana, we deliver that winning blend today, and continuously evolve our offerings to meet tomorrow’s standards. We treat captions not as an afterthought, but as critical data assets that power discoverability, compliance, and viewer trust.

Choose a partner who understands the stakes, one who can scale with you, adapt to new formats, and ensure your content speaks clearly to every viewer. Because when your words reach every eye, your message, and your ROI, goes even further.

Digital Nirvana: Empowering Knowledge Through Technology

Digital Nirvana stands at the forefront of the digital age, offering cutting-edge knowledge management solutions and business process automation.

Key Highlights of Digital Nirvana:

- Knowledge Management Solutions: Tailored to enhance organizational efficiency and insight discovery.

- Business Process Automation: Streamline operations with our sophisticated automation tools.

- AI-Based Workflows: Leverage the power of AI to optimize content creation and data analysis.

- Machine Learning & NLP: Our algorithms improve workflows and processes through continuous learning.

- Global Reliability: Trusted worldwide for improving scale, ensuring compliance, and reducing costs.

Book a free demo to scale up your content moderation, metadata, and indexing strategy, and get a firsthand experience of Digital Nirvana’s services.

FAQs

Which caption file works best for streaming platforms?

WebVTT leads because browsers and smart TVs read it natively, while SRT remains a solid fallback for legacy players.

How quickly should live captions appear on screen?

Aim for latency under three seconds to keep dialogue and text aligned, ensuring viewers never feel the lag.

Do automated captions fully replace human editors?

Automation drafts the bulk, but human editors still correct names, jargon and tricky timing for broadcast-grade polish.

Will captions improve my video SEO?

Yes, search engines index transcript text, making pages rank for spoken keywords and increasing organic traffic.

Where can I read more on advanced caption metadata?

Check Digital Nirvana’s post on harnessing the power of autometadata in broadcasting for in-depth insights.