Metadata tagging software stops editors from hunting through endless footage and makes metadata editing a breeze. Top tools attach searchable labels during ingest, so producers drag-and-drop the right clip in seconds. Auto-generated tags track rights, talent, and sponsors for smooth reuse, while live tagging keeps sports and news feeds compliant on air. Most stations pair AI‑powered metadata managers with their asset libraries, trimming edit time by half and cutting archive costs by a third.

What Is Metadata Management and Why It Matters for Media Teams

Metadata management keeps every audio or video file labeled, searchable, and monetizable. Teams define taxonomies, required fields, and retention rules so every ingest follows the same playbook. Good metadata flows into your MAM and NLE without retyping, which cuts errors and improves handoffs. It also carries rights and clearance data that protects your brand on air and online. In this section you will learn how solid metadata lifts broadcast speed, protects rights, and drives new revenue.
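
As a rough illustration of required fields and taxonomy rules applied at ingest, here is a minimal Python sketch. The field names, the genre list, and the `validate_ingest` helper are assumptions made up for this example, not part of any specific MAM or tagging product.

```python
# Hypothetical house rules: field names and allowed genres are illustrative only.
REQUIRED_FIELDS = ("title", "rights_owner", "genre", "retention_days")
ALLOWED_GENRES = {"news", "sports", "entertainment"}


def validate_ingest(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = [
        f"missing field: {name}"
        for name in REQUIRED_FIELDS
        if record.get(name) in (None, "")
    ]
    genre = record.get("genre")
    if genre and genre not in ALLOWED_GENRES:
        problems.append(f"genre not in taxonomy: {genre}")
    days = record.get("retention_days")
    if days is not None and (not isinstance(days, int) or days <= 0):
        problems.append("retention_days must be a positive integer")
    return problems


# A clip with a misspelled genre and no retention rule fails both checks.
clip = {"title": "Council vote", "rights_owner": "Station", "genre": "newz"}
issues = validate_ingest(clip)
print(issues)
```

Running a check like this before media reaches the archive is one way to make every ingest follow the same playbook; a real deployment would load the rules from the team's published taxonomy rather than hard-code them.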

Understanding Metadata in Audio and Video Files

When editors pull a clip, the embedded data tells them exactly how and where they can use it. For a revenue-focused view on smart tagging, see The Role of Metadata in Content Monetization Strategies.

Role of Metadata Editors in Post‑Production

Post‑production teams rely on metadata editors, specialist apps that sit between ingest and non‑linear editors. These tools read raw footage, suggest tags, and let operators adjust values before media lands in Avid or Premiere. Tight API hooks then pass the enriched XML straight into the project bin. Because the editor controls tags at the panel, the cut list always stays synced with the archive.

Manual Tagging vs. Automated Metadata Tagging

The best workflows blend both: machines handle the grind, humans polish the details.

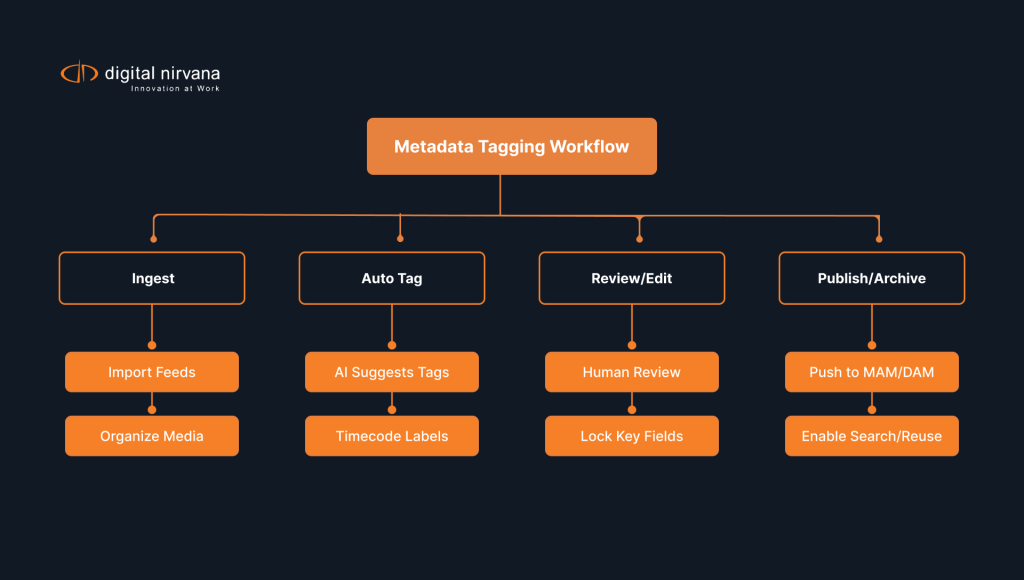

How Digital Nirvana Helps You Tackle Tagging Today

Our services at Digital Nirvana focus on measurable outcomes for news, sports, and entertainment teams. MetadataIQ automates scene detection, speaker identification, and transcript indexing inside Avid and other MAMs, so editors find the right moment fast. MonitorIQ adds compliance logging, as-run verification, and ratings correlation in one dashboard that legal and ad ops trust. TranceIQ generates captions and subtitles with frame-accurate timing and built-in quality control, which lifts accessibility and search. If you prefer a service model, our Media Enrichment Solutions team delivers turnkey transcription, captioning, and translation at scale with clear SLAs. Share your bottlenecks and we will map a pilot you can measure within weeks.

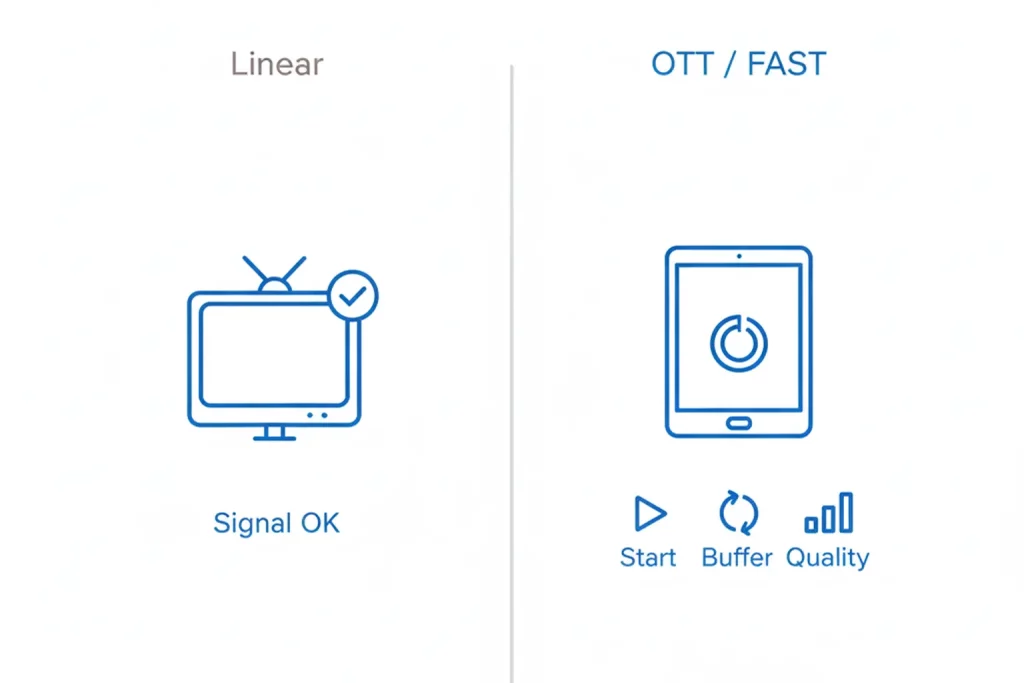

Why Broadcast Teams Rely on Metadata Tagging Software

Fast turns and strict regulations push broadcasters to trust tagging software. Breaking news and live sports leave no time for manual scrubs, so the system must surface clips by query in seconds. Smart labels also keep stations compliant by tying every asset to owners, windows, and territories. Live indexing unlocks rolling review, which lets teams clip and publish while the event unfolds. This section shows how smart labels cut hunt time, secure compliance, and index live feeds.

Speeding Up Search and Retrieval with Smart Tags

A metadata‑rich archive behaves like a search engine. Producers type “goal celebration second half” and the platform returns the exact match in seconds. Without tags, they shuttle through hours of footage. Speed gains go straight to air, letting stations break news first and push highlight reels to social before the competition.
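
To show why a tagged archive can answer a query like "goal celebration second half" without scrubbing footage, here is a small sketch of an inverted index in Python. The clip IDs and tags are invented, and production platforms use far richer search engines; this only illustrates the lookup idea.

```python
from collections import defaultdict

# Hypothetical clip tags; a real archive would pull these from the MAM.
CLIPS = {
    "clip_001": ["goal", "celebration", "second half"],
    "clip_002": ["goal", "first half"],
    "clip_003": ["press conference", "coach"],
}


def build_index(clips: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map every lowercase word in every tag to the clips that carry it."""
    index: dict[str, set[str]] = defaultdict(set)
    for clip_id, tags in clips.items():
        for tag in tags:
            for word in tag.lower().split():
                index[word].add(clip_id)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return clips that match every word in the query (AND search)."""
    words = query.lower().split()
    if not words:
        return []
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches)


index = build_index(CLIPS)
print(search(index, "goal celebration second half"))
```

Because the index is built once at tagging time, each query is a handful of set lookups rather than a pass through the footage.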

Compliance, Licensing, and Rights Management

Rights researchers also trace music cues and royalty splits with one query instead of reading paper cue sheets. For policy details, review the FCC closed captioning rules for television and the U.S. Copyright Office Title 17. Many teams also capture evidence and airchecks through MonitorIQ, which centralizes compliance logging and exports proof on demand. For a practical view of monitoring in modern control rooms, see our explainer on a digital broadcast monitoring system.

Indexing Live Feeds for Real‑Time Access

Newsrooms run rolling ingest, capturing multiple feeds at once. Tagging platforms index those feeds in real time, so journalists scrub back through the last hour without stopping record. Sports teams mark goals, fouls, and player stats as events happen, then push highlight packages to digital channels by halftime.

Automation Trends in Metadata Tagging Software

Automation reshapes tagging from task to byproduct. Modern models process audio, video, and graphics in parallel, which raises recall without slowing ingest. Cloud inference scales up during peak events, then scales down when the newsroom quiets. Edge tagging at cameras and encoders trims latency and keeps crews nimble in the field. This section explores AI engines, real-time tools, and remote production demands.

How AI and Machine Learning Power Auto Tagging

Continuous training improves accuracy, so the system learns regional accents and new sponsor logos without manual rules. For a deeper walkthrough, read our AI metadata tagging guide.

Real‑Time Tagging Tools for Live Broadcasts

Live tagging modules process feeds within seconds. Operators set hotkeys for common events such as goals, touchdowns, and applause, while AI listens for crowd spikes and commentator cues. This mixed approach produces highlight markers almost instantly, letting social teams clip and post on the fly. Some DAM systems also offer people tags, automated image tagging, and geotagging as bonus features. Together, these tagging practices boost the operational efficiency of your broadcast team.
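
One way to picture the mix of operator hotkeys and AI cues is a merge step that keeps the operator's marker when both sources flag the same moment. The sketch below is an assumption about how such a merge could work; the marker fields (`t`, `label`, `source`) and the two-second tolerance are invented for illustration.

```python
def merge_markers(manual: list[dict], auto: list[dict], tolerance: float = 2.0) -> list[dict]:
    """Combine markers, dropping AI markers that sit within `tolerance` seconds
    of a manual marker with the same label (the operator's entry wins)."""
    merged = list(manual)
    for a in auto:
        duplicate = any(
            m["label"] == a["label"] and abs(m["t"] - a["t"]) <= tolerance
            for m in manual
        )
        if not duplicate:
            merged.append(a)
    # Sort by time so downstream clipping tools see events in order.
    return sorted(merged, key=lambda marker: marker["t"])


manual = [{"t": 754.0, "label": "goal", "source": "hotkey"}]
auto = [
    {"t": 755.2, "label": "goal", "source": "ai"},       # same event, dropped
    {"t": 1310.5, "label": "applause", "source": "ai"},  # new event, kept
]
highlights = merge_markers(manual, auto)
print(highlights)
```

Giving the hotkey priority reflects the idea that humans polish what machines suggest; a team could just as easily keep both markers and flag the overlap for review.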

Future of Metadata Tagging in Remote Production Workflows

Cloud switching and distributed crews demand tagging that travels with the stream. Modern platforms attach JSON sidecar files so metadata moves from field encoders to central storage alongside the media. Edge AI devices will soon tag at the camera, cutting latency further and giving directors searchable rough cuts during the shoot.
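
For readers unfamiliar with sidecar files, the following Python sketch writes tags to a JSON file that sits next to the media file and shares its base name. The payload layout and the `write_sidecar` helper are assumptions for illustration; actual platforms define their own sidecar schemas.

```python
import json
import tempfile
from pathlib import Path


def write_sidecar(media_path: Path, tags: list[dict]) -> Path:
    """Write tags next to the media file as <name>.json and return that path."""
    sidecar = media_path.with_suffix(".json")
    payload = {"media": media_path.name, "tags": tags}
    sidecar.write_text(json.dumps(payload, indent=2), encoding="utf-8")
    return sidecar


# Demo in a temporary folder; the file name and tag are made up.
with tempfile.TemporaryDirectory() as tmp:
    media = Path(tmp) / "field_cam_01.mxf"
    path = write_sidecar(media, [{"start": "00:00:12:00", "label": "interview"}])
    loaded = json.loads(path.read_text(encoding="utf-8"))

print(loaded["tags"][0]["label"])
```

Keeping the tags in a separate file means the media itself is never rewritten, which is one reason sidecars suit field-to-studio transfers.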

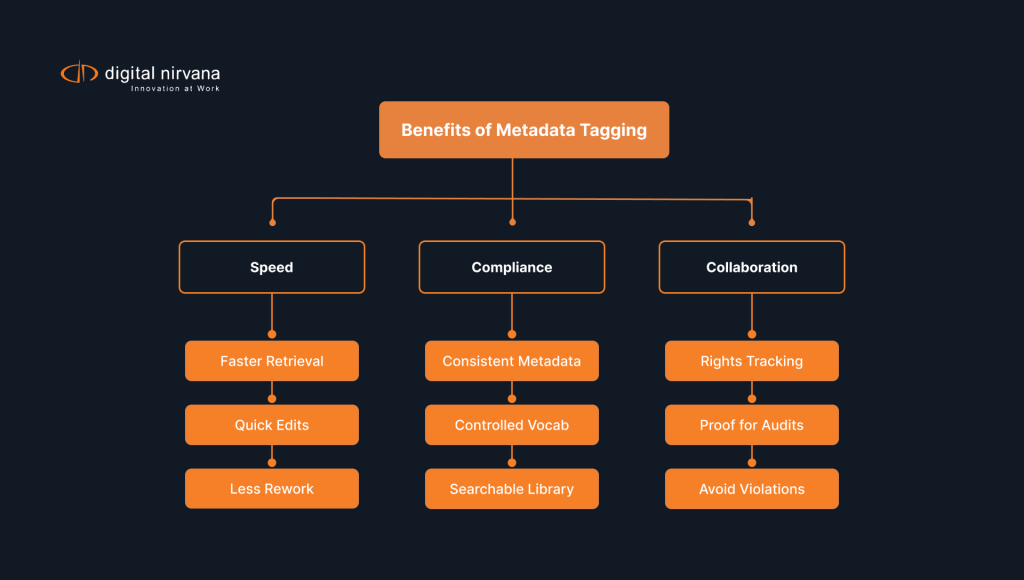

Key Benefits of Metadata Tagging for Media Operations

Metadata pays off in quick collaboration, clear handoffs, and fresh monetization. Teams find, verify, and package clips faster, which boosts output without adding headcount. Shared tags align engineering, editorial, graphics, and legal on the same facts. Measurable KPIs include time to first cut, archive retrieval time, and compliance holds avoided. This section breaks down the core operational wins.

Better Digital Asset Management Across Teams

A unified tag set links ingest, editing, graphics, and playout. Producers swap clips without rechecking details because the system carries names, rights, and timecodes end to end. That single source of truth prevents duplicate storage and keeps multiple departments on the same page.

Improved Collaboration and Editorial Efficiency

When editors can locate B‑roll in seconds, writers lock scripts faster and graphic artists slot lower thirds on time. Shared tagging taxonomies ensure everyone reads the same context, so fewer emails fly back and forth. Efficiency gains free staff hours for deeper storytelling.

Faster Archival and Content Repurposing

Archive managers no longer rely on memory. Keyword queries pull forgotten gems for anniversaries, documentaries, and social throwbacks. Automated tagging even surfaces patterns, such as every on‑air mention of a brand, making sponsorship recap reels a breeze.

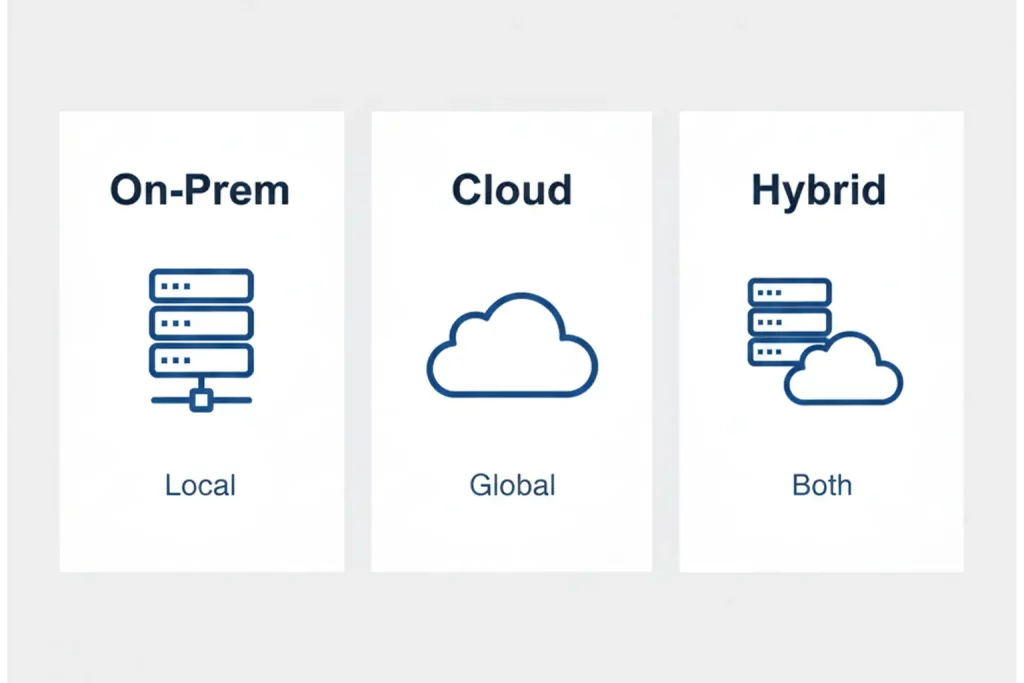

Different Types of Metadata Tagging Software Available Today

Not all tagging tools look the same. Broadcasters mix audio taggers, video-centric editors, and enterprise DAMs based on content mix and staff skills. Some teams buy cloud subscriptions, while others run appliances in their racks for low-latency control. Budget and volume drive the choice, but integration with your MAM matters most. This section outlines audio, video, and integrated platforms so you can match solutions to your mix.

Audio Tagging Tools for Broadcasters and Podcasters

Radio stations and podcasters favor audio‑only taggers that decode speech, music beds, and chapter markers. These tools split long shows into searchable segments, generate show notes, and feed podcast CMS fields without extra typing.

Video Tag Editors for Sports, News, and Entertainment

Sports networks need multi‑angle awareness. Video tag editors clip and label each camera track, merge statistics, and publish highlight packages within minutes. Newsrooms lean on facial recognition and location tags to sort interviews. Entertainment studios track wardrobe, sets, and continuity during shoots.

Integrated Metadata Management in DAM Platforms

Because tags live inside the DAM, teams gain global search across marketing, promos, and archival holdings. To see how strong metadata improves discovery, check out How Video Metadata Drives Discoverability and Engagement.

Simplifying Media Indexing with Digital Nirvana

Digital Nirvana offers MetadataIQ, a cloud service that brings AI-powered tagging to broadcast archives. Our connectors drop time-coded tags into Avid and other MAMs with minimal IT lift, so editors feel the speed on day one. Product specialists help you define a pilot, train models on your footage, and set a clean taxonomy. You can run the service alone or pair it with MonitorIQ and TranceIQ for a unified backbone. This section shows how the platform boosts speed, compliance, and editor happiness.

Segment Detection and Tag Suggestions with MetadataIQ

MetadataIQ listens to live or recorded feeds, spots scene changes, and proposes tags for events, speakers, and on‑screen graphics. Editors approve or tweak suggestions, locking accuracy while saving hours each day. The tool also writes captions and transcripts, which adds accessibility and search depth.

Exportable Logs and Searchable Archives for Editors

Every tag, caption, and transcript line lands in a searchable database. Editors export logs as CSV or XML, feed compliance systems, or drop timecode‑accurate markers straight into Adobe Premiere. Quick lookups replace slow rewind sessions, letting journalists focus on story craft. When you need captions or translations alongside search, consider TranceIQ to generate FCC-ready text assets that pair with your media.
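
A CSV log export of the kind described here can be sketched in a few lines of Python. The column names and sample rows are hypothetical and do not reflect the actual MetadataIQ export format.

```python
import csv
import io

# Hypothetical log rows; real exports would come from the tag database.
TAG_LOG = [
    {"timecode": "00:01:15:00", "type": "speaker", "value": "Anchor"},
    {"timecode": "00:02:40:12", "type": "caption", "value": "Good evening, and welcome."},
]


def export_csv(rows: list[dict]) -> str:
    """Serialize tag rows to CSV text with a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["timecode", "type", "value"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = export_csv(TAG_LOG)
print(csv_text)
```

Using the `csv` module instead of joining strings by hand ensures that values containing commas, such as caption text, are quoted correctly.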

Seamless Integration with Broadcast Workflows

That seamless link compresses production cycles and keeps crews light. Teams that need done-for-you help can also lean on our Closed Captioning Services when schedules spike.

Choosing the Right Metadata Tagging Software for Your Team

Finding a tagging platform starts with feature checks and ends with scale tests. Begin with your critical jobs to be done, then map them to features, integrations, and roles. Calculate total cost over three years, including storage, API calls, and training. Ask vendors for a proof of concept that uses your real content and measures time saved per task. This section walks through the top criteria.
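
The three-year cost calculation is simple arithmetic, sketched below in Python. Every input is a placeholder chosen for illustration, not vendor pricing; substitute the figures from your own quotes.

```python
def three_year_cost(monthly_subscription: float, monthly_storage: float,
                    monthly_api: float, one_time_training: float) -> float:
    """Sum recurring costs over 36 months and add one-time training."""
    months = 36
    recurring = (monthly_subscription + monthly_storage + monthly_api) * months
    return recurring + one_time_training


# Placeholder inputs for illustration only, not vendor pricing.
total = three_year_cost(monthly_subscription=400, monthly_storage=150,
                        monthly_api=50, one_time_training=3000)
print(total)
```

Comparing this total against the hours saved per task in the proof of concept gives a like-for-like basis for judging vendors.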

Core Features to Look for in a Metadata Editor

Real‑time indexing, bulk tag edit, rights management fields, facial and logo recognition, and open APIs for asset managers stand at the top of the checklist. A clear audit trail matters too, so legal researchers trust the data.

Manual Controls vs. Auto Tagging Capabilities

Automation does heavy lifting, but editors still need override ability. Look for interfaces that let users batch‑edit, lock key fields, and push corrections back to the AI model. Balance speed with editorial judgment.
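
The idea of locking key fields can be sketched as a merge that applies AI suggestions everywhere except where an editor has locked a value. This is a minimal Python illustration under assumed field names; it is not how any particular product implements overrides.

```python
def apply_suggestions(current: dict, suggested: dict, locked: set[str]) -> dict:
    """Overlay AI suggestions on current tags, leaving locked fields untouched."""
    merged = dict(current)
    for field, value in suggested.items():
        if field not in locked:
            merged[field] = value
    return merged


# The editor has verified the speaker name and locked it.
current = {"speaker": "J. Rivera", "location": "unknown"}
suggested = {"speaker": "J. Riviera", "location": "City Hall"}
result = apply_suggestions(current, suggested, locked={"speaker"})
print(result)
```

Here the misspelled speaker suggestion is ignored because the field is locked, while the unlocked location is updated.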

Scalability for Small and Large Broadcast Teams

A local TV station may tag two feeds, while a sports network might monitor forty cameras. Choose cloud architectures that spin up GPU power on demand and price by usage. That way your spend tracks growth without sudden license jumps.

Common Questions About Metadata Tagging and Management

Broadcasters ask how tagging affects day-to-day work and long-term strategy. Leaders also want to know whether small teams gain enough to justify the spend. Editors care about override control, while legal asks how systems preserve audit trails. These questions shape buying criteria and rollout plans for real newsrooms. This section provides clear answers.

What is metadata tagging used for in media?

Metadata tagging links footage to context, rights, and revenue. With clear tags, teams search faster, avoid legal traps, and repurpose content into new shows, clips, and ads.

Can I manually edit tags after auto tagging?

Yes. Leading platforms treat AI suggestions as drafts. Editors adjust values, lock fields, and retrain the engine, so accuracy improves over time.

How does metadata tagging improve compliance?

Tagging tracks license windows, sponsor obligations, and regional restrictions. Automated alerts block expired or restricted content from air, saving fines and brand damage.
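
A check of this kind might look like the following Python sketch, which blocks an asset when the air date falls outside its license window or the territory is not cleared. The asset fields and the `can_air` helper are hypothetical names for illustration.

```python
from datetime import date


def can_air(asset: dict, air_date: date, territory: str) -> bool:
    """True only if air_date is inside the license window and the territory is cleared."""
    in_window = asset["window_start"] <= air_date <= asset["window_end"]
    return in_window and territory in asset["territories"]


# Hypothetical rights record for one clip.
asset = {
    "window_start": date(2024, 1, 1),
    "window_end": date(2024, 6, 30),
    "territories": {"US", "CA"},
}

print(can_air(asset, date(2024, 3, 1), "US"))
print(can_air(asset, date(2024, 7, 1), "US"))
```

A playout or publishing system could call such a check before scheduling and raise an alert when it returns false.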

Is metadata tagging software useful for small teams?

Small crews gain even more because they have less time to waste. Auto tagging handles grunt work, letting a lean staff push polished stories to multiple channels.

What’s the difference between metadata tagging and management?

Tagging assigns labels to individual files. Management governs taxonomies, enforces standards, and maintains the database that keeps those tags consistent across departments.

At Digital Nirvana, We Help You Ship Faster With Less Risk

At Digital Nirvana, we help you move from scattered tags to a shared data backbone that your staff trusts. MetadataIQ writes consistent, reviewable tags that travel with your assets from ingest to archive. MonitorIQ gives compliance teams instant evidence with exportable airchecks and logs. Our Closed Captioning Services and TranceIQ keep you aligned with FCC quality and display rules while improving viewer experience. Our specialists guide rollouts, train staff, and tune models to your footage, so your team sees gains on day one.

In Summary: Key Takeaways and Action Steps

Metadata tagging moves media from chaos to control. Key points include:

- AI engines attach time‑coded tags during ingest, cutting search time by half.

- Rights fields inside tags shield stations from clearance violations.

- Real‑time tagging feeds live highlights to social before the final whistle.

- Integrated platforms like MetadataIQ sync tags with Avid and Dalet out of the box.

- Balanced workflows let machines suggest and humans refine for top accuracy.

Finish by mapping your current ingest flow, listing pain points, and matching them to features in a tagging platform. Then test with one pilot feed and measure the speed and compliance gains.

FAQs

How long does AI tagging take to process one hour of HD footage?

Most cloud engines finish in ten to fifteen minutes, far quicker than real-time playback.

Do I need special hardware to run metadata tagging?

Cloud services run in any browser. On‑prem appliances need a GPU but fit in a single rack unit.

Can tags follow files into social media platforms?

Yes. Sidecar JSON or XMP embeds push tags to YouTube, Facebook, and TikTok, boosting discovery.

How do I keep taxonomies consistent across departments?

Assign a metadata owner, publish a controlled vocabulary, and enforce it through required fields in your tagging tool.

What budget range should small stations expect?

Entry‑level cloud plans start near four hundred dollars a month and scale with hours processed.