Video indexing places rich, searchable data on every frame, shot and scene, turning a raw library into a living knowledge base. With precise tags for speech, objects and sentiment, editors find the right clip in seconds instead of hours, ad ops match brands to moments that sell, and regulators see proof of compliance in one click. The result is faster production, sharper monetization and lower risk across the entire media chain. This guide delivers the nuts and bolts, industry use cases and future outlook you need to treat video indexing as a core workflow, not an afterthought.

Understanding Video Indexing

Video indexing assigns time-coded metadata that makes clips searchable by content instead of file name. The method combines speech recognition, computer vision and language models to label dialogue, faces, logos and tone. Accurate indexes power automated highlight reels, ad insertion and rights checks, all while feeding analytics teams clean data.

What Is Video Indexing?

Video indexing converts unstructured pixels and audio waves into structured tags. Each tag links to a time stamp so that a database query returns both the clip and the exact moment of interest. This structure supports instant jump-to-scene functions and topic playlists. Modern platforms also enrich tags with confidence scores, giving users a quick read on reliability before publishing. Over time, indexed clips join knowledge graphs that surface hidden relationships between programs, presenters and brands.
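As a rough illustration of the idea above, a time-coded tag can be modeled as a small record and searched by label. This is a minimal sketch, not any vendor's schema: the `Tag` fields, the `search` helper and the sample clip IDs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    clip_id: str       # hypothetical asset identifier
    label: str         # e.g. a spoken keyword, face or logo name
    start_s: float     # start of the moment, in seconds
    end_s: float       # end of the moment, in seconds
    confidence: float  # model confidence between 0.0 and 1.0


def search(tags, label, min_confidence=0.0):
    """Return (clip_id, start_s, end_s) for every tag matching the label."""
    wanted = label.lower()
    hits = [
        (t.clip_id, t.start_s, t.end_s)
        for t in tags
        if t.label.lower() == wanted and t.confidence >= min_confidence
    ]
    return sorted(hits)


# Illustrative sample data only.
INDEX = [
    Tag("news_0412", "press conference", 12.0, 95.5, 0.93),
    Tag("news_0412", "mayor", 14.2, 40.0, 0.88),
    Tag("sports_0077", "goal", 1810.0, 1822.0, 0.97),
    Tag("sports_0077", "goal", 3605.5, 3616.0, 0.61),
]

# Keep only goals the model is reasonably sure about.
confident_goals = search(INDEX, "goal", min_confidence=0.8)
```

Each hit carries both the clip and the time range, which is what lets a player jump straight to the moment. The confidence filter shows how a score can gate what gets published.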

The Evolution of Indexing

Early systems relied on manual log sheets that catalogued tapes with broad themes. As digital storage took over, shot-list spreadsheets replaced notebooks but kept the human bottleneck. Automatic speech recognition introduced partial automation yet missed faces, text and tone. Today’s AI systems use multimodal models that learn context from millions of frames, producing far deeper descriptions. Open APIs let any app call those tags, making indexing a shared backbone rather than a closed silo.

Our Services at Digital Nirvana

Digital Nirvana offers comprehensive automated ad detection solutions that integrate seamlessly into broadcast workflows. Our services deliver robust monitoring and compliance tools that index every ad with frame-level accuracy. By combining AI-driven fingerprinting and metadata parsing, we capture a detailed view of when and where ads run. We also help ensure your operations adhere to any relevant regulations, whether local, federal, or international.

If you need a deeper dive or want to explore how our automated solutions could align with your business goals, visit our Digital Nirvana resource library for case studies and technical insights. Our agile cloud architecture scales with demand, so you can monitor multiple channels without sacrificing performance. Our engineering team is ready to help you integrate ad detection with your existing media asset management, traffic, and billing systems.

Why Video Indexing Matters

A well-planned indexing strategy pays dividends across discovery, compliance and revenue. Every department gains speed and insight once video becomes as searchable as text.

Faster Content Discovery

Producers waste hours hunting a single quote buried deep in a two-hour file. Indexing converts that hunt into a keyword search that returns the exact time code. Teams publish breaking news packages before competitors because the footage appears instantly. Viewers benefit too when interactive players let them jump straight to a play, keynote or lyric they heard about online.

Smarter Media Asset Management

Asset managers juggle petabytes of footage while deadlines move faster each year. Indexed metadata feeds Digital Nirvana’s MetadataIQ so that Avid, Adobe or cloud MAM systems surface only relevant assets, reducing clutter. Editors reuse archived clips instead of repurchasing them, cutting licensing spend while keeping creativity high.

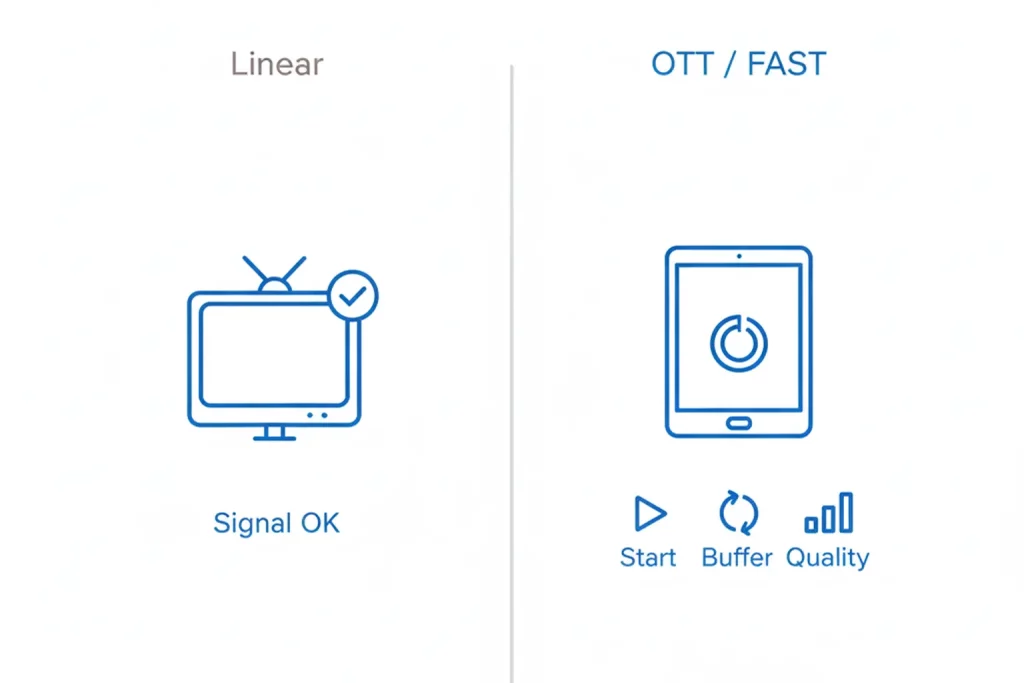

Compliance and Monitoring Support

Regulators demand evidence that captions, ads and content rules match local law. Indexing pairs with Digital Nirvana’s MonitorIQ to verify every airing in real time. Automated logs prove ad separation, loudness and caption presence, sparing operators costly fines and lengthy audits.

Core Capabilities in Modern Video Indexing

State-of-the-art platforms package several recognition engines into one workflow, each adding a crucial layer of context.

Speech-to-Text and Closed Captions

Automatic speech recognition transcribes spoken words, assigns speaker IDs and adds punctuation. The transcript becomes searchable text, fuels caption files and anchors other tags such as sentiment.
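To make the transcript-to-caption step concrete, here is a minimal sketch that turns timed transcript segments into SRT caption text. The segment layout `(start_s, end_s, speaker, text)` and the sample lines are assumptions for illustration. A real speech engine will have its own output format.

```python
def srt_timestamp(seconds):
    """Format seconds as the HH:MM:SS,mmm timestamp used in SRT files."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """Build SRT caption text from (start_s, end_s, speaker, text) tuples."""
    cues = []
    for number, (start, end, speaker, text) in enumerate(segments, start=1):
        cues.append(
            f"{number}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n"
            f"{speaker}: {text}\n"
        )
    # A blank line separates consecutive cues.
    return "\n".join(cues)


# Hypothetical transcript segments with speaker IDs.
SEGMENTS = [
    (0.0, 2.5, "Speaker 1", "Good evening."),
    (2.5, 6.0, "Speaker 2", "Thanks for having me."),
]

captions = to_srt(SEGMENTS)
```

Because every cue keeps its start and end time, the same segments can serve as both the caption file and the searchable transcript.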

Object and Face Recognition

Computer vision models track people, props and scenery with bounding boxes. Editors locate every cameo of a celebrity or trace product placement across an entire season.

Logo and Brand Detection

Brands guard trademarks, and advertisers crave exposure metrics. Logo classifiers detect on-screen marks, measure duration and flag unauthorized appearances.
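Measuring exposure comes down to adding up the time ranges in which a logo was detected without counting overlaps twice. The sketch below assumes detections arrive as `(start_s, end_s)` pairs per brand. The brand names and the `LICENSED` set are made up for the example.

```python
def exposure_seconds(intervals):
    """Total on-screen time for a list of (start_s, end_s) detections.

    Overlapping or touching intervals are merged first so that the same
    second is never counted twice.
    """
    total = 0.0
    current_start = current_end = None
    for start, end in sorted(intervals):
        if current_end is None or start > current_end:
            # A new, separate appearance begins; close the previous one.
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = start, end
        else:
            # Overlap: extend the current appearance.
            current_end = max(current_end, end)
    if current_end is not None:
        total += current_end - current_start
    return total


# Hypothetical detections per brand, in seconds.
DETECTIONS = {
    "AcmeCola": [(10.0, 14.0), (12.0, 18.0), (40.0, 45.0)],
    "Globex": [(100.0, 101.5)],
}
LICENSED = {"AcmeCola"}  # brands cleared to appear (assumed for the example)

report = {brand: exposure_seconds(spans) for brand, spans in DETECTIONS.items()}
unauthorized = sorted(set(DETECTIONS) - LICENSED)
```

The `report` dictionary gives per-brand duration, while `unauthorized` lists any detected brand that is not on the cleared list.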

Language and Sentiment Analysis

Natural language processing scores emotion, urgency and topic. Sports networks use sentiment spikes to build hype reels, while newsrooms flag negative tone for editorial review.
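One simple way to find a "sentiment spike" is to flag segments whose score sits well above the average for the program. The sketch below is only one possible approach and assumes each segment already has a score from some sentiment model. The scores and the `k` multiplier are illustrative.

```python
from statistics import mean, pstdev


def find_spikes(scored_segments, k=1.5):
    """Return (start_s, score) pairs scoring above mean + k * std deviation."""
    scores = [score for _, score in scored_segments]
    if len(scores) < 2:
        return []
    threshold = mean(scores) + k * pstdev(scores)
    return [(start, score) for start, score in scored_segments if score > threshold]


# Hypothetical (start_s, excitement_score) pairs, one per 30-second segment.
SEGMENTS = [
    (0.0, 0.1),
    (30.0, 0.2),
    (60.0, 0.15),
    (90.0, 0.9),
    (120.0, 0.2),
    (150.0, 0.1),
]

spikes = find_spikes(SEGMENTS)
```

Here only the segment starting at 90 seconds stands out, so it would be the candidate for a hype reel. The same test with the sign flipped could flag unusually negative segments for editorial review.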

How Video Indexing Works

Behind the simple search box sits a chain of processing stages that convert raw video into actionable data.

Ingest and Frame Analysis

The system ingests files or live streams, splitting them into frames and audio chunks. Vision models scan each frame for faces, text and motion while audio feeds the speech engine. Public cloud services such as Google Cloud Video Intelligence handle the heavy compute, delivering tags in near real time.

Temporal Metadata Generation

Detected entities gain exact start and end times, forming temporal ranges. The timeline view helps editors lift highlight clips with surgical accuracy and supports chapter markers for OTT players.
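Vision models typically report hits frame by frame, so something has to collapse those hits into start and end times. A minimal sketch of that step follows, assuming a constant frame rate. The `frames_to_ranges` helper and its `max_gap` parameter are hypothetical names.

```python
def frames_to_ranges(frame_numbers, fps, max_gap=1):
    """Collapse frame hits into (start_s, end_s) ranges.

    Frames whose numbers differ by at most `max_gap` are treated as one
    continuous appearance. Each range ends at the end of its last frame.
    """
    ranges = []
    run_start = previous = None
    for frame in sorted(set(frame_numbers)):
        if previous is None:
            run_start = frame
        elif frame - previous > max_gap:
            # Gap too large: close the current run and start a new one.
            ranges.append((run_start / fps, (previous + 1) / fps))
            run_start = frame
        previous = frame
    if previous is not None:
        ranges.append((run_start / fps, (previous + 1) / fps))
    return ranges


# A face seen in frames 50-74 and again in frames 100-124 of 25 fps video.
hits = list(range(50, 75)) + list(range(100, 125))
appearances = frames_to_ranges(hits, fps=25)
```

Raising `max_gap` tolerates a few missed detections inside one appearance, which is a common trade-off between cleaner timelines and precise boundaries.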

Keyword Mapping and Topic Clustering

Indexing engines group tags into higher-level topics like “press conference,” “goal” or “product launch.” Clusters guide recommendation engines and playlist assembly, boosting viewer engagement.
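The mapping half of this step can be sketched with a plain keyword table. Production engines are likely to use learned clustering instead, so treat this as a simplified stand-in. The `TOPIC_KEYWORDS` table and the `min_matches` threshold are assumptions.

```python
from collections import Counter

# Hypothetical keyword sets for each higher-level topic.
TOPIC_KEYWORDS = {
    "press conference": {"podium", "microphone", "reporter", "statement"},
    "goal": {"ball", "net", "goalkeeper", "crowd cheering"},
    "product launch": {"stage", "keynote", "product", "unveil"},
}


def assign_topic(tags, min_matches=2):
    """Pick the topic sharing the most keywords with the scene's tags."""
    tag_set = {t.lower() for t in tags}
    counts = Counter()
    for topic, keywords in TOPIC_KEYWORDS.items():
        counts[topic] = len(tag_set & keywords)
    topic, matches = counts.most_common(1)[0]
    # Too few matches means the scene stays unlabeled.
    return topic if matches >= min_matches else None


scene_topic = assign_topic(["Ball", "net", "crowd cheering", "stadium"])
```

Requiring at least two matching keywords keeps a single stray tag from labeling a whole scene.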

Applications Across Industries

The versatility of video indexing spans every sector that produces or relies on moving images.

Broadcast and Media

Networks accelerate clip turnaround, feed news tickers and manage rights across territories. Archived libraries become searchable gold mines for documentaries and retrospectives.

Sports and Highlights Editing

Automated detection of goals, touchdowns or home runs builds highlight reels seconds after the play. Coaches pull every defensive snap by player name to refine strategy.

Corporate Training and Knowledge Management

HR teams index onboarding sessions so employees can search policy explanations without rewatching entire videos. Executives mine town halls for action items.

E-learning and Academic Video Portals

Universities enrich lecture capture with slide OCR and term tagging, letting students jump to any formula or citation. Researchers analyze speaking trends across disciplines.

Legal and Law Enforcement Footage

Police body-cam and courtroom videos hold critical evidence. Indexing highlights suspect appearances and spoken keywords, speeding case preparation while maintaining chain of custody.

Government and Public Archives

Agencies digitize decades of programming and speeches, then index them for historical research and public transparency.

Video Indexing for Monetization

Beyond workflow gains, indexing creates new cash streams by matching content to audiences and advertisers.

Enabling Contextual Ad Insertion

Brand-safe tags tell ad servers which scenes fit a campaign’s mood and guidelines. Platforms deliver coffee ads during calm morning segments and action-game ads during energetic highlights.
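A rule-based version of that matching can be sketched in a few lines. Real ad servers use their own targeting formats, so the `wants` and `blocks` rule keys, the campaign names and the tag vocabulary here are all hypothetical.

```python
def eligible_campaigns(scene_tags, campaigns):
    """Return campaigns whose wanted tags are all present and none blocked."""
    scene = set(scene_tags)
    return sorted(
        name
        for name, rules in campaigns.items()
        if rules["wants"] <= scene and not (rules["blocks"] & scene)
    )


# Hypothetical campaign rules.
CAMPAIGNS = {
    "coffee": {"wants": {"morning", "calm"}, "blocks": {"violence"}},
    "action_game": {"wants": {"energetic"}, "blocks": {"tragedy"}},
}

morning_match = eligible_campaigns(["morning", "calm", "kitchen"], CAMPAIGNS)
highlight_match = eligible_campaigns(["energetic", "highlight"], CAMPAIGNS)
```

The `blocks` set is where brand safety lives: a scene can match a campaign's mood and still be rejected if it carries a tag the advertiser has excluded.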

Optimizing Content Recommendations

Streaming services feed indexed data into algorithms that suggest shows by theme, pace or topic. Better matches cut churn and raise viewing hours, lifting subscription lifetime value.

Licensing and Syndication Opportunities

Rights teams search indexes for reusable clips, offer them to third parties and price them by the second. Metadata confirms exclusivity windows and prevents accidental over-use.

For a deeper look at revenue models, see the post “The Role of Metadata in Content Monetization Strategies.”

Integrating Video Indexing into Workflows

Successful adoption hinges on seamless connectivity with existing systems.

API Access and Platform Integration

REST or GraphQL APIs push tags to newsroom rundowns, OTT CMS and data lakes. Developers script automated rough-cut builds directly from the index.
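As an illustration of the REST side, the sketch below builds (but does not send) a JSON request that pushes tags to a CMS. The endpoint URL, the payload shape and the bearer token are placeholders, not a documented API.

```python
import json
import urllib.request


def build_tag_request(endpoint, clip_id, tags, token):
    """Prepare a POST request carrying a clip's tags as JSON."""
    payload = json.dumps({"clip_id": clip_id, "tags": tags}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Hypothetical endpoint and payload.
request = build_tag_request(
    "https://cms.example.com/api/tags",
    "news_0412",
    [{"label": "press conference", "start_s": 12.0, "end_s": 95.5}],
    token="demo-token",
)

# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```

Keeping request construction separate from sending makes the integration easy to test without touching a live system.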

Compatibility with Media Asset Management Systems

Index files export as JSON, XML or MPEG-7, then attach to assets inside Avid MediaCentral, Adobe Premiere Pro or cloud MAMs. Editors open a clip and scroll a timeline of tags rather than raw footage.
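To show what such an export might look like, here is a minimal sketch that writes the same tags as JSON and as a simple XML document using only the Python standard library. The element and attribute names are invented for the example and do not follow the MPEG-7 schema or any MAM vendor's import format.

```python
import json
import xml.etree.ElementTree as ET


def to_json(clip_id, tags):
    """Serialize a clip's tags as a JSON document."""
    return json.dumps({"clip_id": clip_id, "tags": tags}, indent=2)


def to_xml(clip_id, tags):
    """Serialize a clip's tags as a simple (non-MPEG-7) XML document."""
    root = ET.Element("index", clip_id=clip_id)
    for tag in tags:
        ET.SubElement(
            root,
            "tag",
            label=tag["label"],
            start=str(tag["start_s"]),
            end=str(tag["end_s"]),
        )
    return ET.tostring(root, encoding="unicode")


# Hypothetical tags for one clip.
TAGS = [{"label": "goal", "start_s": 1810.0, "end_s": 1822.0}]

json_sidecar = to_json("sports_0077", TAGS)
xml_sidecar = to_xml("sports_0077", TAGS)
```

Either string could be saved next to the media file as a sidecar. Which format a given MAM accepts depends on that system's importer.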

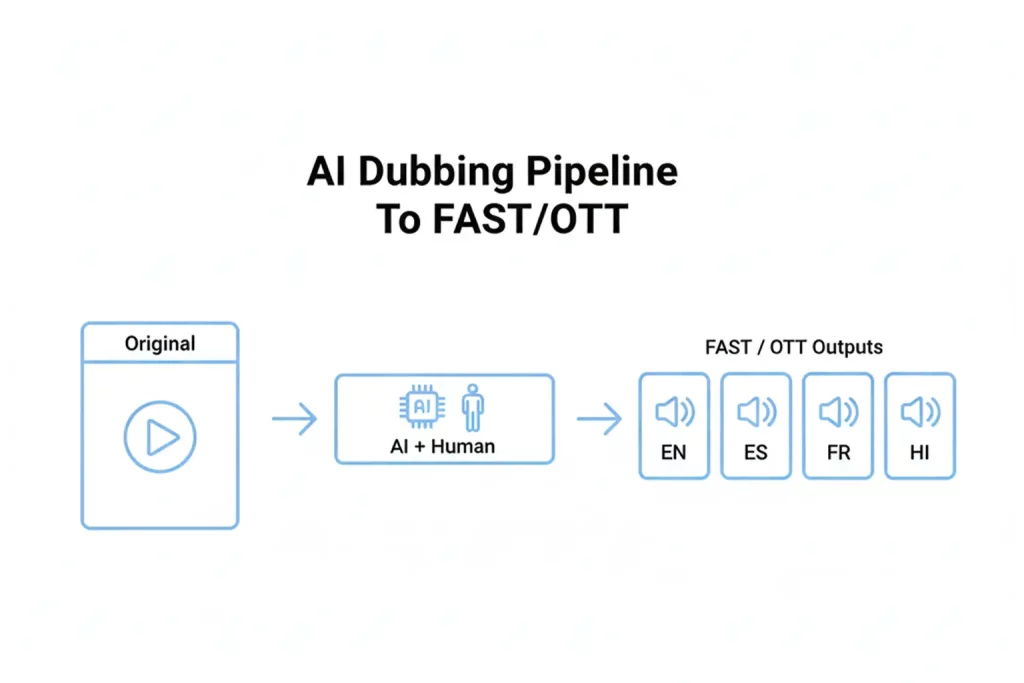

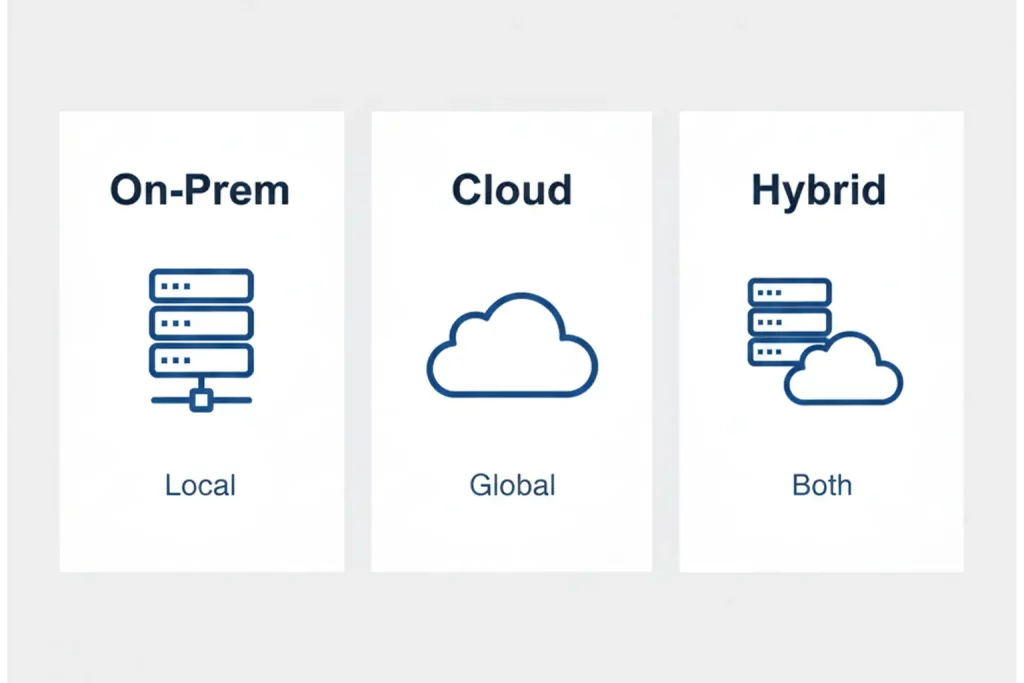

Cloud vs On-Prem Indexing Solutions

Cloud services scale elastically for peak events, while on-prem clusters satisfy strict data residency or latency rules. Hybrid models stage sensitive clips locally and burst heavy compute to the cloud when demand spikes.

Digital Nirvana Advantage

Our team at Digital Nirvana fuses AI and human expertise inside MetadataIQ to automate speech, face and logo tagging with frame-accurate timecodes. Editors view tags directly in Avid panels, drag clips to a sequence and publish without file swaps. We handle monitoring through MonitorIQ, so every indexed tag remains auditable after air. Clients report turnaround times cut in half and searchable archives that pay for themselves within a year. Explore the full workflow at our media and broadcasting AI page to see how indexing can reshape your operations.

Challenges and Considerations

No system is perfect. Planning for common hurdles avoids rework and protects trust.

Accuracy of Recognition Models

False positives undermine confidence. Teams must train models on domain footage and run regular gold-set evaluations. External articles such as the IBM deep-learning overview explain tuning techniques that raise precision without ballooning cost.
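A gold-set evaluation boils down to comparing what the model tagged against what human reviewers confirmed. The sketch below computes precision and recall over `(clip_id, label)` pairs. The sample data is invented for the example.

```python
def precision_recall(predicted, gold):
    """Compare predicted tags with a human-verified gold set."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall


# Hypothetical human-verified tags.
GOLD = {
    ("clip1", "mayor"), ("clip1", "podium"), ("clip2", "goal"),
    ("clip2", "referee"), ("clip3", "logo:AcmeCola"), ("clip3", "anchor"),
}
# Hypothetical model output: three correct tags and one false positive.
PREDICTED = {
    ("clip1", "mayor"), ("clip2", "goal"), ("clip3", "anchor"),
    ("clip3", "logo:Globex"),
}

precision, recall = precision_recall(PREDICTED, GOLD)
```

Precision drops with every false positive, while recall drops with every missed tag, so tracking both over time shows whether retraining is actually helping.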

Managing Large Video Repositories

Petabyte libraries can overwhelm storage and query performance. Scalable object storage with lifecycle tiering archives older indexes while keeping hot clips on faster media. Sharded search clusters balance load during peak queries.

Privacy and Ethical Use

Face recognition triggers legal safeguards. Policies should restrict biometric queries to approved users and log all access. Anonymization tools blur minors or bystanders to meet global privacy rules.
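The policy described above, approved users only and every access logged, can be sketched as a thin wrapper around the face search. The role names, the in-memory `AUDIT_LOG` and the index layout are assumptions. A real deployment would rely on its identity provider and tamper-resistant logging.

```python
from datetime import datetime, timezone

APPROVED_USERS = {"legal_reviewer", "security_lead"}  # hypothetical roles
AUDIT_LOG = []  # in-memory stand-in for a durable audit trail


def face_search(user, person, index):
    """Run a biometric query only for approved users, logging every attempt."""
    allowed = user in APPROVED_USERS
    AUDIT_LOG.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "query": person,
            "allowed": allowed,
        }
    )
    if not allowed:
        raise PermissionError(f"{user} is not approved for biometric queries")
    return [hit for hit in index if hit["person"] == person]


# Hypothetical face index entries.
FACE_INDEX = [
    {"person": "Jane Doe", "clip_id": "bodycam_0031", "start_s": 42.0},
    {"person": "John Roe", "clip_id": "bodycam_0031", "start_s": 77.5},
]

results = face_search("legal_reviewer", "Jane Doe", FACE_INDEX)
```

Writing the log entry before the permission check means denied attempts are recorded too, which is usually what an auditor wants to see.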

Future of Video Indexing

Innovation continues to push indexing closer to real time and deeper into context.

Real-Time Indexing and Live Events

Edge inference chips run lightweight models on site, attaching tags to SDI streams before they hit the encoder. Fans receive real-time highlight compilations during halftime instead of postgame.

Multilingual and Cross-Cultural Indexing

Language models now support low-resource tongues, letting regional outlets caption and index local dialects. Cross-lingual tags map identical concepts across cultures, broadening global reach.

AI Improvements in Contextual Understanding

Next-gen models link visual cues to narrative arcs, labelling “plot twist” or “comic relief.” This contextual depth powers richer recommendations and smarter brand safety decisions.

Conclusion: Why Smarter Indexing Drives Smarter Media Workflows

Video indexing gives every frame a purpose, letting teams create, monetize and comply with confidence. Adopt a platform that merges speech, vision and language AI, integrate it with your MAM and set clear accuracy goals. Train staff on metadata best practices and schedule regular model reviews to keep results sharp. When your footage becomes fully searchable, stories move faster, viewers stay longer and revenue follows.

Digital Nirvana: Empowering Knowledge Through Technology

Digital Nirvana stands at the forefront of the digital age, offering cutting-edge knowledge management solutions and business process automation.

Key Highlights of Digital Nirvana:

- Knowledge Management Solutions: Tailored to enhance organizational efficiency and insight discovery.

- Business Process Automation: Streamline operations with our sophisticated automation tools.

- AI-Based Workflows: Leverage the power of AI to optimize content creation and data analysis.

- Machine Learning & NLP: Our algorithms improve workflows and processes through continuous learning.

- Global Reliability: Trusted worldwide for improving scale, ensuring compliance, and reducing costs.

Book a free demo to scale up your content moderation, metadata, and indexing strategy, and get a firsthand experience of Digital Nirvana’s services.

FAQs

How accurate is automatic video indexing today?

Leading models can exceed 90 percent accuracy on clear speech and common objects, and performance typically improves with domain-specific training.

Does indexing work on live streams?

Yes. Cloud and edge solutions tag scenes within seconds, enabling near-live search and clip generation.

Can I run indexing on-premises for security?

Yes. On-prem clusters satisfy strict data residency or latency rules, and hybrid deployments keep sensitive content local while offloading heavy compute to public clouds during busy periods.

Which file formats hold the metadata?

JSON and XML remain standard. Some systems store tags in sidecar files alongside MXF media or use MPEG-7 for frame-level detail.

Where can I learn more about automated metadata and compliance?

See Digital Nirvana’s post on harnessing autometadata in broadcasting for a deeper technical dive.