Introduction

Most media teams are sitting on a growing mountain of raw footage. Games, live events, news feeds, interviews, explainer videos, webinars, internal town halls, and more all end up in storage. The problem is not collecting content; it is finding the exact moment you need when a deadline or opportunity hits.

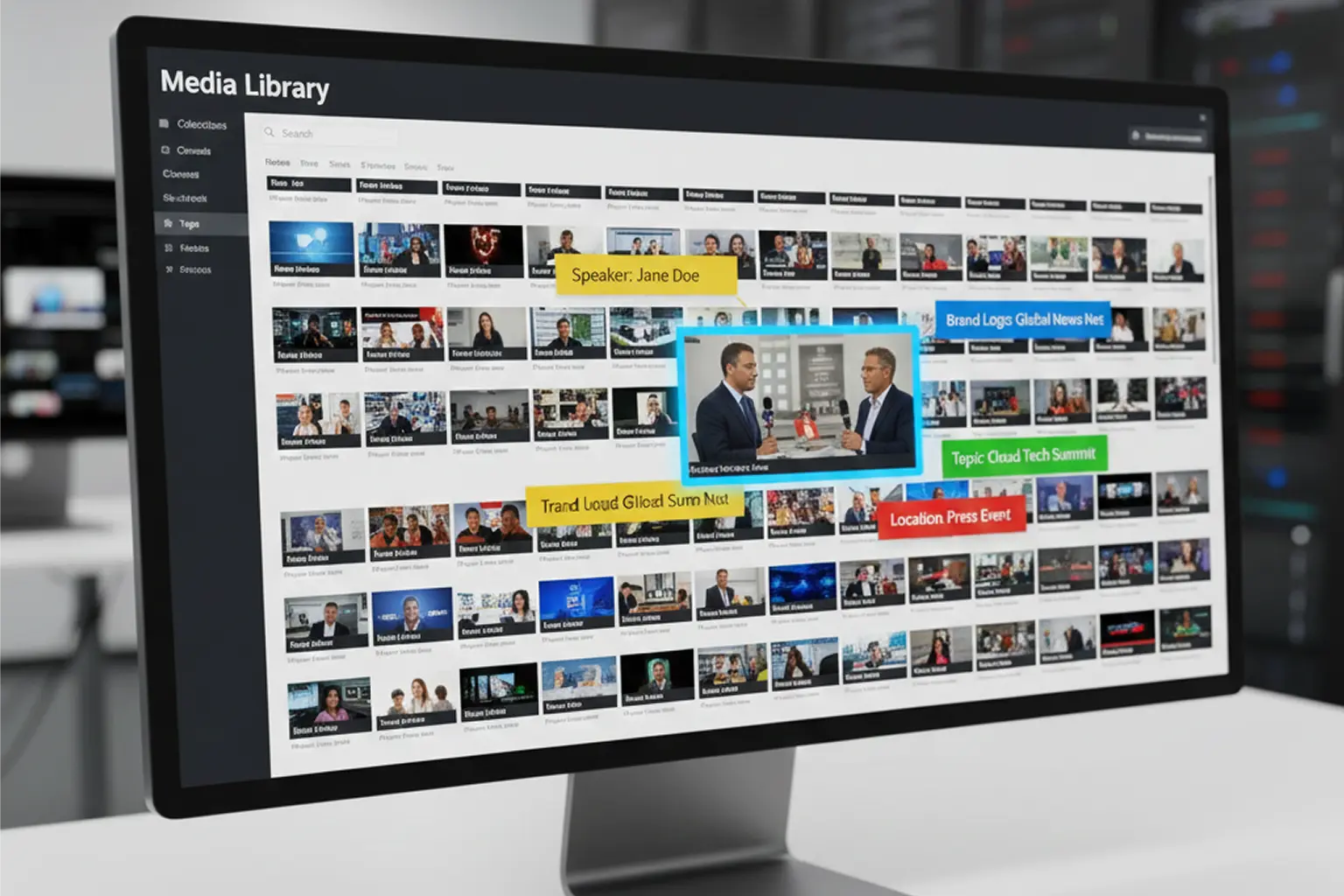

That is where metatagging comes in. When every clip, scene, and quote is tagged with rich metadata, your digital asset library becomes a searchable source of truth. Producers, editors, marketers, and archive teams can discover and reuse content in minutes instead of hours.

But doing this by hand no longer scales. The volume and velocity of modern media demand a different approach. Auto metadata tagging, powered by AI, can watch, listen to, and understand footage at a speed no human team can match, then generate time-coded tags that plug straight into your existing PAM, MAM, or DAM systems.

In this article, we will unpack how metatagging digital assets at scale really works, where auto metadata tagging fits in, and how Digital Nirvana’s MetadataIQ helps teams transform raw footage into searchable, compliant, and monetizable gold without disrupting day-to-day production.

What metatagging digital assets really means today

Metatagging digital assets means attaching structured information to every piece of content so people and systems can understand what it is, where it belongs, and how to use it. In a digital asset management context, that includes:

- Descriptive metadata: who is speaking, what topics are discussed, which brands or teams appear

- Technical metadata: format, resolution, frame rate, audio channels

- Administrative metadata: rights, licenses, usage windows, territories

- Time-based metadata: scene changes, chapters, goals, quotes, on-screen text, key shots

For raw video footage, time-based metadata is vital. Instead of a single description for a 60-minute file, you want to know what happens at 03:42, 18:07, or 42:19. That is the difference between “we have that somewhere” and “here is the exact clip you need.”
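As a minimal sketch of the four metadata types above, the record below keeps descriptive, technical, and administrative fields next to a list of time-coded markers. The class names, field names, and sample values are illustrative assumptions, not the schema of any particular DAM product.

```python
from dataclasses import dataclass, field


def to_seconds(timecode: str) -> int:
    """Convert "MM:SS" or "HH:MM:SS" into whole seconds."""
    seconds = 0
    for part in timecode.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


@dataclass
class Marker:
    start: str   # timecode such as "03:42"
    end: str
    kind: str    # hypothetical categories: "goal", "quote", "scene"
    label: str


@dataclass
class Asset:
    title: str
    descriptive: dict = field(default_factory=dict)
    technical: dict = field(default_factory=dict)
    administrative: dict = field(default_factory=dict)
    markers: list = field(default_factory=list)

    def at(self, timecode: str) -> list:
        """Return the markers whose time range covers the given timecode."""
        t = to_seconds(timecode)
        return [m for m in self.markers
                if to_seconds(m.start) <= t <= to_seconds(m.end)]


# Invented sample data for illustration only.
match = Asset(
    title="Cup final, full match",
    descriptive={"teams": ["Home", "Away"]},
    technical={"resolution": "1920x1080", "frame_rate": 50},
    administrative={"territories": ["US"]},
    markers=[
        Marker("03:42", "04:10", "goal", "Opening goal"),
        Marker("18:07", "18:30", "quote", "Coach touchline interview"),
        Marker("42:19", "43:00", "scene", "Half-time whistle"),
    ],
)

labels_at_0350 = [m.label for m in match.at("03:50")]
```

Asking the asset what happens at a given timecode, rather than reading one file-level description, is what turns a 60-minute file into a set of addressable moments.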

A strong metatagging practice makes your digital assets:

- Easily searchable and browsable

- Safer to use from a rights and compliance standpoint

- Easier to package into highlights, compilations, and campaigns

- More valuable over time as part of a well-indexed archive

Why manual tagging breaks at scale

Metatagging usually starts small: a few tags, a basic taxonomy, a small team adding metadata as they upload files. It tends to break when three things happen at once:

- Volume explodes

  - Always-on news, sports feeds, remote shoots, and user-generated content drive hours of new footage every day.

  - Even diligent teams cannot watch and log everything.

- Teams and use cases multiply

  - Broadcast, social, marketing, OTT, corporate communications, and training all tap into the same libraries.

  - Each group uses slightly different language and priorities.

- Compliance and governance grow more complex

  - Political content, sponsorship disclosures, brand safety rules, and region-specific regulations need to be tracked at the frame level.

The result is familiar:

- Inconsistent tags that reflect individual preference rather than shared standards

- Gaps where high-value footage is stored but practically invisible

- Editors and producers spending more time searching than creating

- Compliance teams relying on spreadsheets and manual notes to track risk

Industry best-practice guidance on DAM and media management repeatedly emphasizes that manual-only metadata approaches are not sustainable for large libraries or video-heavy workflows.

To get ahead of the problem, organizations are shifting from “humans tagging everything” to “AI does the heavy lifting, humans refine and govern.”

Auto metadata tagging: how AI reads your footage

Auto metadata tagging uses AI and machine learning to analyze your digital assets and automatically generate metadata. For video and audio, that typically includes:

- Speech-to-text: turning spoken dialogue into time-coded transcripts

- Speaker detection: identifying who is talking, and when speakers change

- Computer vision: detecting faces, logos, objects, scenes, and on-screen text

- Audio cues: crowd noise, music, silence, applause

- Topic extraction: identifying entities, keywords, and themes across the timeline

- Rule-based tagging: applying tags for sensitive categories such as politics, profanity, or brand mentions based on defined policies

Modern auto metadata tagging tools can process both live feeds and archives, generating time-coded markers and tags far faster than human loggers. Those tags can then be written into your media asset management systems, where editors and producers interact with them directly.
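The rule-based tagging step in the list above can be illustrated with a small sketch that scans time-coded transcript segments for policy terms and emits time-coded tags. The policy, its terms, and the sample transcript are all hypothetical. A production system would typically combine rules like these with model output rather than rely on keyword matching alone.

```python
import re
from dataclasses import dataclass


@dataclass
class Segment:
    start: float   # seconds from the start of the file
    end: float
    text: str      # speech-to-text output for this span


# Hypothetical policy: tag name -> terms that trigger it.
POLICY = {
    "politics": ["election", "senator", "ballot"],
    "brand_mention": ["acme cola"],
}


def tag_segments(segments, policy):
    """Return one (start, end, tag) tuple per segment and matching policy tag."""
    compiled = {
        tag: re.compile(
            r"\b(?:" + "|".join(re.escape(term) for term in terms) + r")\b",
            re.IGNORECASE,
        )
        for tag, terms in policy.items()
    }
    hits = []
    for seg in segments:
        for tag, pattern in compiled.items():
            if pattern.search(seg.text):
                hits.append((seg.start, seg.end, tag))
    return hits


# Invented transcript for illustration only.
transcript = [
    Segment(0.0, 6.5, "Welcome back to the evening bulletin."),
    Segment(6.5, 14.0, "The senator conceded the election late last night."),
    Segment(14.0, 20.0, "This segment is sponsored by Acme Cola."),
]

hits = tag_segments(transcript, POLICY)
```

Because each hit carries the start and end of its segment, the resulting tags can be stored as markers rather than as file-level keywords.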

The goal is not to eliminate people; it is to:

- Give creative teams a rich set of “first draft” metadata to work with

- Standardize tagging across shows, channels, and regions

- Ensure no feed or file enters your system without being fully tagged

From raw footage to searchable gold: the end-to-end flow

At scale, metatagging digital assets becomes a repeatable pipeline. A modern, AI-enabled flow typically looks like this:

- Ingest

  - Raw footage arrives from live feeds, cameras, remote uploads, or file deliveries.

  - Basic technical and source metadata are automatically captured during ingest.

- Auto metadata tagging

  - AI engines generate speech-to-text, detect faces, logos, and on-screen text, and identify topics and scenes.

  - Time-coded markers are created for key events, quotes, and segments.

- Taxonomy alignment

  - Tags are mapped to your controlled vocabulary and business rules so that, for example, “UOP” and “University of Portland” are treated as the same entity.

- Editorial review and enrichment

  - Producers or metadata specialists review high-value content, refine critical tags, and add context where AI needs guidance.

  - Compliance teams validate sensitive categories and apply final approvals.

- Distribution into PAM/MAM/DAM

  - Enriched metadata is written back into the systems your teams use every day.

  - Editors see markers on their timelines, archivists see structured fields in catalogs, and marketers see filters in their asset portals.

- Search, reuse, and monetization

  - Users can search by person, topic, event, brand, or quote, and jump straight to the right moment.

  - Archives become a source of clips, compilations, and licensed packages rather than cold storage.

The key is that this pipeline runs continuously. Every new piece of content goes through the same automated metatagging process, so your library stays usable as it grows.
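To make the taxonomy alignment and search steps of this pipeline concrete, here is a minimal sketch, assuming raw tags arrive as (asset, start time, tag) tuples. The alias table, file names, and function names are hypothetical. The example reuses the article's “UOP” alias to show how variant spellings collapse into one searchable entity.

```python
from collections import defaultdict

# Hypothetical controlled vocabulary: lowercase alias -> canonical entity.
ALIASES = {
    "uop": "University of Portland",
    "university of portland": "University of Portland",
}


def canonical(raw_tag: str) -> str:
    """Normalize case and whitespace, then map known aliases to one entity."""
    key = " ".join(raw_tag.lower().split())
    return ALIASES.get(key, raw_tag.strip())


def build_index(raw_markers):
    """Map each canonical tag to a sorted list of (asset_id, start_seconds)."""
    index = defaultdict(list)
    for asset_id, start_seconds, raw_tag in raw_markers:
        index[canonical(raw_tag)].append((asset_id, start_seconds))
    for hits in index.values():
        hits.sort()
    return dict(index)


# Invented markers, as an AI tagging step might emit them.
raw_markers = [
    ("game_0412.mxf", 1087, "UOP"),
    ("presser_0413.mxf", 95, "University of  Portland"),
    ("game_0412.mxf", 222, "uop"),
]

index = build_index(raw_markers)
moments = index["University of Portland"]
```

A search for the canonical entity now returns every moment across both files, regardless of how the tag was originally written.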

Designing a metadata model that actually works for users

Technology is only half of metatagging at scale. The other half is designing a metadata model that reflects how your teams think and search.

When you define your metadata schema and taxonomy, consider:

- Core entities

  - People: anchors, athletes, spokespeople, executives

  - Organizations: teams, brands, partners, institutions

  - Events: matches, conferences, product launches, press briefings

- Content structure

  - Program, episode, segment, and clip relationships

  - Time-based structures such as acts, periods, quarters, or chapters

- Business and compliance needs

  - Sponsorship and product placement

  - Political or issue-based advertising

  - Regional restrictions and license windows

A well-structured taxonomy ensures:

- Consistency across teams, channels, and years

- Easier adoption of auto metadata tagging, because AI outputs map to a clear set of labels

- Better analytics and reporting on how content is used

This is an area where expert services and domain-specific tools make a concrete difference. Instead of building everything from scratch, you can start from proven models and customize them to your editorial voice and regulatory environment.
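One way to see why business and compliance fields belong in the schema is a license-window check. The sketch below assumes each asset carries a list of windows with a territory and an inclusive date range. The class, function, territories, and dates are illustrative, not drawn from any specific rights system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LicenseWindow:
    territory: str   # for example an ISO country code
    start: date
    end: date        # inclusive


def can_use(windows, territory: str, on: date) -> bool:
    """True if any window covers the territory on the given date."""
    return any(
        w.territory == territory and w.start <= on <= w.end
        for w in windows
    )


# Invented rights data for illustration only.
windows = [
    LicenseWindow("US", date(2024, 1, 1), date(2024, 12, 31)),
    LicenseWindow("GB", date(2024, 6, 1), date(2024, 8, 31)),
]

us_in_march = can_use(windows, "US", date(2024, 3, 15))
gb_in_march = can_use(windows, "GB", date(2024, 3, 15))
```

Storing windows as structured fields, rather than free-text notes, is what lets a search or export step apply a check like this automatically.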

Balancing AI automation with human editorial review

Auto metadata tagging is powerful, but it is not infallible. The most effective organizations combine AI with targeted human oversight.

A balanced model typically looks like this:

- Let AI handle:

  - Baseline tagging for all incoming content

  - Obvious entities such as logos, recurring faces, and common phrases

  - Routine compliance categories where rules are precise and repeatable

- Focus human effort on:

  - Flagship shows, significant events, and high-value campaigns

  - Nuanced topics where context matters more than literal words

  - Quality assurance for compliance-sensitive tags and contentious content

With the right workflow design, humans validate and enrich where it matters most, rather than trying to tag everything from scratch. Governance dashboards and metadata quality scores can help you monitor where AI is performing well and where additional rules or training data are needed.
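The split between automated and human work can be sketched as a simple routing rule. This assumes each AI-generated tag carries a confidence score between 0 and 1 and a flag for compliance-sensitive categories. The thresholds, names, and sample tags are hypothetical and would need tuning against your own content.

```python
from dataclasses import dataclass


@dataclass
class Tag:
    label: str
    confidence: float        # 0.0 to 1.0, as reported by a hypothetical AI engine
    sensitive: bool = False  # compliance-sensitive categories always get review


def route(tags, auto_accept=0.90, discard_below=0.30):
    """Split tags into auto-accepted, human-review, and discarded lists."""
    accepted, review, discarded = [], [], []
    for tag in tags:
        if tag.sensitive:
            review.append(tag)       # humans validate sensitive tags regardless of score
        elif tag.confidence < discard_below:
            discarded.append(tag)    # too unreliable to be worth a reviewer's time
        elif tag.confidence < auto_accept:
            review.append(tag)       # plausible but uncertain
        else:
            accepted.append(tag)
    return accepted, review, discarded


# Invented tags for illustration only.
tags = [
    Tag("sponsor logo", 0.97),
    Tag("political content", 0.95, sensitive=True),
    Tag("crowd applause", 0.62),
    Tag("unknown face", 0.12),
]

accepted, review, discarded = route(tags)
```

Raising or lowering `auto_accept` is the lever that trades reviewer workload against the risk of an incorrect tag reaching the catalog.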

How MetadataIQ helps you metatag at scale

Digital Nirvana’s MetadataIQ is explicitly designed to bring auto metadata tagging into real-world media workflows while respecting the tools and processes you already have.

Key capabilities that support metatagging digital assets at scale include:

- Automated, time-coded tagging

  - AI-driven speech-to-text tuned for news, sports, and entertainment

  - Detection of faces, logos, on-screen text, and other visual elements

  - Time-indexed markers dropped directly into Avid and other production environments so that editors can search and jump to relevant moments instantly

- Compliance-aware metadata

  - Tagging for political content, brand mentions, sensitive scenes, and disclosures

  - Rules engines aligned with region-specific and client-specific standards

  - Audit-ready logs that support internal and external reviews

- Governance and quality management

  - Dashboards that score metadata completeness and highlight gaps

  - Tools to check consistency across channels, shows, and archives

  - Batch scheduling to process thousands of hours of content without manual intervention

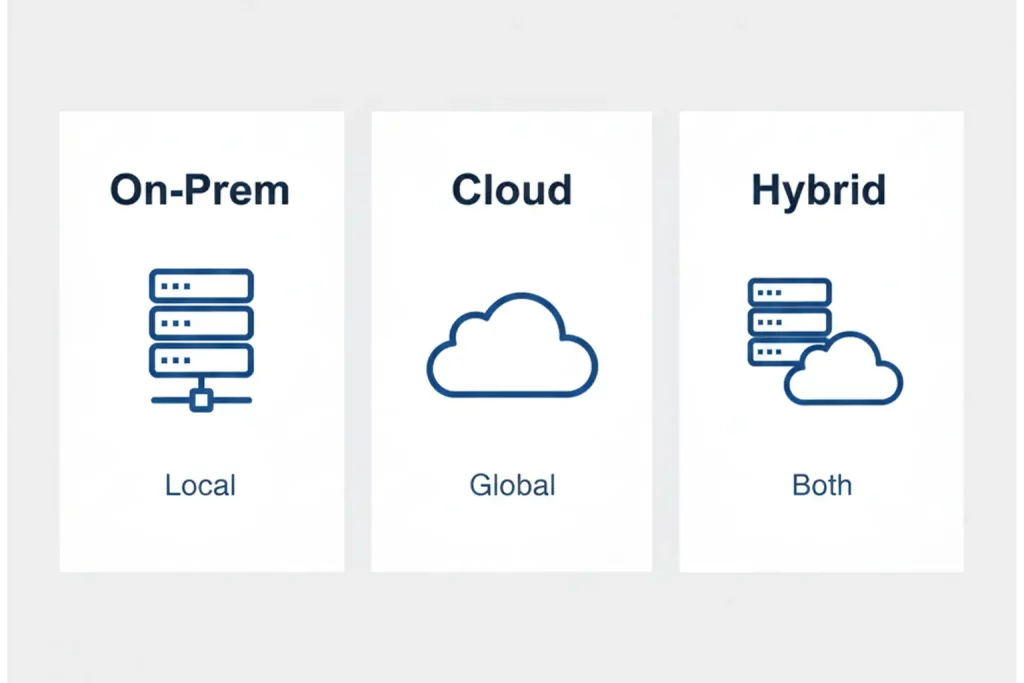

- Seamless integrations

  - Native support for Avid ecosystems, Grass Valley, and leading MAM/DAM platforms

  - API-based connectors for custom workflows across broadcast, OTT, post-production, and archives

Instead of asking teams to learn a new interface, MetadataIQ runs inside the environment they already use, with metadata flowing across ingest, production, distribution, and archive.

Implementation roadmap: scaling metatagging without breaking workflows

Rolling out metatagging and auto metadata tagging at scale works best as a phased initiative.

- Assess where tagging matters most

  - Identify high-impact workflows: live news, sports, flagship shows, marketing libraries, or archives.

  - Quantify the current cost of search time, rework, and compliance risk.

- Map your current metadata landscape

  - Document which systems hold which assets, and where metadata is already being created.

  - Audit a sample of assets to see how consistent tags, naming, and structures really are.

- Define your target taxonomy and policies

  - Align stakeholders from editorial, operations, compliance, and marketing.

  - Agree on a pragmatic core schema that can be extended over time.

- Run a pilot with auto metadata tagging

  - Connect MetadataIQ to a contained set of feeds, shows, or archive collections.

  - Validate tagging quality, integration behavior, and impact on search and turnaround.

- Scale and optimize

  - Expand coverage to additional channels and asset types.

  - Use governance dashboards and usage analytics to refine rules, taxonomies, and priorities.

Throughout, the aim is to make metatagging feel like a natural part of the workflow, not an extra chore. When users can see time-coded markers directly in their timelines, and when search actually returns the clips they expect, adoption tends to take care of itself.
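The audit step in the roadmap above can start as something very small. The sketch below scores a sample of asset records for completeness against a core schema. The required fields and sample records are assumptions for illustration, and the scoring is a generic ratio, not the method used by any specific governance dashboard.

```python
# Hypothetical core schema: fields every asset is expected to carry.
REQUIRED_FIELDS = ("title", "people", "rights", "markers")


def completeness(asset: dict) -> float:
    """Fraction of required fields that are present and non-empty."""
    filled = sum(1 for name in REQUIRED_FIELDS if asset.get(name))
    return filled / len(REQUIRED_FIELDS)


def audit(assets):
    """Return per-asset scores and the average score across the sample."""
    scores = {asset["id"]: completeness(asset) for asset in assets}
    average = sum(scores.values()) / len(scores) if scores else 0.0
    return scores, average


# Invented sample records for illustration only.
sample = [
    {"id": "a1", "title": "Town hall", "people": ["CEO"],
     "rights": "internal", "markers": [12, 340]},
    {"id": "a2", "title": "Raw feed 7", "people": [],
     "rights": "", "markers": []},
]

scores, average = audit(sample)
```

Running a check like this before and after a pilot gives a simple baseline for judging whether automated tagging is actually closing the gaps.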

FAQs

Tagging is often used informally to describe adding a few labels or keywords to a file. Metatagging digital assets goes further: it uses a structured metadata model that covers descriptive, technical, administrative, and time-based information, enabling assets to be organized, searched, governed, and reported on consistently across the entire organization.

Auto metadata tagging creates many more high-quality tags than a human team could reasonably maintain, and it does so consistently. By combining speech-to-text, computer vision, and rule-based tagging, the system can capture who appears, what is being said, and what is shown on screen, all mapped to timecodes. This enables precise searches by person, topic, quote, or moment, instead of relying on file names or a handful of manual keywords.

Modern AI models are accurate enough to handle the majority of routine tagging, especially when trained on media-specific data and fine-tuned for your domain. Platforms such as MetadataIQ combine machine output with configurable rules and human oversight, so your team can set confidence thresholds, review flagged content, and correct edge cases while letting the system handle repetitive tagging tasks at scale.

In practice, auto metadata tagging changes the role of human loggers and metadata specialists rather than eliminating it. They move from manually typing every tag to designing taxonomies, reviewing AI output, and focusing on high-value content and edge cases. This shift allows teams to cover more footage, improve consistency, and direct expertise where it has the most impact.

MetadataIQ is designed to integrate with existing PAM, MAM, and DAM systems. It extracts media directly from platforms such as Avid MediaCentral, generates speech-to-text and video intelligence metadata, and inserts time-coded markers back into the timeline or catalog. That means editors access AI-generated tags inside their familiar tools, and your central asset management platforms receive richer metadata without changing how teams work day to day.

Conclusion

Metatagging digital assets has moved from a nice-to-have to an operational requirement. Without rich, consistent, and time-based metadata, even the most sophisticated storage and asset management platforms become expensive libraries where valuable footage goes to hide.

By embracing auto metadata tagging, you turn raw footage into searchable gold. AI handles the heavy lifting of listening to and watching every second of content, while your teams focus on editorial decisions, compliance judgment, and creative storytelling. The combination unlocks faster search, safer reuse, and new monetization paths across your archives.

Digital Nirvana’s MetadataIQ is built to make that shift real for broadcast, OTT, post-production, and enterprise media teams. It plugs into your existing PAM, MAM, and DAM systems, brings broadcast-grade auto metadata tagging into your workflows, and gives you the governance tools to manage metadata as a strategic asset.

If you are ready to move from manual tagging to scalable metatagging, the next step is to pick one high-impact workflow, connect it to MetadataIQ, and let the data show you how much searchable gold has been hiding in your footage all along.