Global news never speaks just one language.

A single rundown might include English anchors, Spanish field reports, Arabic agency feeds, and French partner clips, all landing in your system within minutes of each other. Producers need to understand what’s being said, editors need to find the right soundbite, and digital teams need localized versions ready for web, OTT, and social.

Trying to manage that with manual workflows, email chains, and scattered vendors is a guaranteed recipe for a bottleneck.

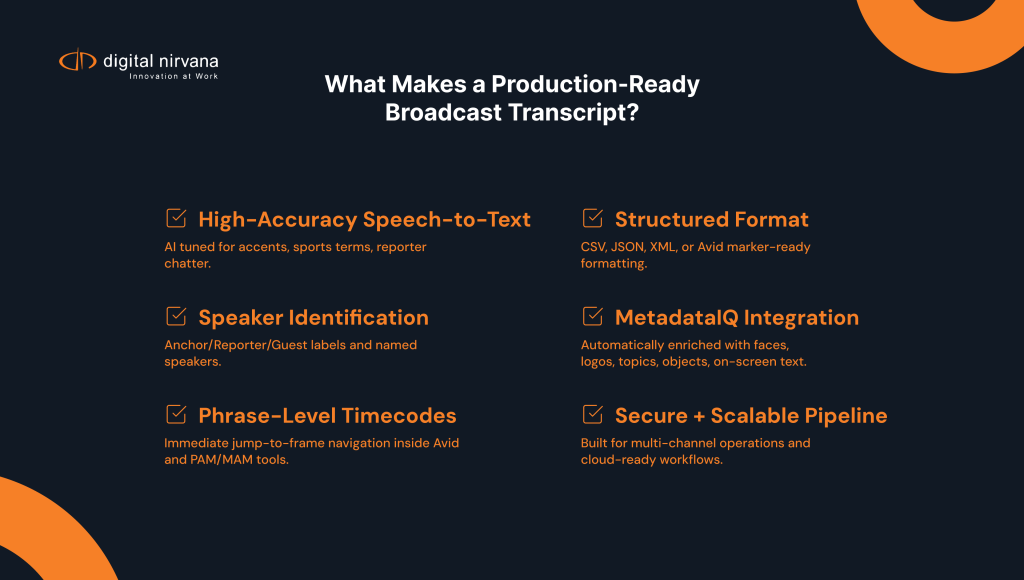

MetadataIQ, from Digital Nirvana, is built to fix that. It applies AI and ML to your incoming content to generate speech-to-text transcripts, captions, and translations, then pushes time-coded metadata back into your Avid and PAM/MAM environments so multilingual content becomes as searchable and usable as your native-language shows.

In this article, we’ll walk through exactly how MetadataIQ handles multilingual transcription for global newsrooms and what that means for speed, consistency, and revenue.

The Multilingual Reality of Modern Newsrooms

If you’re working in an international or multi-market operation, you’re probably dealing with:

- Agency feeds from multiple regions and languages

- Correspondents and bureaus reporting in local languages

- Sports rights and highlights from non-English broadcasters

- Partner channels and syndication across territories

- User-generated or social clips from anywhere in the world

The editorial reality:

- Producers need quick comprehension in a pivot language (often English)

- Editors must be able to search and cut across all languages from within Avid

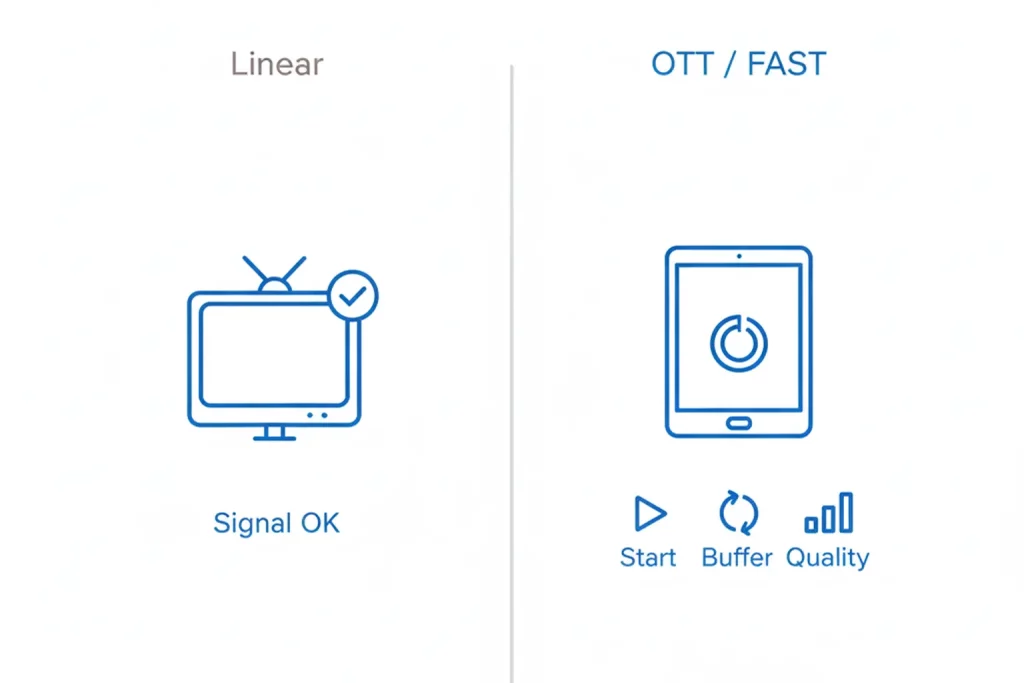

- Digital and OTT teams need subtitled and localized versions on tight deadlines

- Compliance and legal teams must verify what was said, even if it wasn’t in their language

Multilingual transcription is not just a “nice extra.” It’s how global newsrooms stay accurate, fast, and competitive. Digital Nirvana’s own guidance on multilingual transcription highlights this problem: without automation, teams struggle to maintain quality and turnaround times at scale.

MetadataIQ at the Core of Multilingual Transcription

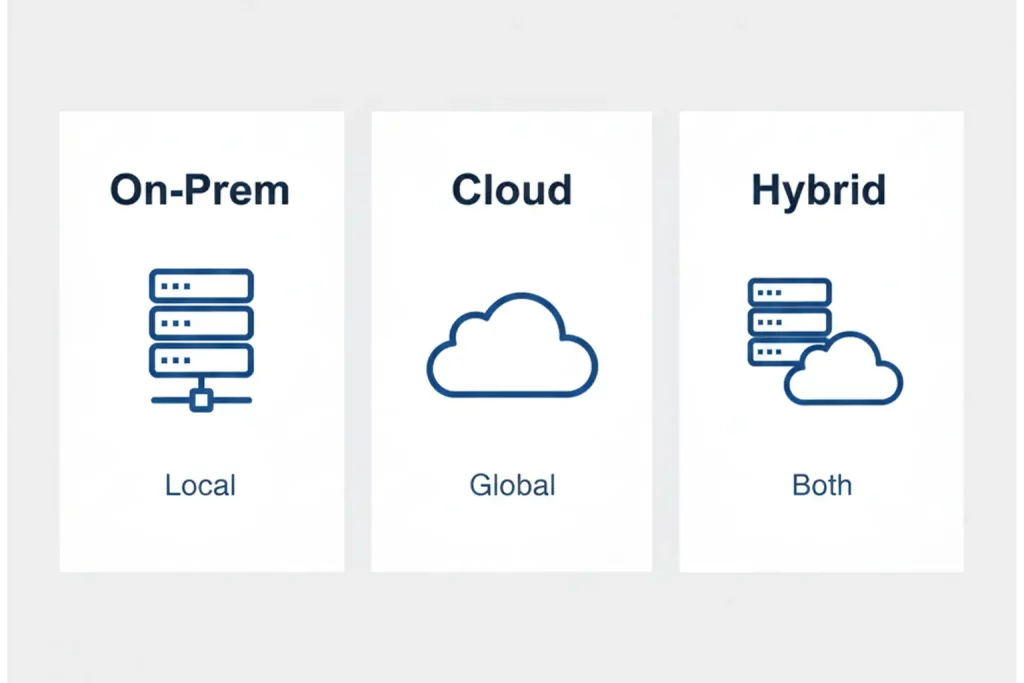

MetadataIQ is Digital Nirvana’s SaaS-based metadata automation platform for Avid and other PAM/MAM environments. It:

- Transfers media or audio essence from your production environment

- Generates speech-to-text transcripts (including non-English languages)

- Routes content for captions, subtitles, and translations

- Sends back time-coded markers and sidecar files that editors can use immediately in Avid Media Composer, MediaCentral, and related systems

In multilingual workflows, MetadataIQ serves as the control layer that keeps transcription, translation, and metadata aligned to the same timecode spine.

Step-by-Step: How MetadataIQ Handles Multilingual Content

1. Ingest and Language Detection

As file-based or live assets arrive, MetadataIQ:

- Connects to Avid (or your PAM/MAM) through off-the-shelf integrations and APIs.

- Extracts audio or proxy streams without forcing you to manage separate low-res proxies.

- Identifies the primary language or languages present in the feed (based on configuration and/or speech engines).

This means you can have multiple language feeds running in parallel without needing separate ad hoc processes per region.

2. AI-Driven Speech-to-Text in Source Language

MetadataIQ then applies AI-driven speech engines to generate transcripts in the original language:

- Handles a variety of broadcast-grade audio conditions (studio, field, stadium).

- Supports multiple languages and accents, tuned over time with your own content.

- Produces time-coded transcripts, line by line, ready to index back against media assets.

These transcripts can be used as-is by local-language teams or as input for translation.

3. Translation and Pivot-Language Transcripts

For global editorial teams, the key is a pivot language, often English.

Using MetadataIQ and Digital Nirvana’s transcription and translation workflows:

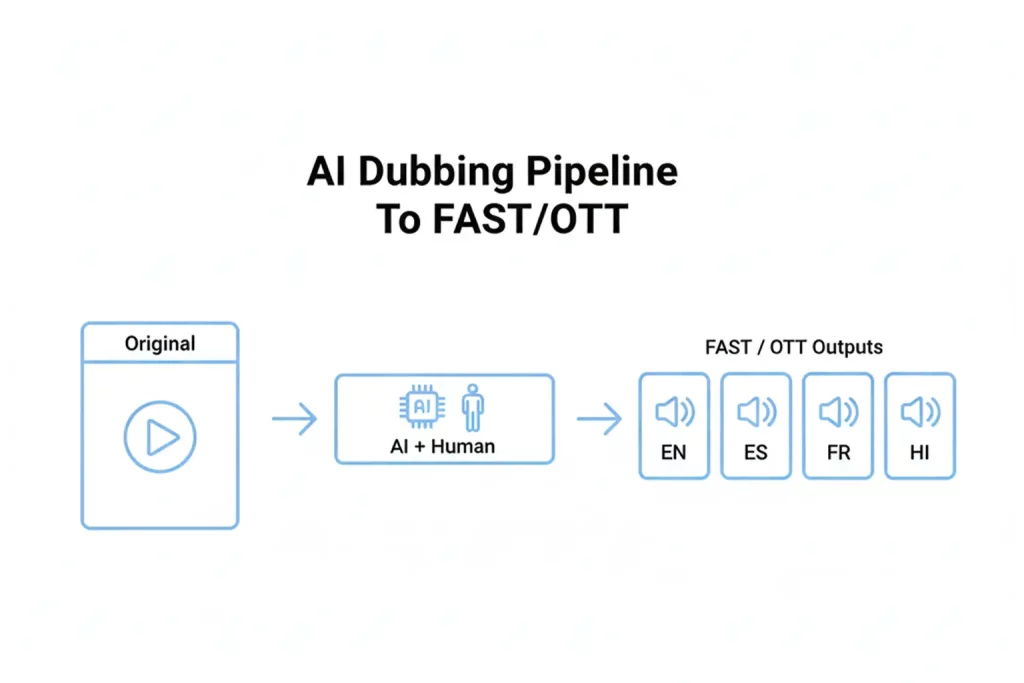

- Source-language transcripts are routed into translation workflows (machine + human, depending on your quality tier).

- You get time-coded translated transcripts that preserve alignment with the original media.

- Editors and producers in headquarters can read, search, and decide in English (or another chosen pivot language), even if the original clip was in a different language.

Because MetadataIQ keeps timecodes consistent across source and translated text, every language runs on the same clock, which is critical for news, compliance, and rights.
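To make the shared-timecode idea concrete, here is a minimal Python sketch. It is not MetadataIQ's data model or API; the `Segment` class, the `attach_translation` helper, and the sample text are hypothetical, chosen only to show translated text being added to existing time-coded segments without changing their start and end times.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One time-coded span of media with text in one or more languages (hypothetical model)."""
    start: float  # seconds from the start of the asset
    end: float
    text: dict = field(default_factory=dict)  # language code -> text


def attach_translation(segments, lang, translated_lines):
    """Add translated text to existing segments; timecodes are never modified."""
    if len(segments) != len(translated_lines):
        raise ValueError("translation must have one line per source segment")
    for segment, line in zip(segments, translated_lines):
        segment.text[lang] = line
    return segments


# Illustrative Spanish source transcript with an English pivot translation.
segments = [
    Segment(0.0, 3.2, {"es": "Buenas tardes desde Madrid."}),
    Segment(3.2, 7.5, {"es": "La ministra anunció nuevas medidas."}),
]
attach_translation(segments, "en", [
    "Good afternoon from Madrid.",
    "The minister announced new measures.",
])
```

Keeping every language on the same segment object means a hit in any language points to the same in and out points on the media.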

4. Captions, Subtitles, and Localized Versions

From the same pipeline, you can generate:

- Closed captions in the original language

- Subtitles in one or more target languages

- Caption translations for OTT and VOD distribution

MetadataIQ submits and retrieves these assets as sidecar files (e.g., SRT, VTT), which are then re-ingested into Avid or handed off to distribution systems.

This lets your team support accessibility and localization without juggling multiple vendors or manual file transfers.
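For readers unfamiliar with sidecar formats, the following Python sketch shows how time-coded cues map onto the SRT layout: a cue number, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` line, then the text. It illustrates the file format only and makes no claim about how MetadataIQ produces its files; `srt_timestamp`, `to_srt`, and the sample cues are hypothetical.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues):
    """Render (start_seconds, end_seconds, text) cues as SRT text."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        timing = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # Cues are separated by a blank line.
    return "\n".join(blocks)


# Illustrative cues; a real subtitle file would come from the translated transcript.
cues = [
    (0.0, 3.2, "Good afternoon from Madrid."),
    (3.2, 7.5, "The minister announced new measures."),
]
srt_text = to_srt(cues)
```

SRT timestamps are elapsed time in milliseconds, so a workflow that needs frame-accurate broadcast timecode would also have to account for the asset's frame rate and start timecode, which this sketch leaves out.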

5. Time-Coded Metadata in Avid and PAM/MAM

The real power of MetadataIQ for global newsrooms is that multilingual transcription doesn’t live in a silo; it goes back into your editing and archive tools:

- Transcripts (source and translated) become time-coded markers in Avid Media Composer and MediaCentral.

- Editors can search by keywords, names, places, and brands, even across languages.

- Producers can quickly identify which feeds contain usable quotes or visuals.

- Archivists can tag and classify content for future reuse in multiple languages.

Result: Multilingual content behaves exactly like native-language content in your workflow: searchable, discoverable, and ready to cut.
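The cross-language search described in this step can be pictured with a small Python sketch. This is a toy in-memory search, not Avid's or MetadataIQ's search interface; the marker dictionaries, the asset IDs, and the `search_markers` function are hypothetical.

```python
def search_markers(markers, query):
    """Return (asset_id, start_seconds, language) for every marker text containing the query."""
    needle = query.casefold()  # case-insensitive match across scripts
    hits = []
    for marker in markers:
        for lang, text in marker["text"].items():
            if needle in text.casefold():
                hits.append((marker["asset"], marker["start"], lang))
    return hits


# Illustrative markers: each carries source-language text plus an English translation.
markers = [
    {"asset": "feed-es-001", "start": 3.2,
     "text": {"es": "La ministra anunció nuevas medidas.",
              "en": "The minister announced new measures."}},
    {"asset": "feed-fr-002", "start": 0.0,
     "text": {"fr": "Le match commence à vingt heures.",
              "en": "The match starts at eight p.m."}},
]

english_hits = search_markers(markers, "New Measures")
```

Here an English query finds a Spanish-language clip through its translated marker and returns the timecode where the editor should start looking.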

Key Benefits for Global Newsrooms

1. Faster, More Confident Editorial Decisions

- Producers get English (or chosen pivot language) transcripts quickly so they can decide which feeds to prioritize.

- Editors can search all incoming feeds by topic or name, without worrying about language barriers.

- Teams cut highlight reels and packages from multilingual material at the same speed as domestic content.

2. Consistent Compliance Across Regions

With multilingual transcripts and translations aligned to timecode:

- Compliance teams can verify what was said in any language, with an audit-ready record.

- Legal and policy teams can quickly review sensitive segments (politics, hate speech, misinformation).

- Logs from tools like MonitorIQ align with transcript and caption data, strengthening your compliance posture.

3. Scalable Localization Without Headcount Spikes

- AI and human-in-the-loop workflows let you set different quality tiers for each show, region, or platform.

- High-volume items (e.g., rolling news) can use more automated chains, while flagship programming gets a curated review.

- A single MetadataIQ-based pipeline means fewer vendors to manage and fewer manual steps.
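As a rough illustration of quality tiers, the Python sketch below picks processing steps from a per-show tier table. The show names, tier labels, step names, and the `pick_pipeline` function are all hypothetical and do not reflect MetadataIQ's actual configuration options.

```python
# Hypothetical tier table; show names and tier labels are illustrative only.
TIER_BY_SHOW = {
    "rolling-news": "automated",
    "flagship-evening": "human-review",
}
DEFAULT_TIER = "automated"


def pick_pipeline(show, source_lang, pivot_lang="en"):
    """Return the ordered processing steps for one asset."""
    steps = ["speech_to_text"]
    # Translation is only needed when the source is not already in the pivot language.
    if source_lang != pivot_lang:
        steps.append("machine_translation")
    # Flagship shows get a human pass on top of the automated chain.
    if TIER_BY_SHOW.get(show, DEFAULT_TIER) == "human-review":
        steps.append("human_review")
    return steps


rolling = pick_pipeline("rolling-news", "ar")
flagship = pick_pipeline("flagship-evening", "en")
```

Keeping the tier decision in one table is what lets a newsroom change the quality level for a show without touching the rest of the workflow.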

4. Better Archive Value Across Markets

Because transcripts and translations are written back as metadata:

- Archive teams can search archives by language, topic, and market.

- Content can be repurposed for new regions and platforms without redoing the foundational work.

- Rights and syndication teams can quickly identify region-specific clips.

Why Digital Nirvana + MetadataIQ for Multilingual Transcription?

Digital Nirvana combines:

- Decades of broadcast and compliance experience

- AI/ML capabilities for speech-to-text, video intelligence, and translation

- Deep integration with Avid and modern PAM/MAM stacks

MetadataIQ, specifically:

- Automates speech-to-text and video intelligence metadata generation

- Submits media directly to transcription, captioning, and translation workflows

- Ingests enriched, time-coded metadata back into your production environment as markers and sidecars

- Is already used by global news organizations handling dozens of live feeds simultaneously

For global newsrooms, that means multilingual transcription isn’t an add-on; it’s built into the same tools you’re already using to cut shows and manage archives.

FAQs: Multilingual Transcription with MetadataIQ

Which languages does MetadataIQ support?

MetadataIQ leverages industry-grade speech and translation engines, combined with Digital Nirvana’s broadcast tuning, to support a wide range of major broadcast languages (e.g., English, Spanish, French, German, Arabic, and more), with the ability to expand and customize based on your footprint and content mix. Specific language coverage and accuracy can be validated during a pilot for your newsroom.

Can MetadataIQ handle more than one language in the same feed?

Yes. Many global feeds include code-switching: anchors in one language, guests in another, on-screen graphics in a third. MetadataIQ’s workflows can handle multi-language segments by:

- Detecting or configuring expected languages

- Generating source-language transcripts per segment

- Routing segments for appropriate translation and captioning while keeping a single timecode spine
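One way to picture per-segment handling is to collapse consecutive same-language segments into runs that can each be routed separately while keeping their original timecodes. The Python sketch below assumes language labels have already been assigned to each segment; the segment dictionaries and the `language_runs` function are hypothetical, not part of MetadataIQ.

```python
from itertools import groupby


def language_runs(segments):
    """Collapse consecutive same-language segments into (lang, start, end) runs."""
    runs = []
    for lang, group in groupby(segments, key=lambda s: s["lang"]):
        group = list(group)
        runs.append((lang, group[0]["start"], group[-1]["end"]))
    return runs


# Illustrative feed: an English anchor, an Arabic guest, then the anchor again.
segments = [
    {"lang": "en", "start": 0.0, "end": 4.0},
    {"lang": "en", "start": 4.0, "end": 9.5},
    {"lang": "ar", "start": 9.5, "end": 15.0},
    {"lang": "en", "start": 15.0, "end": 18.0},
]
runs = language_runs(segments)
```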

Is AI transcription accurate enough, or do we still need human review?

AI-driven multilingual transcription has improved significantly and is ideal for speed and scale, especially for rolling news and significant backlogs. For critical or premium content, MetadataIQ can route assets to Digital Nirvana’s human-curated transcription and translation services or your internal teams via TranceIQ, giving you a hybrid model: automation for volume, humans for precision.

How do multilingual transcripts appear in Avid?

Multilingual transcripts and translations are ingested as time-coded markers and sidecar files within Avid environments:

- Editors can search inside Media Composer or MediaCentral by keywords, names, and phrases, regardless of original language.

- Translated text can appear as additional metadata fields or markers, depending on your configuration.

This keeps everyone working in familiar tools, without switching between external apps.

Can we start with a small pilot and scale later?

Absolutely. Most customers start with a limited scope (for example, one channel or one region with a subset of languages) to prove time savings and validate quality. MetadataIQ’s architecture and licensing are designed to scale to more languages, channels, and markets without rethinking the entire workflow.

How is pricing structured?

Pricing typically factors in the number of hours of media processed, the languages involved, and whether you use an automated-only or hybrid (AI + human) pipeline. Because MetadataIQ centralizes transcription, captioning, and translation workflows, many customers replace multiple scattered costs with a single, more predictable model. Your Digital Nirvana team would size a model based on channels, volume, and language mix.