When your channel is live, every second that goes on air either becomes an asset you can find and reuse, or it disappears into the noise.

The difference often comes down to one thing: whether your live broadcast metadata is rich, accurate, and generated in real time, or scattered across spreadsheets, log sheets, and people’s memories.

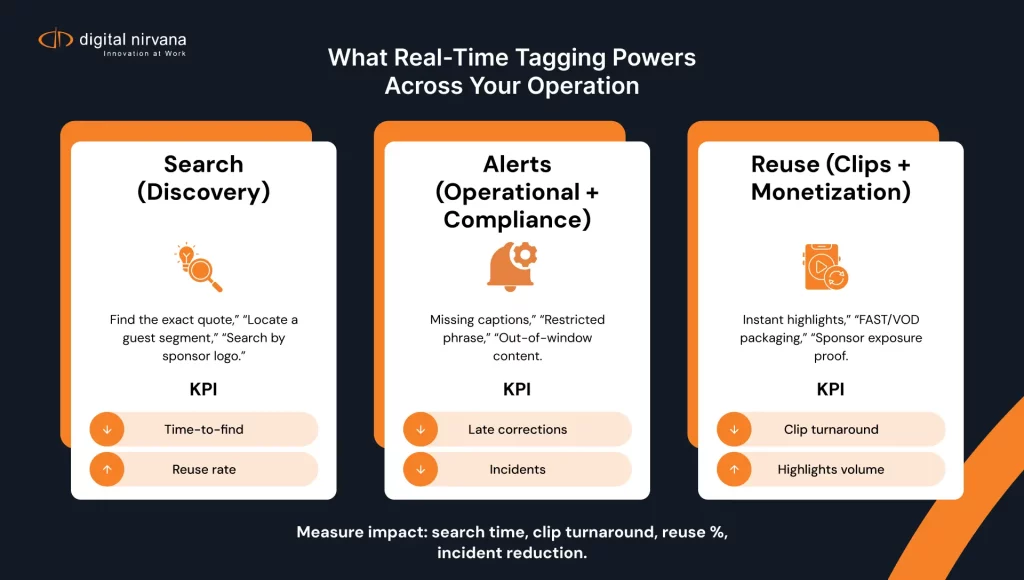

Live broadcast metadata and real-time tagging turn rolling feeds into structured, searchable, and actionable content. They make it possible to find the exact ten seconds you need, trigger alerts before something goes wrong, and repurpose live moments across every platform you manage. Timed metadata is already used across modern live streaming platforms to embed information at precise points in the stream, so it can be acted on during the event and long after it has ended.

In this article, we explore how live broadcast metadata works, how real-time tagging powers search, alerts, and reuse, and how Digital Nirvana’s MetadataIQ helps media teams operationalize all of this across PAM and MAM environments.

What Is Live Broadcast Metadata

Live broadcast metadata is the structured information that describes what is happening in your live feed at any given moment.

It can include:

- Descriptive metadata such as topics, segments, guests, teams, locations, and program names

- Technical metadata such as formats, audio configurations, languages, and SCTE markers

- Compliance metadata, such as restricted content, rights windows, territories, and age ratings

- Engagement metadata, such as key moments, highlights, or social-ready clips

The most important characteristic is timing. Live broadcast metadata is usually time-coded, meaning each tag or marker is aligned to a specific time position in the video or audio. This is similar to timed metadata in live streaming, where tags are embedded at precise timestamps to trigger graphics, interactions, or other experiences for viewers.
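To make the idea of time-coded metadata concrete, here is a minimal sketch of how a single marker could be modeled. The `Marker` and `Category` names, the field layout, and the sample values are assumptions made for illustration; they do not describe any particular product's schema.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # The four metadata families listed above.
    DESCRIPTIVE = "descriptive"
    TECHNICAL = "technical"
    COMPLIANCE = "compliance"
    ENGAGEMENT = "engagement"


@dataclass(frozen=True)
class Marker:
    """One time-coded tag on a live feed (hypothetical schema)."""
    start_s: float  # offset from the start of the feed, in seconds
    end_s: float
    category: Category
    label: str      # kind of tag, e.g. "guest" or "highlight"
    value: str

    def __post_init__(self):
        if self.end_s < self.start_s:
            raise ValueError("marker ends before it starts")

    def covers(self, t: float) -> bool:
        """True if position t (in seconds) falls inside this marker."""
        return self.start_s <= t < self.end_s


def active_at(markers, t):
    """Return every marker that describes the feed at position t."""
    return [m for m in markers if m.covers(t)]


# Made-up sample data for a half-hour program.
markers = [
    Marker(0.0, 1800.0, Category.DESCRIPTIVE, "program", "Evening News"),
    Marker(312.0, 498.5, Category.DESCRIPTIVE, "guest", "Jane Doe"),
    Marker(400.0, 430.0, Category.ENGAGEMENT, "highlight", "key quote"),
]
print([m.value for m in active_at(markers, 410.0)])
```

Because every marker carries its own start and end position, a question such as "what was on air at 6 minutes 50 seconds?" reduces to a simple range check.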

Unlike file-based or archival metadata, which may be applied once and rarely updated, live broadcast metadata is created on the fly as events unfold. Modern systems now generate that metadata automatically, rather than relying solely on human loggers.

How Real-Time Tagging Works In Live Broadcast Workflows

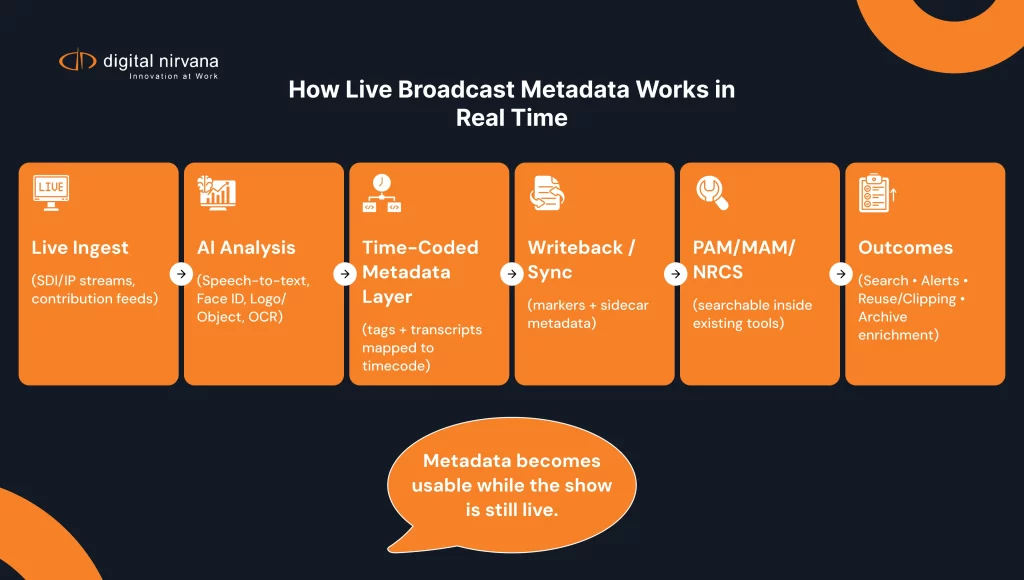

In a typical broadcast or streaming environment, real-time tagging sits between ingest and the tools used by editors, producers, and compliance teams.

A simplified view looks like this.

- Feeds arrive from studios, control rooms, satellites, contribution links, or IP streams.

- A metadata automation platform, such as MetadataIQ, receives live or near-live content.

- AI and machine learning models generate multiple layers of metadata, for example:

  - Speech-to-text transcripts for dialogue and commentary

  - Facial recognition for anchors, guests, players, or public figures

  - Object and logo detection for sponsors, sets, and products

  - On-screen text recognition for graphics and lower thirds

- These tags are written back as time-coded markers in PAM, MAM, and NRCS systems, so your teams can search for and use them in familiar tools.

- The same metadata can also be delivered as sidecar files or pushed into downstream systems that manage compliance, monitoring, or distribution.

Real-time tagging shortens the gap between the moment something airs and the moment it becomes searchable in your PAM or MAM. Instead of waiting for manual logs or after-the-fact processing, your live metadata is available while your producers are still working through the rundown.
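The flow above, in which several analysis layers produce tags that end up as one set of time-coded markers or a sidecar file, can be sketched roughly as follows. This is an illustration under stated assumptions, not a description of how MetadataIQ works: the tag dictionaries, the `merge_layers` and `to_sidecar` helpers, and the JSON layout are all hypothetical.

```python
import heapq
import json


def merge_layers(*layers):
    """Merge per-model tag streams, each already sorted by time, into one timeline."""
    return list(heapq.merge(*layers, key=lambda tag: tag["t"]))


def to_sidecar(feed_id, timeline):
    """Serialize the merged timeline as a JSON sidecar document (made-up layout)."""
    return json.dumps({"feed": feed_id, "markers": timeline}, indent=2)


# Made-up output from three analysis layers; "t" is seconds from feed start.
speech = [
    {"t": 12.4, "layer": "speech", "value": "welcome back to the program"},
    {"t": 31.0, "layer": "speech", "value": "joining us now from the stadium"},
]
faces = [{"t": 12.9, "layer": "face", "value": "Anchor A"}]
on_screen_text = [{"t": 13.5, "layer": "ocr", "value": "BREAKING NEWS"}]

timeline = merge_layers(speech, faces, on_screen_text)
sidecar = to_sidecar("channel-1", timeline)
print(sidecar)
```

`heapq.merge` is used because each layer already emits tags in time order, so the streams can be interleaved lazily without re-sorting everything.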

Why Real-Time Metadata Matters For Search

Search is often where the pain is felt most. If your live content isn’t properly tagged, your team spends more time hunting than producing.

Real-time metadata changes that.

- Editors can search by people, topics, teams, or specific phrases rather than guessing filenames or scrolling through timelines.

- Producers can find reusable moments from earlier in the day, week, or season and drop them into new packages.

- Archive teams can ingest live and fast-turn content with richer, more consistent metadata, which makes the entire library more valuable.

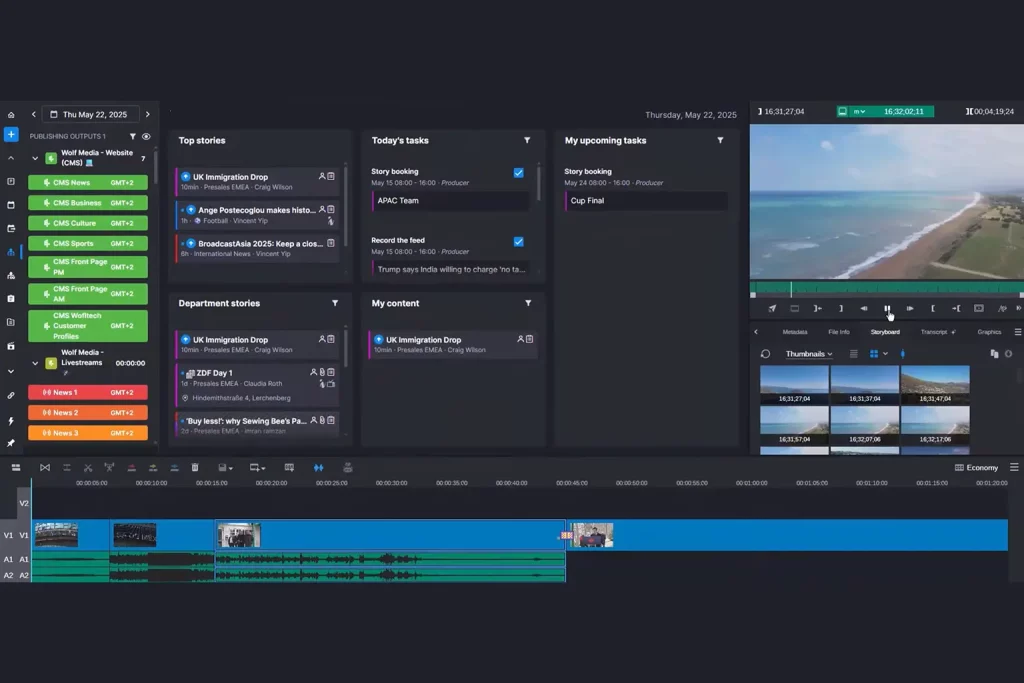

Modern metadata automation platforms are built to feed this intelligence back into your existing PAM and MAM tools, rather than forcing you into a new repository. MetadataIQ, for example, integrates via APIs with Avid and other leading media management platforms, and pushes time-coded metadata markers directly into familiar edit and production environments.

The result is a search experience that feels natural to storytellers. They type what they remember, and the system returns the exact moments that match.
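As a rough picture of what "type what you remember" means underneath, the sketch below builds a small inverted index over time-coded transcript snippets and returns the moments where every query word appears. The sample snippets and the `build_index` and `search` helpers are assumptions for illustration; production PAM and MAM search is considerably more capable.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"[a-z0-9']+")


def build_index(snippets):
    """Map each lowercase word to the positions of the snippets containing it."""
    index = defaultdict(set)
    for position, (_start_s, text) in enumerate(snippets):
        for token in TOKEN.findall(text.lower()):
            index[token].add(position)
    return index


def search(index, snippets, query):
    """Return the (start_s, text) snippets that contain every word in the query."""
    tokens = TOKEN.findall(query.lower())
    if not tokens:
        return []
    hits = set.intersection(*(index.get(token, set()) for token in tokens))
    return [snippets[position] for position in sorted(hits)]


# Made-up transcript snippets: (offset in seconds, spoken text).
snippets = [
    (95.0, "The mayor announced a new transit plan today"),
    (140.5, "Critics say the transit plan is underfunded"),
    (610.0, "In sports, the home team won in overtime"),
]
index = build_index(snippets)
print(search(index, snippets, "transit plan"))
print(search(index, snippets, "mayor transit"))
```

Each result carries its time offset, so an editor can jump straight to the matching moment instead of scrubbing through the timeline.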

Powering Automated Alerts With Live Broadcast Metadata

Live broadcast metadata is not only about discovery. It is also a powerful signal for real-time alerts.

When your metadata pipeline is capturing what is on screen and in audio, you can define rules that watch for:

- Compliance risks, such as restricted phrases, sensitive topics, or missing disclaimers

- Rights-related issues, such as out-of-window footage, territory constraints, or sponsor conflicts

- Operational gaps, such as missing captions, missing graphics, or problems signaled by SCTE markers

As more broadcasters adopt AI-driven captioning and metadata, they are using these signals for monitoring and quality control in addition to accessibility and search.

With a platform like MetadataIQ sitting alongside your compliance and monitoring stack, live metadata can help trigger alerts faster and with better context, so your teams can correct issues before they impact viewers or regulators.
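A minimal sketch of rule-based alerting on live metadata is shown below. It covers two of the conditions named above, restricted phrases in the transcript and missing captions. The `Alert` type, the function names, the thresholds, and the sample data are hypothetical, and real compliance rules would be far richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    t: float      # position in the feed, in seconds
    rule: str
    detail: str


def restricted_phrase_alerts(transcript, phrases):
    """Flag transcript lines containing any restricted phrase (case-insensitive)."""
    alerts = []
    for t, text in transcript:
        lowered = text.lower()
        for phrase in phrases:
            if phrase.lower() in lowered:
                alerts.append(Alert(t, "restricted_phrase", phrase))
    return alerts


def caption_gap_alerts(caption_times, max_gap_s, start_s, now_s):
    """Flag stretches longer than max_gap_s in which no caption cue arrived."""
    points = [start_s] + sorted(caption_times) + [now_s]
    return [
        Alert(earlier, "caption_gap", f"{later - earlier:.1f}s without captions")
        for earlier, later in zip(points, points[1:])
        if later - earlier > max_gap_s
    ]


# Made-up sample data.
transcript = [(10.0, "Welcome back"), (42.0, "This is a Guaranteed Returns offer")]
phrase_alerts = restricted_phrase_alerts(transcript, ["guaranteed returns"])
gap_alerts = caption_gap_alerts([2.0, 5.0, 30.0], max_gap_s=10.0, start_s=0.0, now_s=33.0)
print(phrase_alerts)
print(gap_alerts)
```

Because each alert keeps the time position that triggered it, the person responding can open the feed at that point rather than searching for the problem.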

Powering Reuse And Monetization With Live Broadcast Metadata

The third pillar is reuse.

Live content is expensive to produce. If metadata is thin, you only use a fraction of what you capture. When your live broadcast metadata is rich and time-coded, reuse becomes a normal part of the workflow instead of a separate project.

Real-time tagging enables:

- Instant highlights and recaps for sports, where key plays, goals, or penalties are tagged automatically and turned into clips within minutes.

- Rapid creation of social media versions from live news, where producers search for a quote or topic and export it instantly in vertical or platform-specific formats.

- Efficient packaging of live feeds into VOD libraries and FAST channels, with metadata used to synchronize content, personalize ads, and assemble themed channels.

This kind of reuse depends on being able to trust your metadata. That requires automation, governance, and systems designed to handle broadcast-scale operations.
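One way to picture the step from tagged key moments to clips: pad each tagged event with some pre-roll and post-roll, then merge ranges that overlap so a quick sequence of plays becomes a single clip. The `clip_ranges` helper and its default padding values are assumptions chosen for illustration.

```python
def clip_ranges(event_times, pre_roll_s=5.0, post_roll_s=10.0):
    """Turn tagged event times into padded, non-overlapping (start, end) clip ranges."""
    ranges = []
    for t in sorted(event_times):
        start = max(0.0, t - pre_roll_s)  # never start before the feed begins
        end = t + post_roll_s
        if ranges and start <= ranges[-1][1]:
            # Overlaps the previous clip: extend it instead of cutting a new one.
            ranges[-1] = (ranges[-1][0], max(ranges[-1][1], end))
        else:
            ranges.append((start, end))
    return ranges


# Made-up event times in seconds: two plays close together, one much later.
print(clip_ranges([120.0, 128.0, 600.0]))
```

The resulting ranges could then be handed to whatever tool performs the actual cut; that hand-off is outside the scope of this sketch.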

Common Challenges With Live Broadcast Metadata

Many broadcasters and media teams know they should be doing more with live metadata, but run into familiar obstacles.

- Manual logging and inconsistent taxonomies

  - Human loggers are expensive, hard to scale, and do not always use consistent tags across shows or regions.

  - Important details get missed when shows are fast-paced or when multiple feeds arrive at once.

- Siloed systems across PAM, MAM, NRCS, and playout

  - Metadata created in one system does not always make it into others.

  - When editors, producers, and archive teams cannot see the same metadata, they duplicate effort and lose trust in the data.

- Quality and governance at scale

  - As you add more channels, languages, and markets, keeping metadata consistent becomes a governance challenge rather than just a technical one.

  - Without quality scoring and audit tools, it is hard to know which parts of your catalog are well described and which are not.

These are the gaps MetadataIQ is designed to address.
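To illustrate two of the challenges listed in this section, inconsistent tags and the lack of quality scoring, here is a small sketch of tag normalization and a completeness audit. The synonym table, the required field names, the threshold, and the sample catalog are all made up for the example and do not describe MetadataIQ's own governance features.

```python
REQUIRED_FIELDS = ("program", "topic", "language", "rights_window")

# Hypothetical synonym table mapping free-form logger tags to canonical terms.
CANONICAL = {
    "pm": "prime minister",
    "prime minister": "prime minister",
    "footy": "football",
    "football": "football",
}


def normalize_tag(raw):
    """Lowercase, collapse whitespace, and map known synonyms to one canonical tag."""
    key = " ".join(raw.lower().split())
    return CANONICAL.get(key, key)


def completeness(asset):
    """Fraction of required fields that are present and non-empty."""
    filled = 0
    for name in REQUIRED_FIELDS:
        value = asset.get(name)
        if value is not None and str(value).strip():
            filled += 1
    return filled / len(REQUIRED_FIELDS)


def needs_review(catalog, threshold=0.75):
    """Return the IDs of assets whose completeness score is below the threshold."""
    return sorted(
        asset_id for asset_id, asset in catalog.items()
        if completeness(asset) < threshold
    )


# Made-up catalog entries.
catalog = {
    "news-0600": {"program": "Morning News", "topic": "election",
                  "language": "en", "rights_window": "2025-12-31"},
    "sport-1930": {"program": "Match Night", "topic": "", "language": "en"},
}
print(normalize_tag("  PM "), "|", normalize_tag("Footy"))
print(needs_review(catalog))
```

A simple score like this will not capture tag accuracy, but it does show which parts of a catalog are thinly described.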

How MetadataIQ Simplifies Live Broadcast Metadata At Scale

MetadataIQ is Digital Nirvana’s AI-powered metadata automation platform, built specifically for broadcast-grade media workflows. It focuses on one job: generating, enriching, and governing metadata for your audio and video content, and feeding that intelligence back into the systems you already use.

For live broadcast metadata, MetadataIQ provides several benefits.

- Automated, multi-modal analysis

  - Speech-to-text converts speech into searchable transcripts.

  - Computer vision identifies faces, logos, objects, and on-screen text.

  - The system generates descriptive, technical, and compliance-oriented metadata in parallel.

- Time-coded metadata for live and fast turn

  - Tags and transcripts are aligned with timecode and written as markers or sidecar metadata.

  - This allows editors to jump directly to moments of interest inside Avid or other PAM and MAM tools.

- Integration with PAM, MAM, and NRCS

  - MetadataIQ connects through APIs and modern interfaces to fit into newsrooms and production environments, including Avid ecosystems.

  - Metadata stays in sync as content moves from live ingest to edit, archive, compliance, and distribution.

- Governance and quality management

  - The platform tracks metadata quality, supports configurable taxonomies, and helps standardize metadata across channels and teams.

The key point is that MetadataIQ does this without forcing you to replace your existing PAM or MAM. It becomes the backbone of your metadata workflows rather than yet another repository.
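Aligning tags with timecode usually means converting between an offset in seconds and an `HH:MM:SS:FF` timecode string. The sketch below shows that arithmetic for non-drop-frame timecode at an integer frame rate. It is a generic illustration rather than MetadataIQ code, and the default of 25 fps is an assumption. Drop-frame timecode at 29.97 fps needs additional handling that is not shown here.

```python
def seconds_to_timecode(seconds, fps=25):
    """Convert an offset in seconds to non-drop-frame HH:MM:SS:FF."""
    if seconds < 0:
        raise ValueError("offset must not be negative")
    total_frames = round(seconds * fps)
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours, remainder = divmod(total_seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"


def timecode_to_seconds(timecode, fps=25):
    """Convert non-drop-frame HH:MM:SS:FF back to an offset in seconds."""
    hours, minutes, secs, frames = (int(part) for part in timecode.split(":"))
    return hours * 3600 + minutes * 60 + secs + frames / fps


print(seconds_to_timecode(3725.48))
print(timecode_to_seconds("01:02:05:12"))
```

Working in whole frames avoids rounding drift when a marker is converted back and forth between the two representations.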

Blueprint To Implement Real-Time Tagging For Your Live Feeds

Moving from manual logs to automated live metadata is easier when you take a phased approach.

- Map your use cases

  - Identify where live metadata will have the biggest impact, for example rolling news, sports highlights, compliance monitoring, or archive reuse.

- Select pilot channels or programs

  - Choose a mix of high-value and representative shows or feeds.

  - Define success criteria, such as faster search, reduced logging time, or quicker clip turnaround.

- Connect MetadataIQ to your live sources and PAM or MAM

  - Set up ingest for live or near-live streams into MetadataIQ.

  - Integrate with PAM, MAM, and NRCS systems so time-coded metadata flows back into existing tools.

- Design taxonomies, rules, and alert conditions

  - Define the tags, entities, and categories that matter most for your operation.

  - Configure alert triggers for compliance, rights, or operational events detectable from metadata.

- Build review and improvement loops

  - Have editors and producers validate AI-generated tags as part of their normal workflows.

  - Use their feedback to tune models, taxonomies, and rules.

- Scale across channels and content types

  - Once the pilot delivers measurable gains, extend real-time tagging to more channels, languages, and markets.
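For the review and improvement loop in the blueprint, one simple signal is how often editors accept the AI-generated tags from each analysis layer. The sketch below computes that acceptance rate from a list of review decisions. The `(layer, accepted)` record format and the sample decisions are assumptions for illustration.

```python
from collections import Counter


def acceptance_rates(reviews):
    """Return the share of tags accepted by reviewers, per analysis layer."""
    accepted, total = Counter(), Counter()
    for layer, was_accepted in reviews:
        total[layer] += 1
        if was_accepted:
            accepted[layer] += 1
    return {layer: accepted[layer] / total[layer] for layer in total}


# Made-up review decisions: (analysis layer, did the editor accept the tag?).
reviews = [
    ("face", True), ("face", True), ("face", False),
    ("logo", False), ("logo", True),
    ("ocr", True),
]
rates = acceptance_rates(reviews)
for layer, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{layer}: {rate:.0%} accepted")
```

Layers with the lowest acceptance rate are natural candidates for tuning first, whether that means the model, the taxonomy, or the rules.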

Key Use Cases For Live Broadcast Metadata

Live broadcast metadata and real-time tagging are relevant across many types of media operations.

Newsrooms and rolling news

- Breaking news and rolling coverage require rapid turnaround. Real-time metadata lets teams locate quotes, reactions, and context from earlier in the cycle without rewatching entire segments.

Live sports and events

- In sports, metadata tagging of players, actions, and key events allows immediate highlight creation, deeper analysis, and better fan engagement.

OTT and FAST channels

- For OTT and FAST providers, live metadata is essential for dynamic ad insertion, playlist assembly, VOD packaging, and time-shifting features.

Enterprise and government channels

- Corporations and public sector organizations running internal channels can use live metadata to archive town halls, legislative sessions, and events so that specific topics or speakers can be found later.

Measuring Success: KPIs For Live Metadata And Real-Time Tagging

To prove the value of live broadcast metadata, it helps to define clear KPIs from the start.

Consider tracking:

- Average time to find footage for a story, package, or promo before and after automation

- Turnaround time for highlights, recaps, and social clips

- Percentage of archive or recent content reused in new productions

- Reduction in compliance incidents or late corrections triggered after air

- Logging or metadata-related labor hours saved, redeployed to higher-value work
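A before-and-after comparison for KPIs like these can be as simple as the sketch below, which compares median time-to-find and computes a reuse rate. All figures are illustrative placeholders, not measured results, and the function names are hypothetical.

```python
from statistics import median


def percent_change(before, after):
    """Relative change from before to after, in percent (negative means a reduction)."""
    return (after - before) / before * 100.0


def reuse_rate(assets_reused, assets_total):
    """Share of assets that were reused in at least one new production."""
    return assets_reused / assets_total


# Placeholder samples: seconds spent finding footage for a story.
search_before = [540, 420, 780, 600]
search_after = [95, 120, 60, 150]

change = percent_change(median(search_before), median(search_after))
print(f"Median time to find footage changed by {change:.1f}%")
print(f"Reuse rate: {reuse_rate(45, 300):.0%}")
```

The median is used instead of the mean so that one unusually long search does not distort the comparison.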

Work on metadata management generally associates richer, well-governed metadata with better discovery, reuse, and broader digital transformation outcomes. MetadataIQ is designed to make those benefits accessible to broadcast and media teams by automating the most time-consuming parts of the process and integrating with existing workflows.

FAQs

How is live broadcast metadata different from archive metadata?

Live broadcast metadata is generated during or immediately after a live event. It focuses on making content searchable, alert-ready, and reusable while production is still underway. Archive metadata is often applied later and may be less granular or time-specific. Modern platforms blur this line by continuing to enrich metadata after live events while keeping the same time-coded spine.

Do I need to replace my existing PAM or MAM to use MetadataIQ?

No. MetadataIQ is designed to sit alongside your existing PAM and MAM systems and feed them with rich, time-coded metadata through APIs and integrations. It does not replace your asset repositories. That makes it easier to adopt in environments built around Avid and other established platforms.

How accurate is AI-generated metadata for live broadcast?

AI-generated metadata has improved significantly, especially for speech-to-text, face recognition, and logo detection. A hybrid model works best in broadcast. Automation handles the volume and speed of live feeds, while human review focuses on high-value content, taxonomy tuning, and edge cases. Over time, feedback loops help models align with your preferred language, style, and tagging rules.

Can real-time tagging handle live feeds in multiple languages?

Yes. Platforms like MetadataIQ support multilingual transcription and translation workflows and return time-coded transcripts and tags to PAM and MAM, even in environments handling dozens of simultaneous live feeds in different languages.

How does live broadcast metadata support monetization?

Accurate live metadata supports monetization in several ways. It enables dynamic and targeted ad insertion, makes it easier to prove sponsor exposure and placements, and increases the volume of highlights and derivative content that can be sold, syndicated, or packaged for partners and platforms.

Conclusion

Live broadcast metadata and real-time tagging are no longer optional. They are becoming the foundation for search, compliance, and content reuse in modern media operations.

Without them, your teams spend time chasing clips and fixing problems after air. With them, your live feeds become structured data streams that drive faster storytelling, stronger governance, and better monetization.

MetadataIQ is designed to make that shift practical. It automates the creation of rich, time-coded metadata, integrates with your existing PAM and MAM stack, and provides editors, producers, and compliance teams with a shared source of truth for what is in your content.

If you are ready to move from manual logging to live metadata that powers search, alerts, and reuse, the next step is to define your pilot and see how much time and value you can unlock.

Key Takeaways:

- Live broadcast metadata turns live feeds into searchable, structured assets aligned to timecode.

- Real-time tagging powers three high-value outcomes: better search, smarter alerts, and higher reuse and monetization.

- Manual logging and siloed systems limit metadata’s impact, particularly at broadcast scale.

- MetadataIQ automates multi-modal, time-coded metadata and feeds it into PAM, MAM, and NRCS systems you already use.

- A phased rollout that starts with clear use cases, pilots, and KPIs helps media teams prove value and scale with confidence.