AI is now in almost every step of the media chain.

It listens to live feeds, generates captions, flags problematic content, tags logos and faces, summarizes shows, and even helps decide which clips go to social first. That’s the promise of AI in media monitoring: faster insight from oceans of audio and video that humans can’t keep up with.

But there’s a more complicated question underneath:

If AI is helping us watch the media, who is watching the AI?

Ethical concerns around bias, privacy, and transparency aren’t abstract anymore. UNESCO’s Recommendation on the Ethics of AI explicitly warns that AI systems can embed and amplify existing inequalities if they aren’t appropriately designed and governed. And recent research on AI ethics keeps coming back to the same core themes: fairness, accountability, and transparency.

At Digital Nirvana, our Media Enrichment Solutions – captioning, transcription, subtitling, live captioning, translation, Subs & Dubs, and more – all leverage AI plus human expertise to make media more accessible and searchable. That also means we have to be very intentional about how AI is used in monitoring and analysis.

This article walks through:

- What we actually mean by “AI in media monitoring”

- Where the ethical risks really sit (bias, privacy, transparency)

- Practical safeguards you can build into your workflows

- How Digital Nirvana’s Media Enrichment Solutions support responsible AI use

What do we mean by “AI in media monitoring”?

When people hear “media monitoring,” they often think of social listening or PR analytics. In broadcast and streaming, it goes much deeper.

AI in media monitoring typically includes:

- Speech-to-text and transcription – Turning live and recorded audio into searchable transcripts for compliance, accessibility, and editorial search. Tools like Digital Nirvana’s transcription and live captioning services use ASR plus human review to maximize accuracy.

- Captioning and subtitling – Generating and checking closed captions and subtitles to meet accessibility regulations and localize content. Digital Nirvana combines advanced speech-to-text technology with expert captioners and subtitlers in 60+ languages.

- Content and ad monitoring – Using AI to detect ads, music, logos, topics, or policy violations across live channels and archives, often feeding into compliance, ad verification, and brand-safety workflows.

- Metadata tagging and summarization – Automatically tagging people, places, topics, and segments so content teams can find the right clip instantly, as described in Digital Nirvana’s AI metadata tagging and newsroom automation guides.

- Sentiment and trend analysis – For some use cases, AI also classifies tone (positive/negative/neutral), detects sensitive topics, or identifies how often certain viewpoints appear.

All of that is powerful. It also creates new responsibilities: you’re not just monitoring content; you’re now also managing the risks that come with AI systems interpreting that content.

Where the ethical stakes are highest

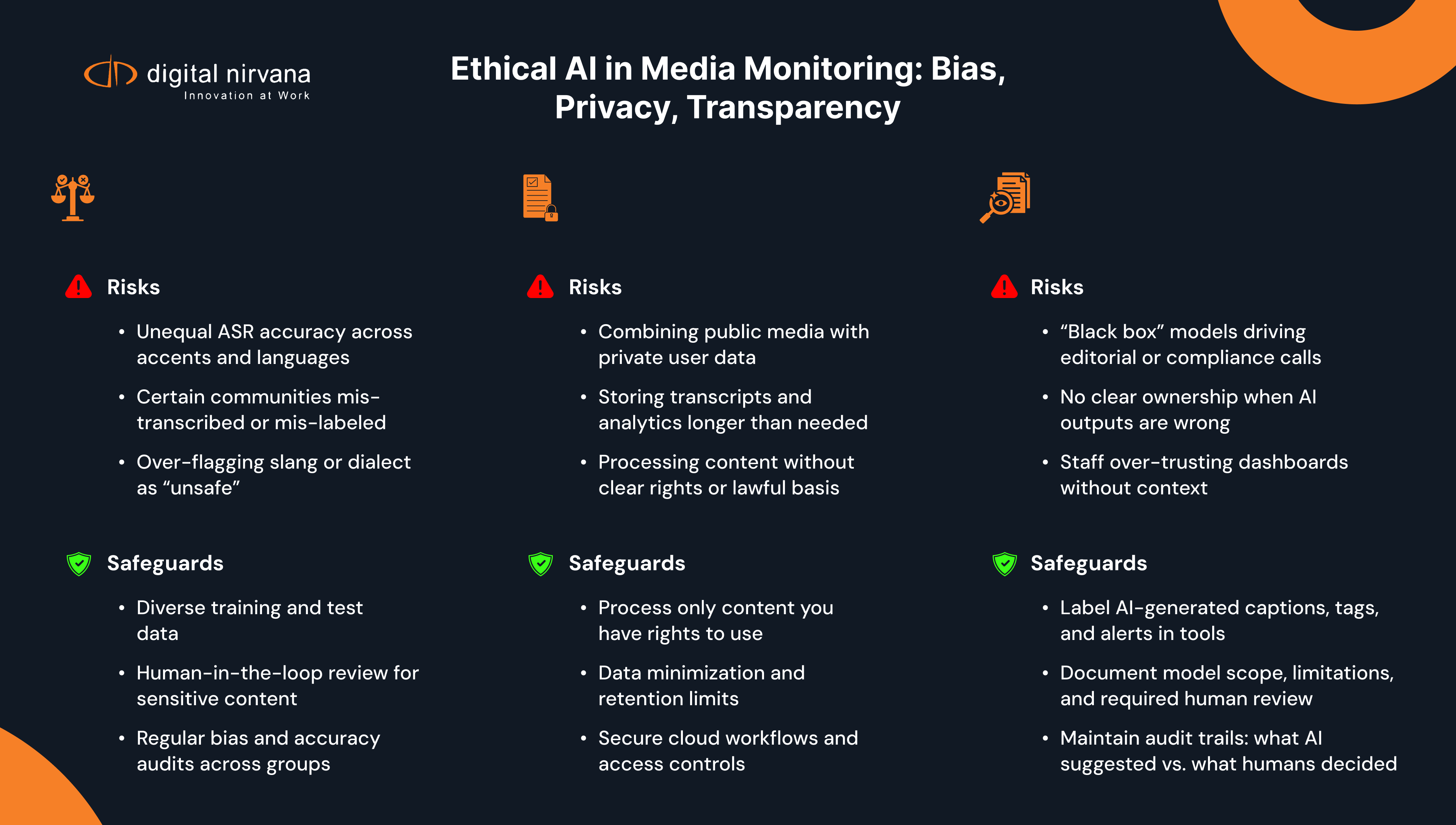

Across current research and policy frameworks, three ethical themes keep coming up for AI in media:

- Bias and fairness – Whose voices are mis-transcribed, mis-tagged, or under-represented?

- Privacy and consent – Who is being monitored, and what data are you allowed to keep and analyze?

- Transparency and accountability – Who understands how AI decisions are made, and who takes responsibility when they go wrong?

Let’s break those down in practical media workflow terms.

Bias: when AI quietly rewrites what we see and measure

Bias in AI isn’t just a conceptual problem; it shows up in very specific, media-centric ways.

1. Unequal accuracy across voices and languages

ASR and NLP systems tend to perform better on:

- “Standard” accents

- Heavily represented languages

- Clean audio conditions

They can struggle with:

- Regional or minority accents and dialects

- Code-switching and multilingual speech

- Noisy field reports, call-ins, or user-generated audio

Studies and regulators have warned that AI systems trained on non-diverse data can exacerbate existing inequalities – including differences in how accurately different groups are transcribed and analyzed.

In a media monitoring context, that might mean:

- Protesters or callers from specific communities are consistently misquoted

- Coverage in under-resourced languages is harder to search and verify

- Automated topic or sentiment metrics skew toward the speech patterns that are easiest to recognize
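To turn “unequal accuracy” into something you can track, one option is to compute word error rate (WER) separately for each speaker group in a test set. The sketch below is a minimal illustration, assuming you already have human-verified reference transcripts tagged with a group label such as accent or language; the function names and group labels are hypothetical and not part of any Digital Nirvana product.

```python
from collections import defaultdict


def word_errors(reference: str, hypothesis: str) -> tuple[int, int]:
    """Return (word-level edit distance, number of reference words)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # Levenshtein distance over words: at the start of row i, prev[j] holds
    # the cost of turning the first i-1 reference words into the first j
    # hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (word missing from ASR output)
                curr[j - 1] + 1,     # insertion (extra word in ASR output)
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[-1], len(ref)


def wer_by_group(samples):
    """samples: iterable of (group_label, reference_text, asr_text).

    Returns corpus-level WER per group: total word errors divided by
    total reference words, so clips are weighted by their length.
    """
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, ref, hyp in samples:
        e, n = word_errors(ref, hyp)
        errors[group] += e
        words[group] += n
    return {g: errors[g] / words[g] for g in words if words[g] > 0}
```

Summing errors across clips before dividing keeps a handful of very short clips from dominating a group’s score. A persistent gap between groups on comparable content is the signal to investigate.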

Digital Nirvana’s Media Enrichment Solutions explicitly lean on human reviewers and linguists to mitigate these issues – especially for live captioning, transcription, and translation in complex audio conditions.

2. Biased tagging, categorization, and “problematic content” flags

AI models used for content moderation, hate speech detection, or “brand safety” can inherit societal and dataset biases. Research across domains shows that systems trained on historical data often replicate harmful associations unless they’re actively corrected.

In media monitoring, biased tagging might:

- Label some political groups as “extremist” more frequently than others

- Over-flag specific slang or dialects as “toxic” or “unsafe”

- Mis-classify legitimate protest footage as “violence”

If editorial teams rely too heavily on these tags or dashboards, automation bias kicks in: people trust the machine more than their own judgment, even when the machine is wrong.

That’s why “AI-only” classification is risky for high-stakes editorial or compliance decisions. The safer design is AI-assisted, human-led.

Privacy: monitoring, but with boundaries

Media monitoring often involves recording and analyzing:

- Broadcast and cable channels

- OTT and FAST streams

- Online video and sometimes social clips

- In some cases, call-ins, town halls, or user submissions

From a pure compliance perspective, capturing aired content is straightforward – it’s already public. But once AI enters the mix, privacy questions grow:

- Are you combining public media with user or customer data to profile individuals?

- Are you storing derived data (transcripts, tags, sentiment) longer than necessary?

- Do your datasets contain sensitive attributes (political views, health information, etc.) that need special handling under privacy laws?
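The retention question can be made concrete with a simple policy check. This is a sketch under stated assumptions: the record types, the retention windows, and the stricter cap for sensitive data are placeholders, and the real numbers need to come from your own legal and privacy review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per type of derived data. Real values must
# come from your own legal and data-protection review, not from this sketch.
RETENTION = {
    "transcript": timedelta(days=365),
    "tags": timedelta(days=365),
    "sentiment": timedelta(days=90),
}
# Assumed stricter cap for records containing sensitive attributes
# (political views, health information, etc.).
SENSITIVE_MAX = timedelta(days=30)


@dataclass
class DerivedRecord:
    record_id: str
    kind: str
    created_at: datetime
    contains_sensitive: bool = False


def retention_violations(records, now=None):
    """Return IDs of records that exceed their retention window or have no rule."""
    now = now or datetime.now(timezone.utc)
    violations = []
    for rec in records:
        window = RETENTION.get(rec.kind)
        if window is None:
            # No approved retention rule for this data type: flag it for review.
            violations.append(rec.record_id)
            continue
        if rec.contains_sensitive:
            window = min(window, SENSITIVE_MAX)
        if now - rec.created_at > window:
            violations.append(rec.record_id)
    return violations
```

Treating an unknown data type as a violation is deliberate: derived data nobody has written a rule for is exactly the kind that tends to be kept forever.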

Regulators increasingly view AI and privacy together: opaque models trained on large, ungoverned datasets can easily cross ethical and legal lines.

Digital Nirvana’s stack is built for rights-respecting, enterprise use:

- Media Enrichment services are applied to content that customers already have rights to process (their own channels, licensed content, internal media).

- Cloud-based workflows emphasize secure transfer, controlled archival, and data security as a core part of the “Digital Nirvana advantage.”

The key principle: monitor media, not people, and when individuals are involved, apply explicit policies and safeguards.

Transparency: making AI a colleague, not a black box

Most AI ethics frameworks highlight transparency (or “explainability”) as non-negotiable.

In media monitoring, transparency has a few layers:

- Operational transparency

  - Teams should know where AI is used: captioning, tagging, summarizing, alerting, etc.

  - Dashboards should make clear which outputs are AI-generated and how confident the model is in each.

- Methodological transparency

  - At least a high-level description of training data sources, model limitations, and known edge cases.

  - Clear statements such as: “This classifier was not trained to make legal determinations; human review is mandatory for compliance decisions.”

- Governance transparency

  - Documented policies for how AI outputs are reviewed, overridden, or escalated.

  - Audit trails for major decisions that relied on AI-derived insights.
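Operational transparency can start with something as small as a provenance label attached to every output. The sketch below assumes each caption, transcript segment, or tag carries a source, an optional model identifier, and an optional confidence score; these field names are hypothetical, and not every model exposes a meaningful confidence value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EnrichmentOutput:
    text: str
    source: str                          # "ai" or "human"
    model: Optional[str] = None          # hypothetical model/version identifier
    confidence: Optional[float] = None   # 0.0-1.0, only if the model reports one
    reviewed_by: Optional[str] = None    # reviewer ID once a human has checked it


def display_label(out: EnrichmentOutput) -> str:
    """Build the provenance label shown next to an output in a dashboard."""
    if out.source == "human":
        return "Human-authored"
    parts = ["AI-generated"]
    if out.model:
        parts.append(f"model {out.model}")
    if out.confidence is not None:
        parts.append(f"confidence {out.confidence:.0%}")
    parts.append(f"reviewed by {out.reviewed_by}" if out.reviewed_by else "not yet reviewed")
    return ", ".join(parts)
```

For example, an unreviewed ASR segment would render as “AI-generated, model asr-v2, confidence 82%, not yet reviewed”, which tells an editor at a glance how much weight to give it.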

Research on AI transparency in regulated sectors shows that trust collapses when people feel AI is a black box that can’t be questioned or corrected.

For media organizations, that’s especially dangerous: if AI-driven monitoring quietly shapes editorial or compliance decisions, you want a clear record of what the system said, what humans decided, and why.
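A minimal way to keep that record is an append-only log with one JSON line per decision. This sketch assumes the AI output arrives as a dictionary with a "label" key; the field names and the rule that overrides must carry a rationale are illustrative choices, not a prescribed schema.

```python
import json
from datetime import datetime, timezone


def make_audit_line(asset_id, ai_output, human_decision, reviewer, rationale):
    """Serialize one audit entry: what the AI said, what a human decided, and why.

    ai_output is assumed to be a dict with at least a "label" key.
    """
    overrode = human_decision != ai_output.get("label")
    if overrode and not rationale.strip():
        # Overrides are exactly the cases investigators will ask about later.
        raise ValueError("A rationale is required when overriding the AI output")
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "asset_id": asset_id,
        "ai_output": ai_output,
        "human_decision": human_decision,
        "overrode_ai": overrode,
        "reviewer": reviewer,
        "rationale": rationale,
    }
    return json.dumps(entry, ensure_ascii=False) + "\n"


def append_audit_line(path, line):
    """Append-only write; a production system might use write-once storage instead."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line)
```

Storing the full AI output (including any confidence score) alongside the human decision is what lets you answer “what did the system say?” months later, even after the model has been updated.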

Designing an ethical “AI in media monitoring” program

Here’s a pragmatic checklist you can use internally.

1. Start with a risk map

List where AI is already in your media monitoring and enrichment workflows:

- Captioning & transcription

- Translation, subtitling, dubbing

- Content classification & policy checks

- Ad & logo detection

- Metadata tagging & summarization

For each use case, ask:

- What’s the worst harm if the AI is wrong?

- Who is affected (viewers, advertisers, vulnerable groups)?

- Is a human currently reviewing or is this fully automated?

High-impact areas (compliance, policy violations, politically sensitive topics) should get extra scrutiny and mandatory human oversight.
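The risk map itself can live in a spreadsheet, but keeping it as structured data makes it easy to check automatically. In this sketch the three-level impact scale and the example entries are assumptions for illustration; the query simply surfaces high-impact use cases that have no human reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class UseCase:
    name: str
    worst_harm: str
    affected: list[str] = field(default_factory=list)
    impact: str = "low"        # assumed scale: "low", "medium", or "high"
    human_review: bool = False


def needs_attention(inventory):
    """High-impact use cases that currently run without human review."""
    return [u.name for u in inventory if u.impact == "high" and not u.human_review]


# Illustrative entries only; fill these in from your own workflow audit.
inventory = [
    UseCase("Captioning & transcription", "Speaker misquoted on air",
            ["viewers"], "medium", True),
    UseCase("Content classification & policy checks",
            "Protest footage mislabeled as violence",
            ["viewers", "vulnerable groups"], "high", False),
    UseCase("Ad & logo detection", "Ad run not verified",
            ["advertisers"], "medium", False),
]
```

Here `needs_attention(inventory)` would return only the policy-check use case, which is where mandatory human oversight should be added first.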

2. Pair AI with clear human roles

Define explicitly:

- What AI does automatically (first-pass transcripts, draft tags, suggestions)

- What humans must do (final checks on captions; review for sensitive content; editorial calls)

- When humans are allowed – or required – to disagree with the machine
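Writing the role split down as configuration makes it harder for a workflow to drift into “AI-only” by accident. The task names and the three ownership levels below are hypothetical; the one design choice worth copying is that any task not explicitly listed defaults to human ownership.

```python
from enum import Enum


class Owner(Enum):
    AI_AUTO = "ai_auto"                # AI output can move on without sign-off
    AI_DRAFT_HUMAN_FINAL = "ai_draft"  # AI drafts, a human must approve
    HUMAN_ONLY = "human_only"          # the decision itself must come from a person


# Hypothetical task names; replace with the steps in your own workflow.
ROLES = {
    "first_pass_transcript": Owner.AI_AUTO,
    "draft_tags": Owner.AI_AUTO,
    "broadcast_captions": Owner.AI_DRAFT_HUMAN_FINAL,
    "sensitive_content_review": Owner.AI_DRAFT_HUMAN_FINAL,
    "editorial_call": Owner.HUMAN_ONLY,
}


def can_proceed(task: str, ai_produced: bool, human_signed_off: bool) -> bool:
    """Check whether a step's output may move to the next stage."""
    owner = ROLES.get(task, Owner.HUMAN_ONLY)  # unmapped tasks default to humans
    if owner is Owner.AI_AUTO:
        return True  # humans may still override this output later
    if owner is Owner.AI_DRAFT_HUMAN_FINAL:
        return human_signed_off
    # HUMAN_ONLY: the decision must be made by a person, not just approved.
    return human_signed_off and not ai_produced
```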

Digital Nirvana’s Media Enrichment Solutions are deliberately built as AI + human workflows: advanced ASR and ML to scale, with expert captioners, translators, and QC teams to protect nuance and fairness.

3. Test for bias and uneven performance

Regularly spot-check:

- Transcription accuracy across accents, languages, and genders

- Tagging behavior on different political viewpoints or communities

- How often specific groups’ content gets flagged as “problematic” compared with others
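For the flagging check, one simple approach is to compare each group’s flag rate against a reference group. The sketch assumes you have a sample of items labeled by group and by whether the classifier flagged them; the 1.25 ratio is an assumed starting threshold, not an established standard.

```python
from collections import Counter


def flag_rates(items):
    """items: iterable of (group_label, was_flagged). Returns flag rate per group."""
    totals, flagged = Counter(), Counter()
    for group, was_flagged in items:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def groups_to_review(rates, reference_group, max_ratio=1.25):
    """Groups flagged more than max_ratio times as often as the reference group.

    max_ratio=1.25 is an assumed starting point, not an established standard.
    """
    base = rates[reference_group]
    review = []
    for group, rate in rates.items():
        if group == reference_group:
            continue
        if base == 0:
            disparate = rate > 0  # any flags at all vs. none for the reference
        else:
            disparate = rate / base > max_ratio
        if disparate:
            review.append(group)
    return sorted(review)
```

A higher flag rate does not prove bias on its own, since the sampled content may genuinely differ between groups; it tells you where a human should look first.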

Where you find issues:

- Adjust data and thresholds

- Update documentation

- Route those scenarios to human review by default
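Routing known weak scenarios to people can be expressed as a small policy object. The cohort labels, high-stakes categories, and the 0.9 confidence floor below are assumptions; in practice they would be populated from the spot-checks described in this step.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewPolicy:
    # Cohorts where bias testing showed weaker performance (hypothetical labels).
    weak_cohorts: set[str] = field(default_factory=set)
    # Categories that always go to a person, whatever the model's confidence.
    high_stakes: set[str] = field(
        default_factory=lambda: {"policy_violation", "extremism", "violence"}
    )
    # Assumed confidence floor; tune it from your own audit results.
    min_confidence: float = 0.9

    def needs_human(self, cohort: str, category: str, confidence: float) -> bool:
        if cohort in self.weak_cohorts:
            return True  # known weak scenario: human review by default
        if category in self.high_stakes:
            return True
        return confidence < self.min_confidence
```

Checking the cohort and category before the confidence score matters: a model can be confidently wrong on exactly the voices it was never trained on.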

This aligns with broader fairness-aware AI practices highlighted in recent research: testing, auditing, and iterating to reduce unfair outcomes.

4. Bake transparency into your tools and training

- Label AI-generated outputs clearly in your UIs.

- Train editorial, compliance, and operations teams on how the AI works and where it fails.

- Provide basic documentation that non-technical staff can actually understand.

The goal isn’t to turn everyone into a data scientist – it’s to avoid “mystical AI.” People should feel comfortable questioning the tool.

How Digital Nirvana’s Media Enrichment Solutions support ethical AI

Digital Nirvana’s Media Enrichment Solutions sit at the intersection of AI and media ethics: they use advanced AI to transform audio and video into transcripts, captions, subtitles, translations, and localized audio – while making design choices that prioritize responsible AI use.

1. Human-in-the-loop by design

- Captioning & live captioning pair ASR with expert captioners and reviewers.

- Skilled linguists and subject-matter specialists support transcription & translation.

- Subs & Dubs uses AI speech technology plus human QC for natural, culturally appropriate dubs.

This reduces the risk that biased or error-prone AI output goes straight to air without human correction.

2. Rights-respecting enrichment

Media enrichment is applied to content that customers already have rights to use – their own broadcasts, licensed media, corporate content, etc. The focus is on accessibility and searchability, not on profiling individuals.

3. Security and governance baked in

Digital Nirvana emphasizes data security, reliability, and cloud best practices across its offerings: secure archival, controlled access, and enterprise-grade operations for broadcast and media clients.

That’s critical when AI is generating and storing rich, derived data at scale, such as transcripts, translations, and metadata.

4. Transparency and alignment with customer policies

Because Digital Nirvana works with broadcasters, streamers, and enterprises that operate in heavily regulated environments, the workflows are designed to:

- Integrate with existing compliance and editorial standards

- Support auditable logs and reproducible processes

- Be configurable to align with each customer’s risk appetite and governance model

In short, the tools are not just “AI add-ons.” They are meant to sit inside a policy-driven, human-led media operation.

FAQs

“AI in media monitoring” usually refers to systems that listen to, watch, and analyze media—TV, radio, streams, and online video—to automatically generate transcripts and captions, assign tags for people, brands, topics, and events, trigger alerts for policy violations or technical issues, and produce summaries and reports. Digital Nirvana’s Media Enrichment Solutions focus specifically on the accessibility and enrichment layer, such as transcripts, captions, translations, and subs/dubs, which often serve as the foundation for broader monitoring and analysis stacks.

AI models learn from the data they’re trained on. If that data over-represents certain accents or languages, reflects historical stereotypes or imbalances, or contains skewed examples of what counts as “good” versus “bad” content, the AI can mis-transcribe, mis-tag, or over-flag particular voices and topics. Researchers and regulators have repeatedly warned that this can reinforce racism, sexism, and other forms of discrimination if it isn’t actively addressed. That’s why diverse training data, human review, and routine bias testing are essential components of any responsible AI media monitoring strategy.

When AI is used to create captions and transcripts for broadcast or published content, it generally enhances material that is already public. Privacy risks increase when media is combined with private or sensitive data (such as user accounts or medical information), when detailed transcripts and analytics are stored longer than necessary, or when you process content where individuals never reasonably expected to be analyzed—for example, internal calls or specific user submissions. The best practice is to have clear data-protection policies, limit data retention, and only apply AI to content you have the rights and a lawful basis to process.

To prevent AI from silently steering editorial outcomes, it should be treated as decision support rather than a decision maker. Human review should be required before acting on AI-generated tags, alerts, and summaries for high-impact decisions. Teams should be trained to understand where the models perform well and where they are likely to fail, and staff should be encouraged to question and challenge AI outputs instead of assuming they are always correct. This helps counter “automation bias,” in which people defer to machine recommendations even when those recommendations clash with their own judgment.

In practice, transparency means clearly labeling AI-generated captions, transcripts, and tags inside internal tools, and documenting in plain language what each AI service does, its limitations, and where human review is required. It also involves maintaining logs so the team can reconstruct “what the AI said” when investigating problems or responding to complaints. You don’t need to publish your source code, but you do need enough operational transparency that internal stakeholders and regulators can understand how the system works at a high level.

Digital Nirvana addresses ethics through several key practices: hybrid AI-plus-human workflows for captioning, transcription, subtitling, and dubbing; a focus on processing content that customers already have rights to use, which reduces privacy risk; secure, cloud-based processing with strong emphasis on data security and reliability; and configurable workflows that allow AI services to align with each customer’s internal compliance and editorial standards. If you’re designing an ethical AI framework for media monitoring, these are precisely the levers you’ll want to be able to control.

You don’t have to solve “AI ethics” all at once. A realistic starting point is to inventory where AI already operates in your media enrichment and monitoring flows—such as captions, transcripts, tags, and alerts—and then prioritize high-impact areas like compliance, policy checks, and politically sensitive content for human-in-the-loop review. From there, you can pilot clear policies with one product or channel and gradually roll them out across the organization. As you expand, partners like Digital Nirvana can help align Media Enrichment Solutions with your governance model so that accessibility, speed, and ethics advance together rather than in conflict.