The world’s biggest platforms are quietly proving a point. Dubbing is no longer a nice-to-have; it is how you reach real audiences across languages.

Streaming giants are experimenting with AI dubbing to localize catalogs at speed, while YouTube and other platforms are rolling out multi-language audio powered by AI that can mimic a creator’s voice. At the same time, localization studios and broadcasters know that one wrong line, one flat performance, or one cultural misstep can damage a brand in a new market.

That tension sits at the heart of AI dubbing services for broadcasters. Automation is now good enough to be part of the core workflow, yet humans still decide whether a dub feels authentic, safe, and on brand.

In this article, we look at where AI dubbing services and AI voiceover for broadcasters genuinely work, where human talent and editorial control remain essential, and how Digital Nirvana’s Subs N Dubs solution helps you build a hybrid model that respects both speed and quality.

What AI Dubbing Services Actually Do For Broadcasters

AI dubbing services use a set of technologies to turn an original audio track into localized versions in multiple languages. At a high level, they combine:

- Automatic speech recognition to transcribe the original dialogue

- Machine translation to convert the script into the target languages

- Text-to-speech or neural voice synthesis to generate new voices

- Timing and lip sync alignment so the localized audio matches the on-screen performance
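To make the last step concrete, here is a minimal Python sketch of one common timing check: comparing the length of a synthesized line with the on-screen slot it has to fill. The `Segment` type, the `fit_segment` helper, and the rate limits of 0.9 and 1.15 are illustrative assumptions, not values from any particular dubbing service. Real systems also align lip movements at a much finer level, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One line of dialogue and its on-screen timing, in seconds (hypothetical type)."""
    start: float
    end: float
    dubbed_duration: float  # length of the synthesized target-language audio

    @property
    def slot(self) -> float:
        return self.end - self.start


def fit_segment(seg: Segment, max_speedup: float = 1.15,
                max_slowdown: float = 0.9) -> tuple[float, bool]:
    """Return (playback_rate, needs_review) for one dubbed line.

    A rate above 1 means the dub must play faster to fit the original slot.
    Rates outside [max_slowdown, max_speedup] are clamped and flagged, because
    heavy time-stretching tends to sound unnatural. A linguist would normally
    rewrite the line instead.
    """
    if seg.slot <= 0:
        raise ValueError("segment end must be after start")
    rate = seg.dubbed_duration / seg.slot
    if rate > max_speedup:
        return max_speedup, True
    if rate < max_slowdown:
        return max_slowdown, True
    return rate, False
```

In this design, flagged lines are routed back to a human adapter rather than being forced to fit. That mirrors the hybrid approach discussed later in this article.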

For broadcasters and streamers, that translates into some clear advantages:

- Much faster turnaround than traditional dubbing

- Lower per-hour costs for large catalogs

- Easier testing of new languages and markets

Vendors across the localization space now highlight AI dubbing as a way to speed up audio localization, reduce cost, and scale multilingual delivery without adding equivalent studio capacity.

However, “AI dubbing services” can encompass a wide range of approaches, from self-service tools for creators to fully managed workflows with human directors and linguists. That difference is where “when automation works” and “when humans still matter” really come into play.

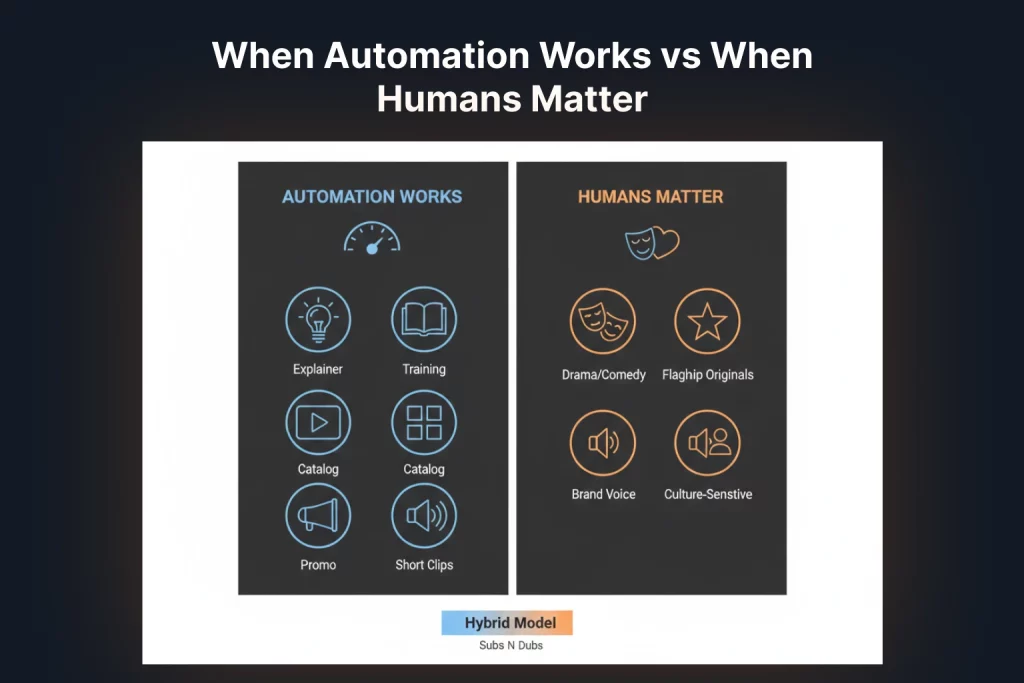

Where Automation Works: High-Volume, Low-Risk Localization

AI dubbing is at its best where the content and the requirements are forgiving. Typical sweet spots for automation include:

- Informational and factual content, such as explainers, how-to programs, or training

- Evergreen long tail titles where budgets do not justify full traditional dubbing

- Large archives that need basic localization to be discoverable and watchable

- Short-form digital content, such as clips, social videos, and promos, without heavy drama

In these scenarios, AI dubbing services can:

- Turn around multiple languages in hours rather than weeks

- Maintain consistent pronunciation of brands and key terms, once configured

- Help broadcasters and platforms test demand in new markets without full up-front investment

Case studies from AI dubbing providers show major gains in reach and viewership when content is made available in viewers’ native languages, even when the dub is produced by AI with human review rather than entirely traditional workflows.

For broadcasters, this automation works especially well when:

- The catalog is extensive, and only a subset has historically been localized

- You need to support more languages for OTT and FAST than you can with studio time alone

- You can accept a slightly more neutral performance in return for coverage and speed

When Humans Still Matter: Emotion, Culture, And Brand Voice

AI voices have improved significantly, yet they still struggle with qualities that make great dubbing memorable. Studies and industry commentary consistently highlight limitations in emotional nuance, comedic timing, and subtle cultural references.

Humans still matter when:

- The content is flagship, drama-heavy, or comedy-driven

  Long-form scripted series, reality formats with strong personalities, and prestige documentaries rely on performances that feel lived in, not just intelligible.

- Cultural adaptation is as important as translation

  Local writers often need to rework jokes, idioms, and references so they land in the target language. That requires human judgment and, usually, collaboration with voice talent.

- Brand and talent relationships are at stake

  For on-air talent with established personas, or for premium brand partnerships, synthetic voices may raise contractual, creative, or reputational questions that still need to be worked through.

- You are operating under tight broadcast standards

  Broadcasters must consider loudness, mix quality, regulatory rules, and union or guild expectations that AI voices alone do not address.

Thoughtful vendors acknowledge this, recommending hybrid AI dubbing services in which AI handles the heavy lifting, while professional linguists and voice talent correct, direct, or replace segments that require a human touch.

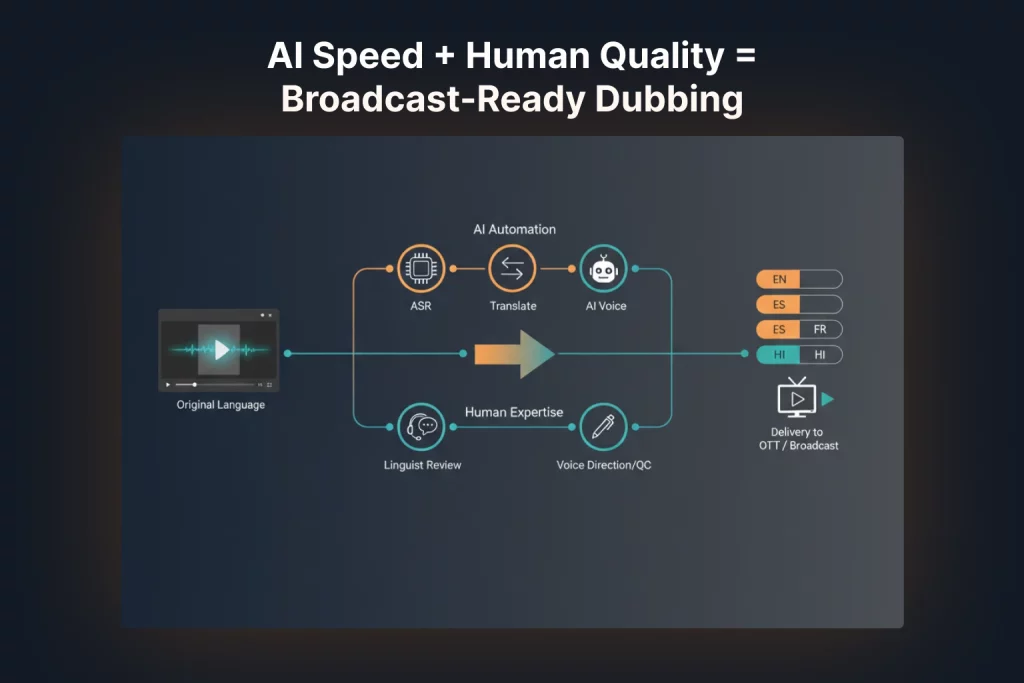

Designing A Hybrid AI Dubbing Workflow For Broadcast

A hybrid AI dubbing workflow is usually what “works in the real world” for broadcasters. Instead of choosing between “all AI” and “all human”, you tune the mix based on content type and risk profile.

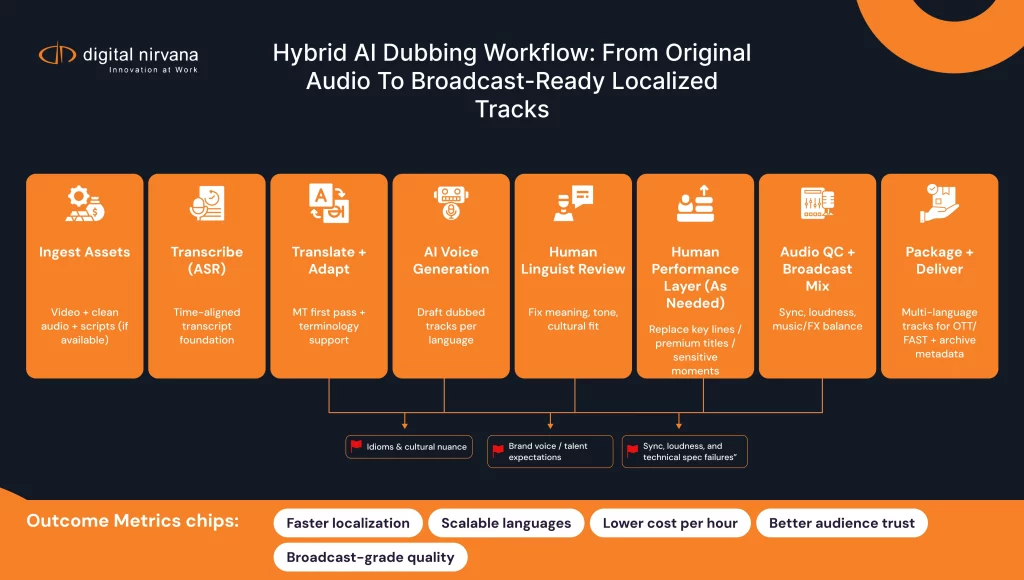

A typical hybrid flow looks like this:

- Script and asset preparation

  - Original scripts, audio, and reference materials are ingested

  - Terminology lists and brand names are added for consistent pronunciation

- AI first pass

  - AI generates translated scripts and a dubbed audio draft

  - AI voiceover covers multiple languages quickly

- Human linguistic review

  - Native linguists check translation accuracy, tone, and cultural fit

  - Lines are adapted where literal translations would sound unnatural

- Human performance and QC where needed

  - For premium titles, human actors re-record key roles or entire tracks

  - Audio engineers and QC teams review sync, mix, and technical quality

- Packaging and delivery

  - Final mixes are delivered per platform, format, and loudness specifications

  - Assets are archived with dubbing metadata for future reuse
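The "tune the mix" idea can be expressed as a small planning function that decides which of the stages above a title goes through. This Python sketch assumes three hypothetical content tiers and made-up stage names. It is not an API of any product; it only shows how the same AI first pass can feed different levels of human involvement.

```python
from enum import Enum


class Tier(Enum):
    PREMIUM = "premium"        # flagship originals, drama, comedy
    CATALOG = "catalog"        # long-tail library titles
    SHORT_FORM = "short_form"  # clips, promos, social videos


# Stages every title runs through; names are illustrative only.
BASE_STAGES = ["prepare_assets", "ai_translate", "ai_voice_draft"]


def plan_workflow(tier: Tier) -> list[str]:
    """Return the ordered stages a title goes through for a given tier."""
    stages = list(BASE_STAGES)  # copy so the shared list is never mutated
    if tier in (Tier.PREMIUM, Tier.CATALOG):
        stages.append("linguist_review")      # every line checked by a native linguist
    else:
        stages.append("linguist_spot_check")  # sampled review only
    if tier is Tier.PREMIUM:
        stages.append("human_rerecord_key_roles")
    stages += ["audio_qc", "package_and_deliver", "archive_metadata"]
    return stages
```

For example, `plan_workflow(Tier.SHORT_FORM)` skips the re-recording stage and replaces full linguistic review with spot checks. Premium titles keep both.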

This kind of workflow acknowledges the efficiency of automation, while keeping humans where they add the most value. It also mirrors the hybrid AI approaches now used by major streaming platforms that combine AI with local professionals to maintain quality and cultural appropriateness.

AI Voiceover For Broadcasters: Promos, Continuity, And Beyond

AI voiceover for broadcasters is a specific and fast-growing use of AI dubbing technology. Instead of fully replacing all dialogue in a program, you use AI-generated voices for:

- Channel continuity announcements in multiple languages

- Theming and branding stings that require frequent updates

- Simple promos and menu voiceovers for VOD and FAST channels

- Internal and B2B explainers, training content, or product demos

Here, the trade-off often favors automation:

- Scripts change frequently and must be turned around quickly

- Emotional nuance is useful, yet not as critical as in drama

- Cost and speed matter more than unique vocal performance

Even in these cases, broadcasters benefit from a central partner who can standardize voice choices, manage pronunciations, and ensure that AI voiceover outputs conform to house style and technical requirements.
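One common way to enforce pronunciations in AI voiceover is to render a house lexicon into SSML, the W3C markup that many text-to-speech engines accept. The sketch below assumes a hypothetical `HOUSE_LEXICON`. It wraps matching terms in the standard SSML `<sub alias="...">` element, which tells the voice how to say them. Whether a given engine honors `<sub>`, or prefers `<phoneme>` or its own lexicon files, depends on the vendor, so treat this as illustrative.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical house pronunciation guide: written form -> spoken form.
HOUSE_LEXICON = {
    "Subs N Dubs": "subs and dubs",
    "VOD": "vee oh dee",
}


def to_ssml(script: str, lexicon: dict[str, str], lang: str = "en-US") -> str:
    """Wrap an announcement script in SSML, tagging lexicon terms with <sub alias>."""
    out: list[str] = []
    pos = 0
    if lexicon:
        # Longest terms first, so a longer entry wins over a shorter overlapping one.
        terms = sorted(lexicon, key=len, reverse=True)
        # Word boundaries stop "VOD" from matching inside "VODKA".
        pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b")
        for m in pattern.finditer(script):
            out.append(escape(script[pos:m.start()]))  # plain text, XML-escaped
            term = m.group(1)
            out.append(f"<sub alias={quoteattr(lexicon[term])}>{escape(term)}</sub>")
            pos = m.end()
    out.append(escape(script[pos:]))
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>{''.join(out)}</speak>"
    )
```

Keeping the lexicon in one place means every continuity or promo script gets the same pronunciations, whichever writer produced it. Escaping the surrounding text keeps characters such as `&` from breaking the markup.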

Risk, Compliance, And Brand Safety In AI Dubbing

As AI dubbing services mature, questions about risk and governance are becoming more prominent. Broadcasters need to think about:

- Accuracy and misrepresentation

  If an AI dub misstates a fact, mispronounces a name, or mistranslates sensitive content, who is accountable?

- Bias and cultural insensitivity

  AI trained on biased data may produce problematic outputs that require human review, especially in news, sports, and factual content.

- Talent rights and consent

  Using AI voices that mimic existing talent or reusing voices across brands and markets raises emerging legal and ethical questions.

- Viewer trust

  Viewers may react differently if they discover that a voice is synthetic, especially in news or serious content.

Human-in-the-loop workflows, clear editorial guidelines, and vendors who treat AI as one tool among many, rather than a black box, are essential to managing these risks. Digital Nirvana has publicly emphasized human oversight as a design choice in its AI-driven media services, including Subs N Dubs.
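In practice, human-in-the-loop often starts with simple, explainable rules that decide which dubbed lines a person must check. This Python sketch assumes that the translation engine reports a per-segment confidence score between 0 and 1, and that an editorial team maintains a watchlist of names and sensitive terms. Both are hypothetical inputs, and the 0.85 threshold is an illustrative choice. The genres mirror the news, sports, and factual content called out above.

```python
from dataclasses import dataclass


@dataclass
class DubSegment:
    text: str             # translated line as it will be voiced
    mt_confidence: float  # 0..1, assumed to be reported by the translation engine
    genre: str            # e.g. "news", "sports", "entertainment"


SENSITIVE_GENRES = {"news", "sports", "factual"}


def review_reasons(seg: DubSegment, watchlist: set[str],
                   min_conf: float = 0.85) -> list[str]:
    """Return the reasons a segment needs human review (empty list = no flag)."""
    reasons = []
    if seg.mt_confidence < min_conf:
        reasons.append("low_confidence")
    if seg.genre in SENSITIVE_GENRES:
        reasons.append("sensitive_genre")
    # Simple case-insensitive substring match; a real system would need
    # proper tokenization and per-language handling.
    lowered = seg.text.lower()
    hits = sorted(t for t in watchlist if t.lower() in lowered)
    if hits:
        reasons.append("watchlist:" + ",".join(hits))
    return reasons
```

Returning reasons rather than a bare yes or no gives reviewers context and leaves an audit trail, which helps answer the accountability question above.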

How Subs N Dubs Supports Broadcast-Grade AI Dubbing

Digital Nirvana’s Subs N Dubs solution sits inside its broader media enrichment portfolio and is designed specifically to blend AI speed with human precision for subtitling and dubbing.

For broadcasters, that translates into several advantages:

- AI-powered subtitling and dubbing to handle scale and turnaround

- Human linguists and QC specialists to ensure natural, culturally appropriate dubs

- Support for multiple languages and content types, from broadcast shows to digital originals

- Integration with broader captioning, transcription, and translation workflows

Rather than offering a one-size-fits-all AI dubbing service, Subs N Dubs supports a hybrid model, where:

- Automation handles repetitive and high-volume localization

- Human experts review, correct, or fully replace tracks that require nuance

- Technical teams ensure mixes, levels, and formats align with broadcast requirements

This means broadcasters can use AI dubbing services confidently, knowing that the pipeline includes both machine intelligence and human judgment.

Implementation Blueprint For Broadcasters

To operationalize AI dubbing services and AI voiceover for broadcasters, a phased, use-case-driven approach is helpful.

- Define content tiers

  - Segment content into categories, such as premium originals, flagship sports, catalog shows, clips, and promos.

  - Decide which tiers can rely primarily on AI with review, and which require full or partial human dubbing.

- Choose languages and platforms

  - Prioritize languages based on audience data, advertiser demand, and strategic growth plans.

  - Align dubbing priorities with OTT, FAST, and social distribution strategies.

- Integrate a hybrid AI dubbing service

  - Connect your media pipelines to a solution like Subs N Dubs that delivers both AI and human localization.

  - Standardize terminology lists, pronunciation guides, and style rules across languages.

- Establish review and QC rules

  - Define which content is auto-approved with spot checks and which requires full human review before air.

  - Build QC criteria for audio quality, sync, translation accuracy, and cultural appropriateness.

- Connect dubbing outputs to archive and search

  - Store dubbing metadata alongside original and localized assets in your MAM.

  - Use subtitles and transcripts generated during the process to improve internal search and content reuse.

- Iterate based on audience and partner feedback

  - Monitor viewer responses to AI-dubbed vs human-dubbed content by market and genre.

  - Adjust the automation-to-human ratio as technology, expectations, and regulations evolve.
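The "auto-approved with spot checks" rule can be made deterministic, so that re-running a batch never changes which titles a reviewer sees. This Python sketch hashes the asset ID and language into a number between 0 and 1 and compares it with a per-tier sampling rate. The tier names and rates are illustrative assumptions, not recommendations, and a rate of 1.0 stands for full human review before air.

```python
import hashlib

# Illustrative share of localized versions sent to a human reviewer, per tier.
SPOT_CHECK_RATE = {"premium": 1.0, "catalog": 0.2, "clips": 0.05}


def needs_human_review(asset_id: str, language: str, tier: str) -> bool:
    """Decide, reproducibly, whether this asset/language pair gets human review.

    Raises KeyError for an unknown tier, so unclassified content is never
    silently auto-approved.
    """
    rate = SPOT_CHECK_RATE[tier]
    if rate >= 1.0:
        return True  # full review for this tier
    digest = hashlib.sha256(f"{asset_id}:{language}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < rate
```

Because the decision depends only on the asset, the language, and the tier, QC teams can predict their workload, and auditors can reproduce exactly which versions were checked.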

KPIs To Measure Your AI Dubbing Strategy

To understand whether AI dubbing services are delivering value, track KPIs in three clusters:

- Operational metrics

  - Turnaround time per hour of localized content

  - Cost per localized hour compared to fully traditional dubbing

  - Number of languages and territories supported per title

- Quality and compliance

  - Rejection or rework rate in QC due to dubbing issues

  - Incidents related to mistranslation, cultural missteps, or technical quality

  - Adherence to loudness and broadcast standards across localized tracks

- Audience and business impact

  - Growth in viewing hours from non-primary language markets

  - Performance of dubbed vs subtitled content by region and genre

  - Incremental revenue or advertiser demand tied to localized versions
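Several of the operational and quality KPIs above can be computed directly from job records. This Python sketch assumes a hypothetical `DubJob` record with measured integrated loudness already attached. The default target of -23 LUFS is the EBU R128 reference level (US broadcasters typically work to ATSC A/85 at -24 LKFS). The 1 LU tolerance is an illustrative assumption, so check it against your own delivery specifications.

```python
from dataclasses import dataclass


@dataclass
class DubJob:
    runtime_hours: float     # programme length that was localized
    turnaround_hours: float  # wall-clock time from ingest to delivery
    cost: float              # total spend for this job, in a single currency
    qc_rejected: bool        # sent back at least once because of dubbing issues
    loudness_lufs: float     # measured integrated loudness of the delivered mix


def dubbing_kpis(jobs: list[DubJob], traditional_cost_per_hour: float,
                 target_lufs: float = -23.0, tolerance_lu: float = 1.0) -> dict[str, float]:
    """Summarize operational and quality KPIs over a batch of dubbing jobs."""
    hours = sum(j.runtime_hours for j in jobs)
    if not jobs or hours <= 0:
        raise ValueError("need at least one job with a positive runtime")
    cost_per_hour = sum(j.cost for j in jobs) / hours
    in_spec = sum(abs(j.loudness_lufs - target_lufs) <= tolerance_lu for j in jobs)
    return {
        "turnaround_hours_per_localized_hour": sum(j.turnaround_hours for j in jobs) / hours,
        "cost_per_localized_hour": cost_per_hour,
        "cost_vs_traditional": cost_per_hour / traditional_cost_per_hour,
        "qc_rework_rate": sum(j.qc_rejected for j in jobs) / len(jobs),
        "loudness_compliance": in_spec / len(jobs),
    }
```

Computing these KPIs separately for each content tier and language makes it easier to see where the automation-to-human ratio should shift.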

These metrics help you decide where automation is working, where more human oversight is needed, and where to invest next.

FAQs

What are AI dubbing services for broadcasters?

AI dubbing services for broadcasters use speech recognition, machine translation, and synthetic voices to generate localized audio tracks in multiple languages, usually as part of a managed workflow. For professional use, they are often combined with human linguists and audio engineers to meet broadcast standards.

How is AI voiceover different from full AI dubbing?

AI voiceover typically covers shorter or simpler elements, such as promos, continuity announcements, and explainers, rather than replacing every line of dialogue in a program. It is a focused use of AI speech technology that can deliver speed and consistency where deep emotional performance is less critical.

Can AI dubbing fully replace human dubbing?

Technically, yes, but it is rarely advisable for broadcast-grade content. Industry experience and early deployments show that the most reliable approach is hybrid, where AI handles much of the work and humans review translations, performance, and cultural context, especially for high-visibility titles.

How does Digital Nirvana’s Subs N Dubs support AI dubbing?

Digital Nirvana’s Subs N Dubs solution provides AI-powered subtitling and dubbing with human-in-the-loop quality control and integrates with broader media enrichment services, such as transcription, captioning, and translation. That lets broadcasters use AI dubbing services at scale while still meeting quality, compliance, and brand expectations.

How should broadcasters get started with AI dubbing?

Most broadcasters begin with lower-risk content, such as catalog shows, factual programming, or digital-only series, in a small number of target languages. Working with a partner like Digital Nirvana, you can pilot hybrid AI dubbing services, refine quality thresholds, and expand once you have confidence in the process and the results.

Conclusion

AI dubbing services have moved from experimental to essential for broadcasters seeking to reach multilingual audiences without multiplying costs or production time. Automation can now create credible dubs and AI voiceovers for broadcasters at a speed and scale that traditional workflows cannot match.

Yet the decision is not “AI or humans”. It is about using AI where it delivers clear value, and keeping humans where performance, culture, and brand reputation depend on nuance. Hybrid models, where AI handles the heavy lifting and specialists review and refine the results, are already proving themselves across streaming, broadcast, and digital platforms.

Digital Nirvana’s Subs N Dubs offering is built around that reality. By blending AI speed with human precision and integrating dubbing with captioning, transcription, and translation under a unified media enrichment strategy, it gives broadcasters a practical path from experimentation to reliable, broadcast-grade AI dubbing.

Key takeaways:

- AI dubbing services are ideal for high-volume, lower-risk localization where speed and coverage matter most.

- Human expertise remains critical for emotionally rich, culturally sensitive, or high-profile content where performance and adaptation drive audience impact.

- Hybrid AI dubbing workflows that blend automation with human review are emerging as the broadcast-ready standard.

- AI voiceover for broadcasters is a powerful tool for promos, continuity, and informational content when governed by clear style and quality rules.

- Subs N Dubs from Digital Nirvana helps broadcasters operationalize AI dubbing and voiceover within a wider media enrichment strategy, so localization becomes a growth driver rather than a bottleneck.