AI content moderation anchors healthy communities, shields brands and helps platforms meet global regulations. The craft blends machine learning, natural language processing, computer vision and human insight to spot and remove risky posts at the speed of modern conversation.

Introduction to AI Content Moderation

Our connected world produces torrents of text, images and video every second. Manual review alone cannot keep pace. Automated systems catch toxic language, violent imagery and illegal transactions before they cause harm, while human reviewers resolve edge cases and refine the rules.

The Rise of Digital Content and Moderation Demands

Billions of uploads flood platforms daily, covering every language and culture. Without automation, policy teams drown in user reports, press inquiries and regulator deadlines. AI tools scan each asset in milliseconds to seconds, flagging problems so humans can step in where nuance matters.

Why Automation Matters in User-Generated Environments

The gap between upload and review is where reputations sink or soar. When a hateful post or scam listing slips through, trust erodes fast, advertisers flee and legal threats multiply. Automated filters slash response times, curb liability and protect brand partnerships without throttling creativity.

Striking the Balance Between Safety and Freedom of Expression

Healthy debate thrives on clear rules and fair enforcement. Good systems enforce standards yet leave room for disagreement, satire and cultural expression. Transparent policies, detailed notices and an accessible appeal path keep users informed and reduce accusations of censorship.

Understanding AI Content Moderation

A shared vocabulary smooths the road to adoption.

What Is AI Content Moderation?

AI moderation is the automated review of user uploads using algorithms that learn from labeled examples. Classifiers sort posts as acceptable or risky, then route uncertain items to human reviewers who apply context, policy and empathy to a final call.
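Here is a minimal sketch of that routing step. It assumes a classifier that returns a risk score between 0 and 1. The two thresholds and the `route` helper are hypothetical. Real values would come from tuning on labeled data.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values come from tuning on labeled data.
REMOVE_THRESHOLD = 0.90   # scores at or above this are removed automatically
APPROVE_THRESHOLD = 0.20  # scores below this are approved automatically


@dataclass
class Decision:
    post_id: str
    score: float
    action: str  # "remove", "approve" or "human_review"


def route(post_id: str, risk_score: float) -> Decision:
    """Map a classifier's risk score (0..1) to a moderation action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= REMOVE_THRESHOLD:
        action = "remove"
    elif risk_score < APPROVE_THRESHOLD:
        action = "approve"
    else:
        # The uncertain middle band goes to people who can weigh context.
        action = "human_review"
    return Decision(post_id, risk_score, action)


if __name__ == "__main__":
    for pid, score in [("a", 0.97), ("b", 0.05), ("c", 0.55)]:
        print(pid, route(pid, score).action)
```

Widening the middle band sends more posts to people and fewer to automation. Narrowing it does the reverse.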

How AI Differs From Manual Moderation

Humans excel at nuance, sarcasm and cultural references but fatigue quickly. Algorithms stay vigilant 24 hours a day, handling volume while humans handle the gray areas. Together they form a complementary defense that scales with traffic spikes and global events.

The Role of Machine Learning and NLP in Content Review

Text models train on massive corpora, learning sentiment, detecting slurs and scoring toxicity. Feedback loops retrain these models regularly so they recognize new slang and meme language while ignoring benign pop-culture phrases.

Core Mechanisms Behind AI Moderation

Natural Language Processing for Text Analysis

NLP parses syntax, semantics and sentiment across dozens of languages. Transformer architectures map context to reveal policy violations masked by clever wordplay. Our recent breakdown of automated metadata generation shows how richer tagging feeds these language models and sharpens detection accuracy.

Computer Vision for Image and Video Moderation

Convolutional neural networks scan pixels for nudity, extremist symbols and graphic violence. Teams that plug the MetadataIQ engine into their pipelines gain frame-level context that lifts precision across varied scenes. Temporal models add sequence awareness so a video is judged on narrative, not isolated frames.

Audio Analysis and Transcription Tools

Automatic speech recognition converts spoken words to text. Sound classifiers pick up gunshots, hate slogans and self-harm cues in live streams, closing gaps in multimodal oversight and protecting viewers from traumatic material.

Real-Time Scanning and Flagging Systems

Low-latency pipelines run in memory and push alerts within seconds. Integrated monitoring suites capture live feeds, detect compliance breaches and surface violations without delaying broadcast schedules or user streams.

Types of AI Content Moderation

Pre-Moderation: Blocking Before Content Is Live

High-risk platforms intercept uploads and hold them until reviewers approve them. This safety buffer prevents prohibited content from reaching audiences, stops viral spread and reduces takedown headaches after the fact.

Post-Moderation: Reviewing After Publishing

Lower-risk forums publish instantly, then scrub out violations. Continuous AI scans minimize disruption while preserving real-time conversation. Review teams can still remove flagged posts quickly and transparently.

Reactive Moderation: Responding to User Reports

Community flags route questionable posts to triage teams that rely on AI suggestions. This hybrid flow leverages crowd wisdom for new abuse tactics and reassures users that reporting leads to tangible results.
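A priority queue is one way to combine crowd reports with AI suggestions. This sketch assumes a hypothetical scoring rule. The model's risk score dominates, and each report adds a small, capped boost so mass-reporting campaigns cannot push a post to the front on their own.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueueItem:
    sort_key: float                      # negated priority (heapq is a min-heap)
    post_id: str = field(compare=False)  # excluded from ordering


def priority(report_count: int, ai_score: float) -> float:
    """Hypothetical blend: AI risk score plus a capped bonus per user report."""
    return ai_score + 0.05 * min(report_count, 10)


class TriageQueue:
    def __init__(self) -> None:
        self._heap: list[QueueItem] = []

    def add(self, post_id: str, report_count: int, ai_score: float) -> None:
        heapq.heappush(self._heap, QueueItem(-priority(report_count, ai_score), post_id))

    def pop_next(self) -> str:
        """Return the post a reviewer should look at next."""
        return heapq.heappop(self._heap).post_id


queue = TriageQueue()
queue.add("p1", report_count=1, ai_score=0.40)  # priority about 0.45
queue.add("p2", report_count=8, ai_score=0.30)  # priority about 0.70
queue.add("p3", report_count=2, ai_score=0.85)  # priority about 0.95
print([queue.pop_next() for _ in range(3)])     # ['p3', 'p2', 'p1']
```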

Proactive Moderation: Identifying Violations Automatically

Always-on scanning patrols feeds, chats and comment sections before complaints surface. Shrinking exposure windows for hate speech, scams and extremist propaganda keeps brands safe and regulators satisfied.

Hybrid Moderation: Combining Automation With Human Judgment

Platforms rarely stick to one style. Dynamic workflows switch between pre- and post-moderation based on event-related risk, regional laws or traffic surges, keeping safety high without throttling engagement.
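The switching logic can be sketched as a small decision function. All inputs here are hypothetical placeholders: the region codes, the event names and the capacity figure. The sketch assumes regions whose rules require prior review always stay in pre-moderation. Event-driven caution relaxes only when uploads outrun review capacity.

```python
from enum import Enum


class Mode(Enum):
    PRE = "pre-moderation"    # hold uploads until they are cleared
    POST = "post-moderation"  # publish at once, scan continuously


# Hypothetical configuration for illustration.
STRICT_REGIONS = {"region-a"}                     # rules require prior review
HIGH_RISK_EVENTS = {"election", "breaking_news"}  # events that raise risk


def choose_mode(region: str, active_events: set[str],
                uploads_per_min: int, review_capacity_per_min: int) -> Mode:
    """Pick a moderation mode for new uploads from current conditions."""
    if region in STRICT_REGIONS:
        return Mode.PRE  # legal requirement: never relaxed under load
    if active_events & HIGH_RISK_EVENTS and uploads_per_min <= review_capacity_per_min:
        return Mode.PRE
    # Low risk, or a surge beyond review capacity: publish and scan proactively
    # rather than stalling every upload in a queue.
    return Mode.POST
```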

Benefits of AI Content Moderation

Scalability Across Platforms

Elastic compute clusters handle surges during global tournaments, elections or breaking news. This elasticity reduces downtime and maintains user confidence when volumes peak.

Faster Response to Harmful or Illegal Content

Real-time detection drops exposure from hours to seconds, limiting victim impact and headline risk. Fast action also weakens virality by clipping share chains before they explode.

Reduction in Operational Costs

Automation lowers headcount required for first-pass review, freeing talent for policy design, training data curation and wellness programs that fight moderator burnout.

Consistent Enforcement of Guidelines

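Models apply the same policy thresholds to every post. This avoids the inconsistency that creeps in when human reviewers tire, although models can still inherit bias from their training data. Consistency makes quarterly transparency reports easier to compile and helps platforms demonstrate even-handed enforcement to regulators and courts.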

Enhanced Brand Safety and Advertiser Trust

Advertisers pay premiums for clean neighborhoods. Robust moderation unlocks higher CPMs and longer contracts, powering revenue without compromising ethics.

Risks and Challenges

Misinterpretation of Cultural or Contextual Language

Models can flag harmless idioms if training data skews Western or urban. Routine linguistic audits and native-speaker reviews recalibrate thresholds and protect local expression.

AI Bias and Fairness Concerns

Imbalanced datasets bake bias into predictions. Balanced sampling, synthetic augmentation and third-party audits help decisions treat all demographics fairly and withstand regulatory scrutiny.

False Positives and Over-Censorship

Aggressive thresholds protect users but hurt creators when innocent posts disappear. Continuous tuning and a swift appeal mechanism restore balance without swinging the door wide open for abuse.
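This sketch makes the trade-off concrete. It counts false positives and false negatives at several thresholds on a tiny labeled sample. The scores and labels are made up for illustration.

```python
# Toy validation set of (model score, truly violating?) pairs; values are made up.
samples = [
    (0.95, True), (0.80, True), (0.65, False), (0.60, True),
    (0.40, False), (0.30, True), (0.20, False), (0.10, False),
]


def errors_at(threshold: float, data: list[tuple[float, bool]]) -> tuple[int, int]:
    """Count (false positives, false negatives) when removing scores >= threshold."""
    false_pos = sum(1 for score, violating in data if score >= threshold and not violating)
    false_neg = sum(1 for score, violating in data if score < threshold and violating)
    return false_pos, false_neg


for t in (0.25, 0.50, 0.75):
    fp, fn = errors_at(t, samples)
    print(f"threshold={t:.2f}  false_positives={fp}  false_negatives={fn}")
```

On this sample, raising the threshold from 0.25 to 0.75 moves the errors from two wrongly removed posts to two missed violations. This is why tuning has to be continuous rather than a one-time setting.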

Underreporting Harmful Content

Conversely, lenient models can miss sophisticated threats like doctored images or coded slurs. Cross-modal fusion and periodic retraining broaden detection so bad actors have fewer hiding spots.

Legal Compliance Across Jurisdictions

Privacy, speech and child-protection laws vary by region. Configurable policy modules let platforms honor local statutes without building one-off code for every country.
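A configurable policy module can be as simple as a global baseline merged with per-region overrides. The region codes, categories and actions below are hypothetical placeholders, not real legal requirements.

```python
# Hypothetical policy modules: one global baseline plus regional overrides.
BASELINE = {
    "hate_speech": "remove",
    "graphic_violence": "age_gate",
    "gambling_ads": "allow",
}
REGION_OVERRIDES = {
    "region-x": {"gambling_ads": "remove"},       # e.g. a local gambling ban
    "region-y": {"graphic_violence": "remove"},
}


def policy_for(region: str) -> dict[str, str]:
    """Merge the baseline with a region's overrides without mutating either."""
    policy = dict(BASELINE)
    policy.update(REGION_OVERRIDES.get(region, {}))
    return policy


def action_for(region: str, category: str) -> str:
    # Categories missing from the policy go to a human rather than auto-allow.
    return policy_for(region).get(category, "human_review")
```

Keeping overrides as data rather than code means a new jurisdiction is one more dictionary entry instead of a new branch in the pipeline.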

Ethical Considerations

Transparency in Moderation Processes

Published guidelines, strike systems and clear explanations build legitimacy. Users who know the rules are less likely to feel silenced unfairly.

Ensuring Accountability in Algorithmic Decisions

Humans must remain in the loop for appeals, overrides and edge-case reviews. Detailed logs show regulators how each verdict was reached.

Managing User Appeal and Dispute Mechanisms

Simple appeal buttons and timely human review soften the sting of false takedowns. Public statistics on reversal rates foster confidence that the system works.

Privacy Concerns With Automated Review

Platforms encrypt data at rest and in transit, purge personal info after analysis and follow best practices from the Partnership on AI to safeguard civil liberties.
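Purging personal information can start before anything is stored. Here is a minimal sketch that assumes simple regular expressions for email addresses and phone numbers. Real PII detection needs much broader coverage, such as names, addresses and ID numbers, and is often model-based.

```python
import re

# Simplified patterns for illustration only; they miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone numbers with placeholders before logging."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact me at jane.doe@example.com or +1 555-123-4567"))
```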

Use Cases of AI Moderation

Social Media Platforms

Networks rely on AI to filter harassment, organized disinformation and non-consensual imagery across billions of posts daily, keeping public squares safer and advertisers happier.

Online Marketplaces and Product Listings

Computer vision flags counterfeit luxury goods, banned weapons and restricted pharmaceuticals before checkout, while NLP spots suspicious pricing and scam patterns.
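Price checks can complement the text signals. This sketch uses a plain statistical heuristic, not an NLP model. A listing is flagged when its price is an outlier against comparable genuine items, based on the modified z-score (Iglewicz and Hoaglin), which uses the median and median absolute deviation. The reference prices and the 3.5 cutoff are illustrative assumptions.

```python
from statistics import median


def suspicious_prices(listings: dict[str, float], reference_prices: list[float],
                      cutoff: float = 3.5) -> list[str]:
    """Flag listings priced far from comparable genuine items.

    The median and MAD are robust, so a few extreme prices in the
    reference set do not distort the baseline.
    """
    center = median(reference_prices)
    mad = median(abs(p - center) for p in reference_prices)
    if mad == 0:
        return []  # no spread to compare against
    flagged = []
    for listing_id, price in listings.items():
        modified_z = 0.6745 * (price - center) / mad
        if abs(modified_z) > cutoff:
            flagged.append(listing_id)
    return flagged


# Made-up reference prices for one handbag model, plus two new listings.
reference = [1200, 1150, 1300, 1250, 1180, 1220, 1275]
print(suspicious_prices({"L1": 1210, "L2": 150}, reference))  # ['L2']
```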

Video Sharing and Streaming Platforms

Audio and video fingerprinting identify copyright abuse, while visual classifiers and scene-change detection surface graphic violence in seconds, reducing expensive takedowns and legal claims.

Gaming and Metaverse Environments

Low-latency voice and chat filters block slurs before they hit other players, while sentiment analysis identifies grooming attempts and extremist recruiting in virtual hangouts.

Forums, Chat and User-Generated Content Sites

Lightweight API plug-ins give niche communities affordable oversight, preserving hobbyist jargon while blocking harassment that drives newcomers away.

Integrating AI Content Moderation Into Platforms

Assessing Platform Needs and Content Risk

Start with a threat map covering user demographics, regional laws, content formats and likely abuse vectors. This blueprint prevents buying features you will not use.

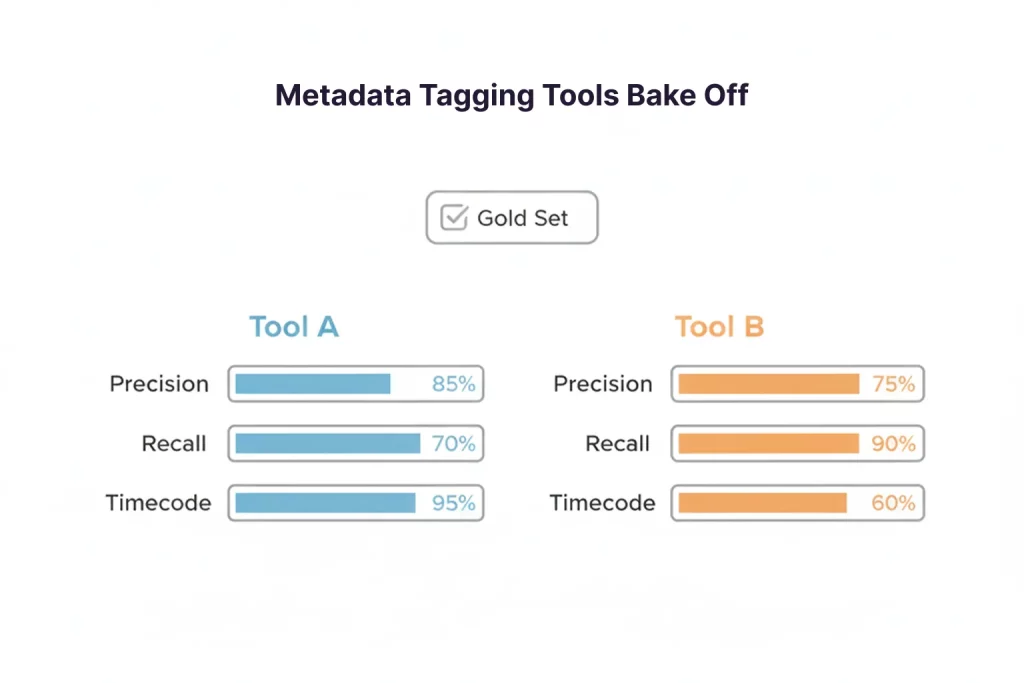

Choosing the Right AI Moderation Tools

Compare vendors on model accuracy, language support, inference latency, total cost and roadmap stability. Proof-of-concept pilots reveal hidden integration pains early.

APIs and Cloud-Based Integration Approaches

RESTful endpoints and WebSocket streams shorten deployment cycles. Serverless options scale with demand and minimize DevOps overhead for lean teams.

Defining Moderation Rules and Thresholds

Draft a policy matrix that ranks severity, assigns penalty tiers and specifies appeal timelines. Continuous data review keeps that matrix aligned with evolving community norms.
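A policy matrix maps naturally onto a small data structure. In this sketch every category, severity, penalty ladder and appeal window is a hypothetical example, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    category: str
    severity: int               # 1 (low) to 4 (critical)
    penalties: tuple[str, ...]  # escalating penalty tiers, indexed by prior strikes
    appeal_days: int            # window for the user to appeal


# Hypothetical matrix entries for illustration.
POLICY_MATRIX = {
    "spam": PolicyRule("spam", 1, ("warning", "24h_mute", "7d_suspension"), 14),
    "harassment": PolicyRule("harassment", 3, ("7d_suspension", "permanent_ban"), 30),
    "credible_threat": PolicyRule("credible_threat", 4, ("permanent_ban",), 30),
}


def penalty_for(category: str, prior_strikes: int) -> str:
    """Pick the penalty tier; repeat offenders climb until the last tier."""
    rule = POLICY_MATRIX[category]
    tier = min(prior_strikes, len(rule.penalties) - 1)
    return rule.penalties[tier]
```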

Human-in-the-Loop Moderation

When to Involve Human Reviewers

Satire, artistic nudity and political speech need cultural context. Flag complex cases for specialist teams that understand history, nuance and regional sensitivities.

Reducing Moderator Burnout With AI Support

Algorithms triage the most graphic or repetitive material, letting humans focus on nuanced calls. Wellness breaks, counseling and rotation schedules round out a sustainable program.

Building Trust Through Oversight

Dashboards with random sampling, quality-score targets and peer review keep error rates visible, so leadership can address problems before users notice.

Monitoring and Improving AI Systems

Our field report on AI-assisted content workflows shows feedback loops slashing error rates by double-digit points in six months.

Feedback Loops and Continuous Learning

Every takedown, reversal or user report adds labeled data that sharpens future predictions. Scheduled retraining turns that data into measurable gains.
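This is how human outcomes might become corrected training labels. It is a minimal sketch, and the three outcome names are hypothetical stand-ins for whatever a platform's review tooling records.

```python
from dataclasses import dataclass


@dataclass
class ModerationEvent:
    post_id: str
    model_flagged: bool  # what the classifier predicted
    outcome: str         # "upheld", "reversed_on_appeal" or "report_confirmed"


def to_training_label(event: ModerationEvent) -> tuple[str, bool]:
    """Return (post_id, is_violation) as corrected by human outcomes."""
    if event.outcome == "upheld":
        return event.post_id, event.model_flagged
    if event.outcome == "reversed_on_appeal":
        # A reversal means the model was wrong, so flip its prediction.
        return event.post_id, not event.model_flagged
    if event.outcome == "report_confirmed":
        # A user report that reviewers confirmed is a violation, whatever the model said.
        return event.post_id, True
    raise ValueError(f"unknown outcome: {event.outcome}")


events = [
    ModerationEvent("p1", True, "upheld"),
    ModerationEvent("p2", True, "reversed_on_appeal"),
    ModerationEvent("p3", False, "report_confirmed"),
]
retraining_batch = [to_training_label(e) for e in events]
print(retraining_batch)  # [('p1', True), ('p2', False), ('p3', True)]
```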

Measuring Effectiveness Through Precision and Recall

Monthly confusion-matrix audits reveal drift toward over- or under-enforcement. Balanced metrics prevent chasing one goal at the expense of another.
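These metrics fall straight out of an audited confusion matrix. In the sketch below the counts are made up. They show why balanced metrics matter: accuracy looks strong at 0.96 even though a quarter of the violating posts were missed (recall 0.75).

```python
def moderation_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Summarize a confusion matrix from an audited sample of decisions."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "precision": ratio(tp, tp + fp),            # removed posts that truly violated
        "recall": ratio(tp, tp + fn),               # violating posts that were caught
        "false_positive_rate": ratio(fp, fp + tn),  # innocent posts wrongly removed
    }


# Made-up audit: 1,000 sampled posts, 120 of them truly violating.
print(moderation_metrics(tp=90, fp=10, fn=30, tn=870))
```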

Regular Audits and Model Retraining

External audits confirm compliance and fairness. Quarterly retrains on fresh data keep pace with new slang and abuse tactics.

Handling Edge Cases and Unknown Content Types

Fallback routes send novel threats to rapid-response engineers for rule updates rather than leaving gaps attackers can exploit.

AI Moderation Across Languages and Cultures

Multilingual NLP Capabilities

Modern models cover 100-plus languages with dialect sensitivity, using shared subword embeddings that reduce training data needs for smaller tongues.

Region-Specific Slang and Idioms

Fine-tuning on local corpora prevents false flags on benign phrases and ensures real threats in regional slang do not slip through.

Cross-Border Regulatory Implications

Europe’s Digital Services Act, India’s IT Rules and California’s Age-Appropriate Design Code set different duties. Region-locked rule sets let platforms comply without slowing global rollouts.

Industry Trends and Innovations

Context-Aware Moderation Models

Graph-based architectures weigh user reputation, post history and reply context to rank violations more accurately than content alone.

Use of Generative AI to Understand Intent

Large language models can read whole conversation threads to infer intent, spotting coded harassment or coordinated raids hidden in apparently benign posts.

AI Moderation in Real-Time Live Streams

Edge inference on GPUs can process video and audio frames in under one second, making mid-stream takedowns realistic at scale.

Moderation in AR/VR and Immersive Environments

Spatial audio, gesture tracking and 3-D avatars introduce new vectors for abuse, so researchers build multimodal detectors that read body language along with speech.

Compliance and Regulatory Frameworks

Aligning With GDPR, COPPA and Local Laws

Data minimization, parental consent and transparency duties around automated decision-making shape feature roadmaps and logging practices.

Content Moderation Laws in the US and Globally

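In the US, Section 230 shields platforms from most liability for user content and remains the subject of reform debates, while Europe’s DSA adds explicit duties for “very large” platforms. The European Commission offers technical guidance that informs engineering sprints.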

Preparing for Audits and Policy Enforcement

Document data lineage, model versions and decision logs to simplify regulator reviews and bug hunts alike.
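One decision-log entry might look like the record below. It is a minimal sketch: the field names and the example model name and version are hypothetical. The idea is that each verdict carries the model version, score, threshold and policy rule that produced it.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def decision_record(content_id: str, model_name: str, model_version: str,
                    score: float, threshold: float, action: str,
                    policy_rule: str, reviewer: Optional[str] = None) -> str:
    """Serialize one moderation decision as a JSON line for an audit log."""
    record = {
        "content_id": content_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": {"name": model_name, "version": model_version},
        "score": score,
        "threshold": threshold,
        "action": action,
        "policy_rule": policy_rule,
        "human_reviewer": reviewer,  # None when the decision was fully automated
    }
    return json.dumps(record, sort_keys=True)


print(decision_record("post-123", "toxicity-classifier", "v3.2", 0.93, 0.90,
                      "remove", "harassment"))
```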

Transparency Reporting Best Practices

Quarterly reports on volume, category and geographic distribution of takedowns build public confidence and satisfy watchdog groups.
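The aggregation behind such a report can be this simple. In the sketch, the `(category, country)` record shape and the sample data are assumptions for illustration.

```python
from collections import Counter


def transparency_summary(takedowns: list[tuple[str, str]]) -> dict:
    """Aggregate (category, country_code) takedown records for a report."""
    return {
        "total": len(takedowns),
        "by_category": dict(Counter(category for category, _ in takedowns)),
        "by_country": dict(Counter(country for _, country in takedowns)),
    }


# Made-up quarter of takedowns.
quarter = [("spam", "US"), ("hate_speech", "DE"), ("spam", "IN"), ("spam", "US")]
print(transparency_summary(quarter))
```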

Metrics for Moderation Performance

Accuracy, Recall and False-Positive Rates

Balanced benchmarks reveal whether models lean strict or lenient. Publishing topline numbers invites constructive oversight and deters claims of hidden bias.

Time to Detect and Act on Harmful Content

Targets such as detection within 60 seconds for images and two minutes for video keep platforms ahead of the news cycle and preserve user trust.
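Tracking time to detection against targets like 60 seconds for images and two minutes for video needs only upload and action timestamps. This sketch treats those figures as example service levels and uses made-up event data.

```python
from statistics import median

# Example service levels, in seconds, taken from the targets discussed above.
TARGET_SECONDS = {"image": 60, "video": 120}


def detection_report(events: list[tuple[str, float, float]]) -> dict:
    """events: (media_type, uploaded_at, actioned_at) with times in seconds."""
    report = {}
    for media_type, target in TARGET_SECONDS.items():
        latencies = [acted - uploaded for kind, uploaded, acted in events
                     if kind == media_type]
        if not latencies:
            continue  # no events of this type in the window
        within = sum(1 for latency in latencies if latency <= target)
        report[media_type] = {
            "median_seconds": median(latencies),
            "share_within_target": within / len(latencies),
        }
    return report


sample_events = [("image", 0, 30), ("image", 0, 90), ("image", 0, 45),
                 ("video", 0, 100), ("video", 0, 200)]
print(detection_report(sample_events))
```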

User Satisfaction With Moderation Decisions

Surveys, sentiment analysis and appeal outcomes expose policy pain points before they translate into churn.

Building Trust With Users

Explaining Moderation Decisions

Plain-language notices detail which rule was triggered and how to fix the issue, turning frustration into learning.

Offering Appeal and Review Mechanisms

Fast, easy appeals show respect for user rights and surface blind spots in the policy matrix.

Creating Fair and Inclusive Policies

Advisory councils with gender, cultural and accessibility representation reduce blind spots and keep the rulebook in tune with real-world speech.

Future of AI Content Moderation

Next-Gen Models and Real-Time Capabilities

Edge inference on user devices could cut latency further and lower server costs. Quantization shrinks models enough to run on phones, and on-device processing keeps personal data local.

Greater Personalization and Contextual Understanding

Adaptive scoring may one day tailor thresholds to an individual’s community reputation while still protecting privacy through differential privacy techniques.

Autonomous Moderation Systems

Self-calibrating pipelines could tweak thresholds in real time as threat landscapes shift, guided by reinforcement-learning rewards aligned with policy goals.

Collaboration Between Platforms on AI Standards

Shared abuse-signature databases and open-source evaluation suites pool collective intelligence for a safer internet without duplicating effort.
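Here is a minimal sketch of signature sharing, assuming exact SHA-256 hashes from Python's `hashlib`. Platforms contribute hashes rather than the content itself. Exact hashes only match byte-identical files, so production systems generally rely on perceptual hashes such as PhotoDNA or Meta's open-source PDQ, which survive resizing and re-encoding. The `SharedSignatureDB` class is hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Exact-match signature; any byte change produces a different hash."""
    return hashlib.sha256(data).hexdigest()


class SharedSignatureDB:
    """Hypothetical pool of abuse signatures contributed by several platforms."""

    def __init__(self) -> None:
        self._signatures: dict[str, str] = {}  # signature -> first contributor

    def contribute(self, platform: str, sig: str) -> None:
        # Platforms submit only the hash, never the underlying file.
        self._signatures.setdefault(sig, platform)

    def is_known_abuse(self, data: bytes) -> bool:
        return signature(data) in self._signatures


db = SharedSignatureDB()
db.contribute("platform-a", signature(b"known bad file"))
print(db.is_known_abuse(b"known bad file"))   # True
print(db.is_known_abuse(b"known bad file!"))  # False: one extra byte defeats exact hashing
```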

Digital Nirvana: Purpose-Built Solutions for Responsible Moderation

Digital Nirvana combines decades of broadcast compliance with cutting-edge AI moderation. The MetadataIQ engine tags spoken words, scenes and on-screen text with frame-level precision, enriching downstream classifiers. The MonitorIQ suite captures live feeds, flags violations in near real time and archives footage for legal discovery. Managed review services blend these tools with seasoned analysts, letting media leaders meet strict standards without slowing production.

Conclusion

AI content moderation moves faster than bad actors and keeps digital neighborhoods welcoming. Combine accurate models with expert oversight, publish transparent rules and refine systems through user feedback. To see how tailored AI and expert workflows can secure your platform today, explore Digital Nirvana’s solutions or reach out for a demonstration.

FAQs

What makes AI content moderation different from keyword filters?

AI systems analyze context, sentiment and visuals, so they catch disguised insults, coded language and image-based threats that simple word lists miss.

How accurate are modern AI moderation tools?

Well-tuned deployments can reach precision and recall above 90 percent for some categories, though figures vary by language, content type and freshness of training data.

Can small startups afford AI content moderation?

Cloud APIs offer pay-as-you-go pricing, letting early-stage companies start small and scale safeguards alongside user growth.

Does AI moderation violate user privacy?

Responsible vendors anonymize data, encrypt it in transit and at rest, and discard personal information after analysis, aligning with GDPR and similar statutes.

Will AI replace human moderators entirely?

Humans remain essential for satire, regional nuance and appeals. AI handles the heavy lifting, while people bring context and accountability.