Hybrid AI dubbing drives video dubbing at scale with accurate AI output, clear lip-syncing rules, and tight audio tracks. Teams publish more languages with less hassle and keep storytelling intact. You choose the dubbing option that matches each scene’s needs and route work through an AI dubbing tool where it saves time. Human oversight guards translation accuracy, prosody, and intonation so viewers in Spain and Mexico hear dialogue that sounds native. Upload sources once, run batch processing where it fits, and ship platform-ready packages with integrity.

Hybrid AI dubbing for 2026 budgets without cutting quality

A hybrid plan blends AI Dubbing Technology with expert review so you reduce cost without losing Localization Quality. Models handle first passes on transcription, draft adaptation, alignment, and voice translation while people shape performance. Producers track time to publish, acceptance rates, and cost per minute, not just studio hours. Editors move faster with clean stems, consistent room tone, and labeled audio tracks. The result keeps viewer feedback high across OTT, YouTube, and websites.

Neodubbing: LiveDubbing speed with VoiceOver Accuracy

Neodubbing delivers a LiveDubbing style that pairs fast synthesis with a human VoiceOver director for accuracy and emotional quality. You set timing targets, then a reviewer tunes prosody and intonation on the lines that carry meaning. This method lifts translation quality for trailers, promos, and product intros. It turns around same-day cuts without losing tone. Treat it as a game changer for short-form Video Localization.

What hybrid AI dubbing means in practice

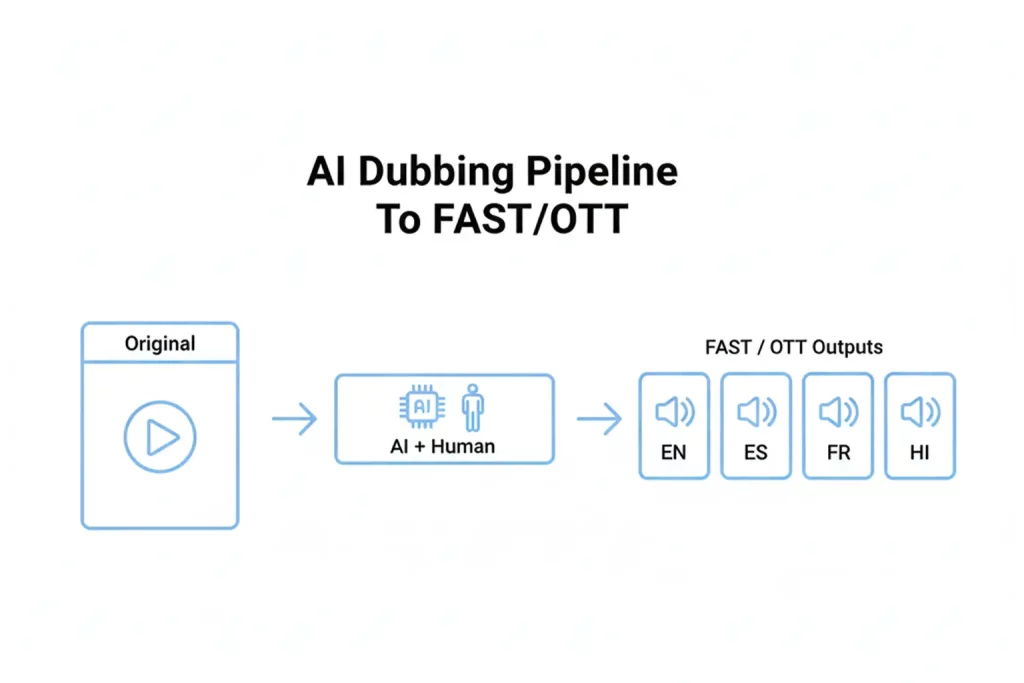

Hybrid means you run an AI Dubbing Process first, then apply focused human fixes. Linguists tighten meaning, directors coach energy, and engineers lock loudness and peaks. Legal teams confirm consent for synthetic voices and maintain tight access controls. Project managers use APIs to feed assets into Localization Workflows and return exports to CMS and MAM. You build a stack that supports rapid syndication and versioning.

AmazonDub playbook: Localization and Translation at scale

Think of an AmazonDub playbook as a benchmark for scale across the Content Localization Market. You use batch processing for catalogs, reuse voices, and route reviews by risk. You feed AI Metadata, glossaries, and timing rules into the pipeline so models start smart. You shape delivery for FAST and OTT partners without a custom scramble for every title. That approach cuts cost and protects relevance.

Our services at Digital Nirvana for hybrid AI dubbing

Our services at Digital Nirvana pair advanced AI with expert review to deliver natural Voice-Over and tight Lip-Syncing without budget shock. For end-to-end dubbing and subtitling, our Subs N Dubs service blends AI Dubbing Solutions with Human Oversight so localized audio feels native. When your workflow needs accurate scripts and captions, TranceIQ brings Translation Accuracy, Transcription, and Accessibility into one tool. Teams that enrich assets with scene tags and searchable markers use Media Enrichment Solutions to keep Collaboration smooth. Broadcasters that require caption compliance can also engage our Closed Captioning Services for broadcast-ready Subs with audit trails.

Human-in-the-loop workflow that pairs models with expert review

People anchor quality. Reviewers check dialects, accents, and cultural references. Directors punch up humor and pause placement for emotional beats. Engineers clean clicks and breaths and confirm Lip-Syncing on close-ups. Producers control review rounds so Collaboration stays efficient. This human layer turns raw outputs from an AI dubbing studio into broadcast-ready audio.

LiveDubbing checkpoints for Storytelling

Add checkpoints where humans judge Storytelling and emotional clarity. A director approves hero lines before final mix. A linguist confirms formal registers, honorifics, and regional quirks. An engineer audits plosives and sibilants that matter for strict sync. This flow improves Localization Quality without runaway cost.

Where AI-only tracks miss nuance, timing, or cultural cues

Full automation stumbles on sarcasm, rhyme, and idioms that carry tone. Models may force tight timing that crushes breaths and natural rhythm. Some voices flatten prosody and intonation in fast scenes. Region-specific slang in Spain or Mexico can drift from intent. A hybrid plan fixes misses fast and keeps Translation Accuracy intact. For a deeper take, see our blog on hybrid AI dubbing services.

Where human-only dubbing overspends on time and talent

Human sessions run long and book premium rooms for simple lines. Talent picks up repetitive bumpers and legal reads that an AI Dubbing Tool can handle. Logistics eat timelines when you juggle cast and studio calendars. Rates climb for late pickups even on low-stakes scenes. Hybrid shifts repetition to automation and keeps experts on the moments that sell.

Plan a 2026 dubbing budget with a hybrid model

Plan with your slate and targets by region. Tie languages to expected watch time and retention, not guesswork. Mark scenes that demand strict sync and scenes that allow natural read. Allocate director time to hard scenes and set a cap on passes elsewhere. Track every assumption inside a budget file you can defend.

VoiceOver and Accuracy targets by genre

For drama and docu-series, aim for voice-over accuracy that respects character arcs. For explainers and eLearning, favor natural rhythm and clear diction. For reality, set lighter sync and push speed. For trailers, tighten plosives and mouth closures. Document targets so teams read the same playbook.

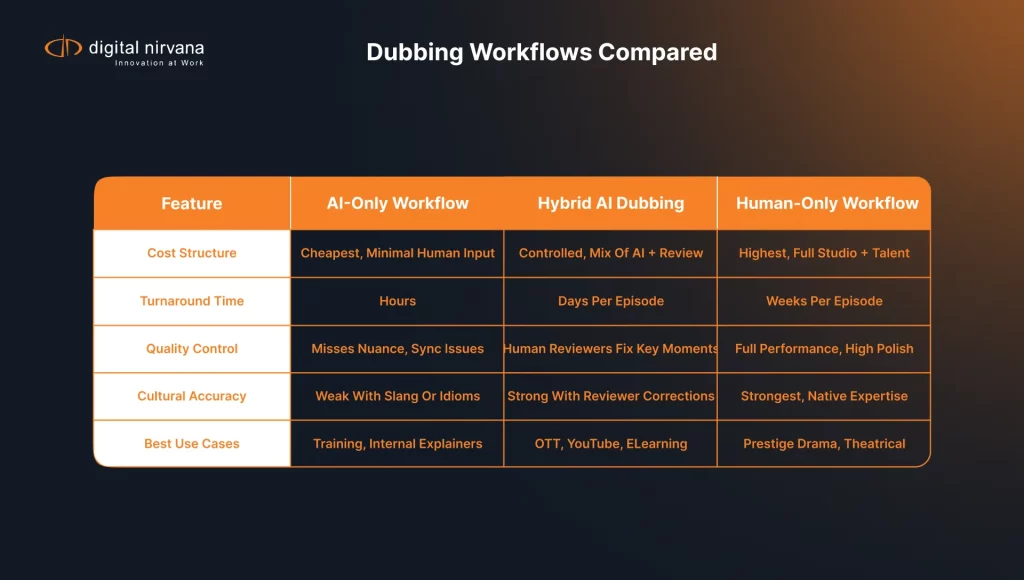

Per-minute cost ranges by workflow and content type

Model-only tracks sit at the low end for internal explainers and simple social clips. Hybrid ranges cover mid-tier budgets for long-form courses and episodic content with control points. Human-only dubs rise when you cast celebrities, demand strict lip match, or mix theatrical. Animation and children’s titles add time for character voices and extra checks. Always pair a range with languages, passes, and delivery specs.

Translation ranges for Neodubbing vs human reads

Neodubbing supports fast Translation on news cuts, trailers, and promos with one focused review. Human reads win for close-up drama and heavy comedy. Use pilots to lock your true numbers per title type. Keep a scorecard for forecast versus actual so finance trusts the model.

Cost drivers you can actually control

You control script clarity, glossary depth, and review rounds. You can standardize Lip-Syncing targets per genre. You can reuse approved voices and pronunciation rules across franchises. You can automate ingest, review routing, and Export Formats with APIs. You can plan Batch Processing windows to lower setup cost. For practical tips, read our post on AI metadata tagging.

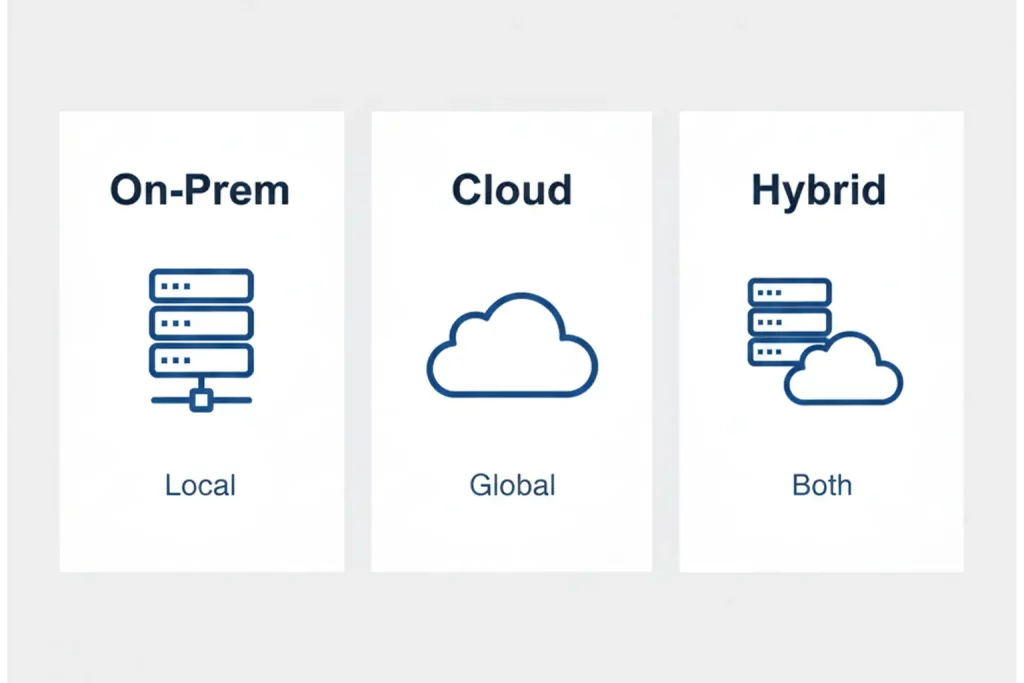

Dubbing Technology levers inside your Stack

Treat your Dubbing Technology Stack as levers, not a black box. Pick a Dubbing Tool that supports Access Control, role-based review, and clean Export Formats. Add chatbots for quick reviewer notes and line approvals. Use AI Metadata to tag scenes for risk so humans spend time where it matters. Keep Collaboration in one system to reduce hassle.
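One way to picture risk tagging is a small routing rule. The sketch below is illustrative only: the `Scene` fields, the tag names, and the thresholds are assumptions, not features of any specific dubbing tool.

```python
from dataclasses import dataclass, field

# Hypothetical risk tags; a real pipeline would define its own vocabulary.
HIGH_RISK_TAGS = {"close_up", "comedy", "cross_talk", "sensitive_topic"}


@dataclass
class Scene:
    scene_id: str
    tags: set = field(default_factory=set)


def route_scene(scene: Scene) -> str:
    """Return the review lane for a scene based on how many risk tags it carries."""
    hits = scene.tags & HIGH_RISK_TAGS
    if len(hits) >= 2:
        return "director_review"   # several risk factors: senior human pass
    if hits:
        return "linguist_review"   # one risk factor: focused human check
    return "spot_check"            # no risk tags: light sampling only


scenes = [
    Scene("s01", {"narration", "b_roll"}),
    Scene("s02", {"close_up"}),
    Scene("s03", {"close_up", "comedy"}),
]
routes = {s.scene_id: route_scene(s) for s in scenes}
```

A rule this simple is easy to audit, which helps when producers need to explain why a scene did or did not get director time.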

When to invest in premium human talent

Spend on marquee scenes, sensitive topics, and brand voices. Book senior directors for comedy timing, fast cross-talk, and high emotion. Hire language supervisors for Dialects that need local insight. Cast recognized voices for trailers in markets that respond to celebrity cues. Put senior mixers on theatrical or flagship OTT releases.

Compare AI dubbing services vs hybrid vs human-only

Compare services with the same asset and assumptions. AI-only wins on speed and price for internal training and evergreen explainers. Hybrid wins on balance for OTT, streaming, YouTube, and eLearning. Human-only wins on craft for prestige drama and brand films. If a vendor forces one Dubbing Method on every title, your budget pays the price. Our blog on digital broadcast monitoring shows how quality metrics surface issues early.

AmazonDub vs LiveDubbing vs traditional sessions

AmazonDub emphasizes scale and catalog throughput. LiveDubbing emphasizes speed on tentpole moments. Traditional sessions emphasize performance for high-stakes scenes. Use each mode where it fits and stop forcing one shape onto every episode.

Quality, speed, and price tradeoffs by use case

Training videos need clarity, timing, and consistent voices. Product demos need accurate technical terms and confident tone. Reality TV needs fast turns and flexible pickups. Drama needs emotion, character continuity, and delicate sync. Children’s content needs safe language, steady character voices, and clear prosody.

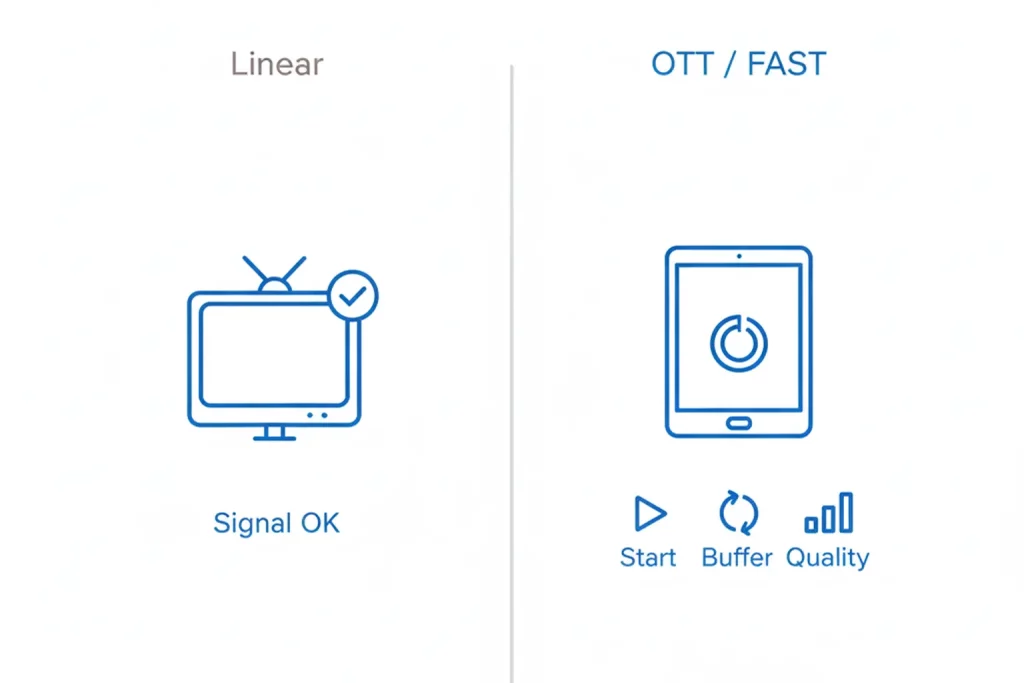

OTT, streaming, YouTube, and eLearning scenarios

OTT and FAST channels require strict packaging, stems, and loudness targets. YouTube rewards speed, thumbnail tests, and social cadence. eLearning platforms want consistent voices across modules and simple revision paths. Corporate streams need privacy and Access Control. Learn how multilingual captioning supports reach in our captioning guide.

Localization on Netflix and specialty streamers

Use platform specs as guardrails. Netflix sets tight bars for loudness, captions, and descriptive audio. Specialty platforms like MHz Choice lean into niche content where dialects and accents matter. Reuse voices across seasons so fans trust continuity. Validate sample packages before you ship a season.

Choose languages, voices, and tone that convert

Pick languages from analytics and the Video Translation Market, not hunches. Match voices to audience age and region. Define tone in plain English that directors and models can follow. Build pronunciation dictionaries from product names and local slang. Test a few options, then lock baselines for the season.

Neodubbing and LiveDubbing choices for trailers

For trailers, mix strict sync on hero shots with natural reads on montage lines. Keep energy high and land taglines with confidence. Run quick A/B tests on voice timbre. Lean on Viewer Feedback to confirm hook strength. Protect character integrity across marketing and episodes.

Build a production-ready hybrid dubbing workflow

Start with clean source and end with platform packages. Capture transcripts and timecodes, adapt scripts, and set sync targets by scene. Generate draft reads, review timing and emotion, and replace weak lines with human takes. Deliver stems, M&E, and labeled exports. Log decisions so season two moves faster. For deeper ops insight, see our post on media organizing upgrades.

Script prep, glossaries, and brand voice guides

Lock a source script with timecodes. Build glossaries for names, acronyms, and product terms. Keep a voice guide with do and don’t examples. Share references so reviewers hear the target. Update docs every episode to avoid drift.

Lip-sync targets and timing rules

Set targets per scene. Comedy and high drama need tight sync on plosives and mouth closures. Narration can favor natural reads with lighter sync. Note fast cross-talk, crowd beds, and on-screen text that dictates timing. Keep rules short and clear so teams follow them.

Strict lip-match vs natural-read options

Use strict match on close-ups and dialogue-heavy scenes. Use natural read on wide shots, B-roll, and graphics. Strict match needs more pickups and director time. Natural read speeds instructional content and saves budget. Document the choice by scene.

Audio post, mix, and delivery specifications

Lock deliverables before recording. Define stems, multitracks, and an M&E that matches the platform. Set loudness targets and true-peak limits for OTT and broadcast. The FCC’s CALM Act rules explain loudness expectations for broadcast and cable spots. Review the FCC guidance at the CALM Act page. Specify file names, channel order, and container types. Deliver sample files early to confirm specs.

Stems, M&E, multitracks, and loudness targets

Provide separate tracks for dialogue, music, effects, and ambience. Keep a clean M&E for future dubs and mix updates. Track loudness with standard meters and fix true-peak overs before export. Use consistent channel layouts to reduce mistakes. Archive sessions and notes so future edits move fast.
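A pre-export gate for loudness can be sketched in a few lines. The numbers below are placeholder values loosely modeled on common US broadcast practice, and the measured figures are assumed to come from whatever loudness meter you already use; confirm every target against the platform's current delivery spec.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoudnessSpec:
    target_lkfs: float         # integrated loudness target
    tolerance_lu: float        # allowed deviation either side of the target
    max_true_peak_dbtp: float  # ceiling for true-peak level


# Assumed example values, not an official platform spec.
BROADCAST_SPEC = LoudnessSpec(target_lkfs=-24.0, tolerance_lu=2.0, max_true_peak_dbtp=-2.0)


def check_mix(integrated_lkfs: float, true_peak_dbtp: float, spec: LoudnessSpec) -> list:
    """Return a list of problems; an empty list means the mix passes this gate."""
    problems = []
    if abs(integrated_lkfs - spec.target_lkfs) > spec.tolerance_lu:
        problems.append(
            f"integrated loudness {integrated_lkfs} LKFS is outside "
            f"{spec.target_lkfs} +/- {spec.tolerance_lu}"
        )
    if true_peak_dbtp > spec.max_true_peak_dbtp:
        problems.append(
            f"true peak {true_peak_dbtp} dBTP exceeds {spec.max_true_peak_dbtp} dBTP"
        )
    return problems


passing = check_mix(-23.5, -3.0, BROADCAST_SPEC)
failing = check_mix(-20.0, -1.0, BROADCAST_SPEC)
```

Returning a list of problems rather than a single pass or fail flag lets engineers see every issue in one run.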

Safeguard quality without blowing the budget

Quality control starts at intake. Add light checks after each automated stage to catch drift early. Use reviewers who know the genre and region. Track issue patterns and fix root causes. Hold short passes that add value and keep momentum.

Checkpoints for reviewers and linguistic QA

Create checkpoints for meaning, tone, and sync. Reviewers score each pass and flag critical fixes. Linguists watch registers and honorifics that matter in culture. Directors judge energy and character consistency. Engineers check noise, breaths, clicks, and levels.

Pronunciation dictionaries and style notes

Compile dictionaries with phonetic hints and sample audio. Note preferred pronunciations for names and borrowed words. Store style notes for contractions and energy levels. Share examples of strong and weak deliveries. Keep one master copy and push updates to all teams.

Review rounds that stay on schedule

Limit passes and timebox reviews. Use light passes for fixes and one heavy pass for sign-off. Escalate only the lines that affect story or legal risk. Close with a final listen that confirms corrections. Keep a changelog so teams learn.

Protect rights, privacy, and ethics in AI dubbing

Cloned or synthetic voices require explicit consent and secure storage. Contracts must define usage, term, and markets. Encrypt training data and voice assets and limit export. Remove personal identifiers and set deletion windows. Credit talent and reviewers to honor their work. To meet Accessibility obligations for captions and transcripts, follow the U.S. Section 508 guidance.

Secure consent and usage for cloned or synthetic voices

Use opt-in consent for any captured or cloned voice. Define where, how long, and in what contexts you can use the voice. Provide a kill switch for contract changes. Track each use in an auditable ledger. Share a plain-English summary with talent.
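The consent rules above can be modeled as a record plus a check that logs every decision. This is a minimal sketch under stated assumptions: the `VoiceConsent` fields, the market codes, and the in-memory ledger are hypothetical, and a production system would persist the ledger in tamper-evident storage.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class VoiceConsent:
    talent_id: str
    markets: frozenset      # where the voice may be used
    contexts: frozenset     # in what kinds of content
    valid_from: date        # how long: start of term
    valid_until: date       # how long: end of term
    revoked: bool = False   # the "kill switch"


def may_use(consent: VoiceConsent, market: str, context: str, on: date) -> bool:
    """True only if the use is inside the term, market, and context, and not revoked."""
    if consent.revoked:
        return False
    if not (consent.valid_from <= on <= consent.valid_until):
        return False
    return market in consent.markets and context in consent.contexts


def request_use(consent, market, context, on, ledger) -> bool:
    """Check a use and append the decision to the ledger, allowed or not."""
    allowed = may_use(consent, market, context, on)
    ledger.append({"talent_id": consent.talent_id, "market": market,
                   "context": context, "date": on.isoformat(), "allowed": allowed})
    return allowed


consent = VoiceConsent("talent_42", frozenset({"ES", "MX"}),
                       frozenset({"trailer", "episode"}),
                       date(2026, 1, 1), date(2026, 12, 31))
revoked = VoiceConsent("talent_42", consent.markets, consent.contexts,
                       consent.valid_from, consent.valid_until, revoked=True)
ledger = []
ok = request_use(consent, "MX", "trailer", date(2026, 3, 1), ledger)
blocked = request_use(consent, "US", "trailer", date(2026, 3, 1), ledger)
```

Logging denied requests as well as approved ones gives legal teams a complete picture when they audit voice usage.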

Guard data sets and personal information

Store training data in access-controlled systems. Rotate keys, log access, and limit exports. Strip personal data that models do not need. Set retention windows and delete on schedule. Test backups and recovery so production keeps moving.
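A retention window is easy to enforce once every asset carries a storage date. The sketch below assumes a hypothetical asset record with `asset_id` and `stored_on` fields and an example 365-day window; your contracts and privacy policy set the real value.

```python
from datetime import date, timedelta

RETENTION_DAYS = 365  # assumed example window


def due_for_deletion(assets, today, retention_days=RETENTION_DAYS):
    """Return the IDs of assets stored before the retention cutoff."""
    cutoff = today - timedelta(days=retention_days)
    return [a["asset_id"] for a in assets if a["stored_on"] < cutoff]


assets = [
    {"asset_id": "voice_001", "stored_on": date(2024, 1, 10)},
    {"asset_id": "voice_002", "stored_on": date(2025, 11, 1)},
]
expired = due_for_deletion(assets, today=date(2026, 1, 15))
```

Running a check like this on a schedule, and logging what it deletes, turns the retention policy into something you can show an auditor.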

Credit talent, reviewers, and local language teams

List voice actors, directors, linguists, and engineers in metadata and credits. Respect local norms for name order. Share wins and highlight clips with teams. Recognition helps you retain talent and stabilize quality.

Measure ROI for AI dubbing services

Measure watch time, completion, and retention by language and platform. Compare time to publish and cost per minute across workflows. Run A/B tests on scripts, voices, and sync targets. Report on fixes per pass and acceptance rates to show gains. Tie metrics to business results for a clear story.

KPIs: watch time, completion rate, and retention

Watch time shows whether viewers stick with the story. Completion rate matters for training and support. Retention graphs reveal drop-offs where timing or tone slips. Segment by market to see local behavior. Connect KPI movement to script and voice choices.

A/B test models, voices, and scripts

Test one variable at a time and run long enough to trust results. Try alternate voices for key characters. Test script lines for clarity and humor in each market. Check versions with different sync targets and study Viewer Feedback. Keep a test log that informs the next season.
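To judge whether a test has run long enough, one common option is a two-proportion z-test on completion rates. The sketch below uses that standard technique as an illustration, not as a prescribed method; the viewer counts are made-up inputs.

```python
import math


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Compare completion rates of variants A and B.

    Returns (z, two_sided_p_value). A positive z means B completed more often.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("completion rates are all 0% or all 100%; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Hypothetical counts: completions out of viewers for voice A and voice B.
z, p_value = two_proportion_z(400, 1000, 460, 1000)
```

A small p-value suggests the gap between voices is unlikely to be noise. With few viewers the same gap would not clear the bar, which is the practical reason to let tests run.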

Track time-to-publish and per-minute cost

Measure end to end. Count waiting time for reviews and pickups. Track cost per minute by language, show type, and platform. Compare AI-only, hybrid, and human-only on the same title. Publish a quarterly scorecard for stakeholders.
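Both metrics reduce to simple arithmetic once you log costs and timestamps. The sketch below is a minimal illustration; the job record and its figures are invented, and the clock is assumed to start when source files arrive and stop at publish, so review waits are included.

```python
from datetime import datetime


def cost_per_minute(total_cost: float, runtime_minutes: float) -> float:
    """Total spend for one language track divided by program runtime."""
    if runtime_minutes <= 0:
        raise ValueError("runtime_minutes must be positive")
    return total_cost / runtime_minutes


def time_to_publish_hours(received: datetime, published: datetime) -> float:
    """Elapsed hours from source receipt to publish, waiting time included."""
    return (published - received).total_seconds() / 3600


# Hypothetical job: a 30-minute episode in one language.
job = {
    "workflow": "hybrid",
    "total_cost": 1200.0,
    "runtime_minutes": 30.0,
    "received": datetime(2026, 1, 5, 9, 0),
    "published": datetime(2026, 1, 7, 9, 0),
}
cpm = cost_per_minute(job["total_cost"], job["runtime_minutes"])
ttp = time_to_publish_hours(job["received"], job["published"])
```

Computing both numbers from the same job record keeps the quarterly scorecard consistent across workflows.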

How Digital Nirvana delivers hybrid AI dubbing at scale

Digital Nirvana blends AI Dubbing Solutions with skilled reviewers to ship consistent dubs quickly. Our teams prepare scripts, timing, and pronunciation rules, then drive focused reviews. Engineers deliver clean stems, M&E, and platform mixes with clear naming and loudness compliance. Producers maintain audit trails for voice rights, data handling, and approvals. You get one pipeline that supports weekly drops and catalog backfills.

Human-led QC on top of advanced AI models

We assign reviewers who know your genre and regions. They confirm meaning, tone, and sync at each checkpoint. Directors step in for hero lines and tricky beats. Engineering logs catch noise, pops, and alignment issues before export. This stack raises quality without slowing delivery.

Security, compliance, and audit trails built in

We secure voice assets and training data with encryption, access controls, and logging. Consent and contracts live in a system that ties usage to episodes and markets. Our audit trails help privacy teams answer questions fast. We set retention windows and delete on schedule. Your content ships with the right protections.

Integrations with captioning, metadata, and monitoring

Digital Nirvana links dubbing with captioning, Subs, and AI Metadata. Our tools align transcripts, tag scenes, and carry style notes into post. We monitor output against loudness and platform specs to prevent rejections. We push deliveries into CMS or MAM with status updates. The pipeline supports Syndication across platforms.

Choose the right partner for AI dubbing services

Pick a partner who proves quality with a pilot and offers flexible options. Ask for human control points and transparent pricing. Check language coverage, casting depth, and director bench strength. Review security posture for voice assets and personal data. Demand references from your genre.

2026 RFP checklist and scoring tips

Score proposals on script handling, timing control, voice library depth, and review design. Weigh security, consent workflows, and audit readiness. Compare integrations for CMS, MAM, APIs, and Export Formats. Ask for per-minute pricing with assumptions and review rounds. Run a weighted score so price does not drown out quality.
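A weighted score can be as simple as the sketch below. The criteria names, the weights, and the vendor scores are all assumptions chosen for illustration; set your own weights before you read any proposals.

```python
# Hypothetical weights; they must sum to 1.0.
WEIGHTS = {"quality": 0.40, "security": 0.25, "integrations": 0.15, "price": 0.20}


def weighted_score(scores: dict, weights: dict = WEIGHTS) -> float:
    """Combine 0-10 criterion scores into one weighted total."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[name] * weight for name, weight in weights.items())


# Made-up vendors: A is stronger on craft, B is cheaper.
vendor_a = {"quality": 9, "security": 8, "integrations": 7, "price": 5}
vendor_b = {"quality": 6, "security": 6, "integrations": 7, "price": 10}
score_a = weighted_score(vendor_a)
score_b = weighted_score(vendor_b)
```

With these example weights the cheaper vendor does not win on price alone, which is the point of weighting before scoring.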

Questions about models, data handling, and support

Ask which models they use and why. Ask how they fine-tune without overfitting to personal data. Ask how they store voices and who can access them. Ask about support hours and escalation during weekly drops. Ask for owners for script, timing, and mix decisions.

Run a pilot that proves quality fast

Pick one title, two languages, and a mix of strict sync and natural reads. Set success metrics for cost per minute, time to publish, and retention. Share scripts, glossaries, and style notes and see how the vendor improves them. Run a short A/B test on voices or sync levels. Bake lessons into season plans.

Budget templates and sample scenarios

Templates help teams plan and defend budgets. Use a sheet that toggles workflow by scene type and language. Include review rounds, casting, and audio post. Bake in fixed costs for setup and delivery. Use the scenarios below to test your ranges.
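The same template logic fits in a short script. Everything numeric here is a placeholder: the per-minute rates, fixed costs, and review costs are hypothetical values in an arbitrary currency unit, meant to be replaced with quotes from your own pilot.

```python
# Hypothetical per-minute rates by workflow; not real market prices.
RATES = {"ai_only": 5.0, "hybrid": 20.0, "human_only": 60.0}


def estimate_budget(scene_minutes: dict, languages: list,
                    fixed_cost_per_language: float,
                    review_rounds: int = 1,
                    cost_per_review_round: float = 0.0) -> float:
    """Estimate total cost for one title.

    scene_minutes maps a workflow name to the minutes routed through it.
    Fixed and review costs are charged once per language.
    """
    variable = sum(RATES[workflow] * minutes
                   for workflow, minutes in scene_minutes.items())
    per_language = (variable + fixed_cost_per_language
                    + review_rounds * cost_per_review_round)
    return per_language * len(languages)


# Example: a 30-minute episode split across workflows, dubbed into two languages.
total = estimate_budget(
    scene_minutes={"ai_only": 10, "hybrid": 15, "human_only": 5},
    languages=["es-ES", "es-MX"],
    fixed_cost_per_language=100.0,
    review_rounds=2,
    cost_per_review_round=25.0,
)
```

Changing the minutes routed to each workflow shows how much of the budget depends on scenes that need full human performance.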

Short social clip under two minutes

Plan a light script adaptation, quick timing check, and a natural read. Run one review pass and a simple stereo mix. Expect quick turnaround for social cadence. Reuse the same voice across a series for brand consistency. Export Formats should match platform guidance.

Fifteen-minute training or product module

Budget a hybrid workflow with a human final read on key sections. Add a glossary pass and two review rounds for clarity. Deliver stems, an M&E, and a clean mix to your LMS or CMS. Track completion and quiz scores to measure impact. Reuse approved voices across modules.

Thirty-minute documentary or OTT episode

Plan strict sync for close-ups and natural reads for narration. Cast two primary voices and add a director pass for emotional scenes. Budget three review rounds and careful audio post. Deliver full stems, M&E, and platform-specific packages. Track watch time and retention by territory after launch.

Avoid common pitfalls that waste money

Teams often skip prep and pay later. They ignore glossaries and tone guides and end up with pickups. They underestimate language quirks and get awkward phrasing. They overlook audio post and delivery specs and bounce at ingest. They invite too many reviewers and stall momentum.

Skipping scripts, glossaries, and tone guides

A weak script or missing glossary creates confusion. Reviewers argue over phrasing instead of performance. Voices drift in tone across episodes. You pay for pickups and rush fees. Prep saves money and time.

Underestimating language-specific challenges

Some languages require different pacing, formality, or honorifics. Others handle gender or politeness in ways that change word choice. Mouth shapes and phonetics make strict sync harder for certain pairs. Local idioms may need rewrites to sound natural. Plan per market and save budget.

Ignoring audio post and platform delivery needs

Platforms reject files that miss specs on loudness, peak, or channel order. Social clips fail when you ignore aspect ratios and captions. OTT requires clean M&E for future mixes. Without clear delivery rules, teams waste time repackaging. Lock specs early and validate with samples.

Scale multilingual dubbing across platforms

Scale without losing quality. Standardize scripts and timing rules so teams move in parallel. Set watch folder or API handoffs into CMS, MAM, and delivery platforms. Reuse voices, style notes, and mix templates. Track status in one dashboard and clear blockers fast.

OTT and FAST channels: packaging and specs

Prepare full packages with stems, M&E, captions, and metadata. Match loudness and peak targets per platform. Name and label assets so ingest systems accept them. Include territory rights and voice usage in metadata. Validate a sample before you push a season.

YouTube and social distribution cadence

Publish fast and test thumbnails and hooks. Keep consistent voices across a series to build trust. Deliver mixes that travel well on mobile speakers and earbuds. Post variants with burned-in captions for silent autoplay. Study retention and adjust scripts where drop-offs spike.

CMS, MAM, and API integrations for automation

Connect the dubbing pipeline to your content systems. Automate ingest, review assignments, and delivery. Keep status visible to producers and legal teams. Store approvals and rights in the same record as the audio. This automation removes manual steps and saves budget.

Our services at Digital Nirvana to prove ROI

At Digital Nirvana, we help you hit quality bars while controlling spend with proven AI Dubbing Solutions. Producers start with Subs N Dubs for Video Dubbing that balances speed and nuance, then fold in TranceIQ to strengthen scripts and Subs. Teams that need richer search and scene intelligence add Media Enrichment Solutions. Broadcasters that prioritize compliance and Accessibility can tie in Closed Captioning Services for audit-ready outputs and clean handoffs to platforms.

In summary…

A hybrid dubbing plan trims cost and keeps quality strong across channels. You mix AI Dubbing Technology with expert review and use clear rules. You protect rights and privacy while you scale. You measure what matters and adjust based on data. Use the playbook below to align teams and output.

- Budget design
  - Map languages to goals by platform and region in the Video Translation Market.
  - Reserve human craft for high-impact scenes and trailers.
  - Set targets for cost per minute and time to publish.

- Workflow
  - Lock scripts, glossaries, and timing rules.
  - Use strict sync where the camera demands it.
  - Deliver stems, M&E, and platform-ready mixes.

- Quality
  - Add light reviews after each automated step.
  - Track fixes per pass and acceptance rates.
  - Maintain dictionaries and style notes.

- Rights and security
  - Collect consent for cloned or synthetic voices.
  - Vault data sets and log access.
  - Credit talent and local teams.

- Measurement
  - Watch time, completion, and retention by market.
  - A/B test voices, scripts, and sync targets.
  - Report cost per minute and time to publish.

Choose a partner who can prove results with a pilot and who builds a repeatable pipeline. If you want a quick path, talk with Digital Nirvana about a two-language test on a title from your slate. We will guide script prep, timing rules, and delivery so you can measure gains quickly.

FAQs

How do hybrid AI models cut costs without losing quality?

Models handle first passes and Batch Processing. Humans tune meaning, timing, and performance. Reviewers catch cultural risks before on-air use. Engineers shape the mix to platform targets. This split lowers cost per minute and keeps quality high.

What projects still call for human-only dubbing?

Prestige drama, theatrical releases, and celebrity campaigns benefit from full performance. Close-up comedy leans on human timing. Children’s series need character acting that current models cannot match. Sensitive topics or regulated markets may require manual control. You can still run hybrid for narration and promos around those titles.

How fast can a hybrid team turn around episodes?

Turnaround depends on language count, review rounds, and sync targets. Many teams complete a half-hour episode in a few days once the pipeline runs smoothly. Draft reads can land within hours, then reviews and pickups finalize tracks. Batch deliveries and consistent voices keep speed high. A clear calendar prevents idle time.

Can I reuse voices and models across projects?

Yes. Reuse brings consistency and speed. Lock voice profiles, dictionaries, and style notes by brand or show. Store rights and consent so you can roll voices into new seasons. Refresh voice choices if tone or character needs change.

How do I estimate costs before kickoff?

Build a short brief for one or two scenes. Set sync targets, voice needs, and review counts. Ask vendors for per-minute pricing with those assumptions. Run a pilot to confirm timing and quality. Use results to drive annual plans and vendor scorecards.