AI metadata tagging powers modern content workflows. When algorithms label photos, footage, and audio with precise descriptors, creative teams locate material faster, meet compliance, and turn dormant archives into fresh revenue.

Metadata guides users straight to the frame they need. Manual logging cannot keep pace with terabytes uploaded each hour, but artificial intelligence closes that gap by describing media at machine speed. In newsrooms, e-commerce studios, and streaming libraries across the United States, smart tagging runs around the clock so people can focus on ideas rather than data entry. Some early adopters report search times dropping by as much as 70 percent after deployment.

Explore practical tagging tips in our AI postproduction workflows guide, and see how broadcasters sharpen search in this analysis of automated metadata. Both articles show AI at work inside complex media pipelines.

Our Services at Digital Nirvana

Digital Nirvana offers comprehensive automated ad detection solutions that integrate seamlessly into broadcast workflows. Our services deliver robust monitoring and compliance tools that index every ad with frame-level accuracy. By combining AI-driven fingerprinting and metadata parsing, we capture a detailed view of when and where ads run. We also help ensure your operations adhere to any relevant regulations, whether local, federal, or international.

If you need a deeper dive or want to explore how our automated solutions could align with your business goals, visit our Digital Nirvana resource library for case studies and technical insights. Our agile cloud architecture scales with demand, so you can monitor multiple channels without sacrificing performance. Our engineering team is ready to help you integrate ad detection with your existing media asset management, traffic, and billing systems.

Understanding AI Metadata Tagging

What Is Metadata and Why It Matters

Metadata explains the who, what, when, where, and why behind every asset. Accurate tags turn a chaotic drive into a navigable library, raise content visibility on search engines, and support copyright, licensing, and accessibility tasks. A well-structured tag set can lift on-platform engagement, in some cases by double-digit percentages, because viewers find relevant clips instead of abandoning the search.

How AI Learns to Tag Content Automatically

Developers feed thousands of labeled samples into supervised models. The algorithm detects patterns in color, motion, or language, then predicts tags for new media. Continuous feedback and fine-tuning improve precision as the library grows. Advanced systems use active learning, requesting human input only on edge cases to speed improvement.
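Active learning can be as simple as "least-confidence sampling": rank assets by how sure the model is about its top tag and send the least certain ones to reviewers first. The sketch below assumes the model returns a probability per candidate tag; the `predictions` layout and the `budget` parameter are hypothetical, not any vendor's API.

```python
from typing import Dict, List

# Hypothetical model output: asset ID -> {candidate tag: probability}
Predictions = Dict[str, Dict[str, float]]


def select_for_review(predictions: Predictions, budget: int) -> List[str]:
    """Least-confidence sampling: return up to `budget` asset IDs whose
    most likely tag has the lowest probability, i.e. the model's edge cases."""
    def top_confidence(asset_id: str) -> float:
        scores = predictions[asset_id]
        return max(scores.values()) if scores else 0.0

    # Lowest top-confidence first; those labels teach the model the most.
    return sorted(predictions, key=top_confidence)[:budget]


if __name__ == "__main__":
    preds = {
        "clip-001": {"goal": 0.97, "crowd": 0.40},
        "clip-002": {"jaguar_animal": 0.52, "jaguar_car": 0.48},
        "clip-003": {"interview": 0.71},
    }
    print(select_for_review(preds, budget=2))  # ['clip-002', 'clip-003']
```

Human labels gathered for the selected clips are then added to the training set, so reviewer effort goes where the model is weakest.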

Difference Between Manual and AI-Driven Tagging

Humans grasp nuance but tire after hours of repetitive work. AI processes thousands of clips per hour, never slows, and applies rules consistently. Pairing human oversight with automated speed delivers rich metadata without backlog, freeing staff for creative tasks and investigative storytelling.

Benefits of AI Metadata Tagging

Faster Indexing at Scale

An AI engine can log an hour-long broadcast in minutes. Editors bypass frame-by-frame slogging and meet tight air dates even during breaking-news cycles. One U.S. sports network cut highlight-package turnaround from 30 minutes to under five after automation.

Improved Discoverability and Search Performance

Search bars return deeper, more accurate results. Producers surface forgotten gems, while recommendation engines use dense tags to assemble binge-worthy playlists. Better discoverability increases watch time, which translates into bigger ad inventory.

Enhanced Workflow Automation

New tags can trigger rights checks, rough-cut assembly, or social posts. The metadata layer becomes a command center that propels projects forward without manual intervention, shaving days off postproduction calendars.
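One way to picture the "command center" is a rule table that maps tags to downstream actions. This is a minimal sketch under stated assumptions: the tag names, the `TRIGGERS` table, and the three `queue_*` functions are hypothetical stand-ins for calls into real rights, editing, or social-publishing systems.

```python
from typing import Callable, Dict, List


# Hypothetical downstream actions; real ones would call external systems.
def queue_rights_check(asset_id: str) -> str:
    return f"rights-check queued for {asset_id}"


def queue_rough_cut(asset_id: str) -> str:
    return f"rough-cut assembly queued for {asset_id}"


def queue_social_post(asset_id: str) -> str:
    return f"social post drafted for {asset_id}"


# Rule table: when a tag appears on an asset, run the listed actions.
TRIGGERS: Dict[str, List[Callable[[str], str]]] = {
    "licensed_music": [queue_rights_check],
    "goal": [queue_rough_cut, queue_social_post],
}


def on_new_tags(asset_id: str, tags: List[str]) -> List[str]:
    """Fire every action registered for the incoming tags, at most once each."""
    fired: List[str] = []
    seen = set()
    for tag in tags:
        for action in TRIGGERS.get(tag, []):
            if action not in seen:
                seen.add(action)
                fired.append(action(asset_id))
    return fired
```

Keeping rules in data rather than code lets operations staff add a trigger without a deployment.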

Consistency Across Asset Libraries

Uniform rules keep “soccer” separate from “football” and eliminate alternate spellings. Harmonized tags produce clean analytics, fuel accurate reporting, and enable global search across dozens of repositories.
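Uniform rules usually take the form of a controlled vocabulary that maps variants to one preferred term. The sketch below assumes a small hand-built `PREFERRED` dictionary (hypothetical entries); note that "soccer" and "football" remain separate preferred terms, while spelling variants collapse.

```python
from typing import Dict, Iterable, List

# Hypothetical controlled vocabulary: variant -> preferred term.
PREFERRED: Dict[str, str] = {
    "soccer": "soccer",
    "association football": "soccer",
    "football": "football",
    "american football": "football",
    "color grading": "color grading",
    "colour grading": "color grading",
}


def canonical(tag: str) -> str:
    """Case-fold and collapse whitespace before vocabulary lookup."""
    return " ".join(tag.casefold().split())


def normalize_tags(tags: Iterable[str]) -> List[str]:
    """Map each tag to its preferred term, dropping duplicates but keeping
    order. Unknown tags pass through in canonical form for later review."""
    out: List[str] = []
    for tag in tags:
        key = canonical(tag)
        term = PREFERRED.get(key, key)
        if term not in out:
            out.append(term)
    return out
```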

Lower Operational Costs

Automating bulk tagging trims overtime, reduces freelance invoices, and frees cash for acquisition or creative development. Many teams aim to recoup the investment within one budget cycle.

Core Technologies Behind AI Tagging

Natural Language Processing for Text

NLP engines convert transcripts, scripts, and descriptions into structured data. Named-entity recognition extracts people, places, and topics, while sentiment analysis gauges tone for marketing units eager to map audience emotion.

Computer Vision in Image and Video

Vision models detect objects, actions, and logos. Bounding boxes map each instance to exact timecodes so editors jump straight to a critical moment instead of scrubbing hours of footage. Recent models even detect brand-safety risks such as violence or nudity.
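Detectors usually report results per frame, so jumping "straight to a critical moment" means converting a frame index to timecode. A minimal sketch, assuming non-drop-frame HH:MM:SS:FF timecode at an integer frame rate such as 24, 25, or 30; 29.97 fps drop-frame timecode needs a different calculation. The `detections` tuples are hypothetical detector output.

```python
def frame_to_timecode(frame: int, fps: int) -> str:
    """Convert a zero-based frame index to non-drop-frame HH:MM:SS:FF
    timecode at an integer frame rate (e.g. 24, 25, 30)."""
    if frame < 0 or fps <= 0:
        raise ValueError("frame must be >= 0 and fps > 0")
    frames = frame % fps
    total_seconds = frame // fps
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


# Hypothetical detector output: (frame index, label, (x, y, width, height))
detections = [(0, "logo", (10, 10, 80, 40)), (90_061, "goal", (300, 120, 200, 180))]
for frame, label, box in detections:
    print(frame_to_timecode(frame, fps=25), label, box)
```

At 25 fps, frame 90,061 lands at 01:00:02:11, which an editing system can use as a marker location.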

Audio Signal Processing for Sound and Speech

Automatic speech recognition transforms dialog into searchable text. Separate classifiers identify applause, sirens, or crowd noise, giving highlight editors a fast way to pull crowd-pleaser moments.

Machine Learning Models for Contextual Accuracy

Domain-specific fine-tuning teaches engines industry jargon, slang, and proprietary terminology. As the system ingests corrections, its accuracy tends to improve with each batch, making it more reliable over time.

Hardware Acceleration and Deployment Models

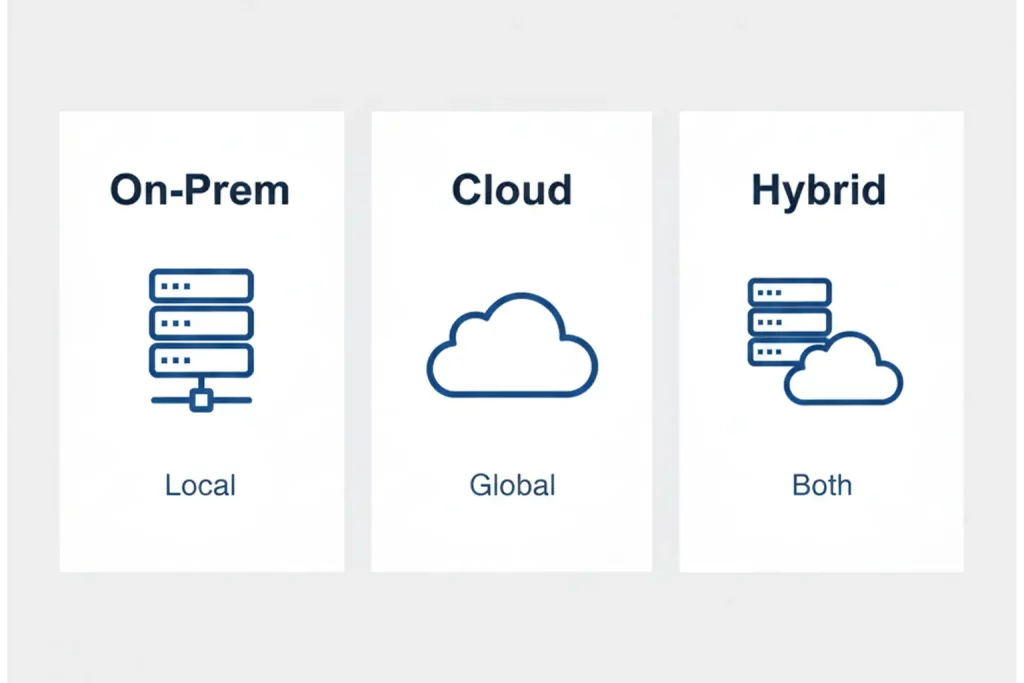

Cloud GPUs and TPUs chew through heavy vision and language tasks, while on-prem clusters handle sensitive material behind a firewall. Smart load balancing pushes peak demand to the cloud but retains steady-state tasks on local gear to control costs.
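The hybrid policy described here can be expressed as a small routing function. This is a sketch under stated assumptions: the `Job` fields, the queue-depth signal, and the capacity limit are hypothetical; production schedulers weigh cost, latency, and data-residency rules in far more detail.

```python
from dataclasses import dataclass


@dataclass
class Job:
    asset_id: str
    sensitive: bool  # e.g. embargoed footage or personal data


def route(job: Job, onprem_queue_depth: int, onprem_capacity: int) -> str:
    """Hypothetical hybrid policy: sensitive jobs never leave the firewall;
    other jobs use local GPUs until the queue is full, then burst to cloud."""
    if job.sensitive:
        return "on-prem"
    if onprem_queue_depth < onprem_capacity:
        return "on-prem"
    return "cloud"
```

Checking sensitivity first means a traffic spike can never push restricted material off-site.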

Key Use Cases in America

Media and Broadcast Archives

Networks migrate decades of tape to digital. AI tags every shot, enabling clip sales to documentary crews and fueling highlight reels for free ad-supported television channels. The Smithsonian Channel monetized two thousand unused hours of archival footage within the first year of deployment.

E-Commerce Product Cataloging

Retailers photograph thousands of SKUs weekly. AI labels color, pattern, size, and brand, then publishes listings before trends expire, giving merchants a competitive edge and higher conversion rates.

Museum and Digital Archives

Curators capture artifacts in ultra-high resolution. AI tags era, artist, medium, and provenance, allowing scholars worldwide to locate objects in seconds and license images for publications.

Educational Institutions and Online Libraries

Universities record lectures every day. Tagging marks each slide, reference, and topic so students jump to exam material instead of replaying full classes, boosting study efficiency.

Enterprise Video Content Repositories

Large firms produce training, town halls, and product demos. AI tags compliance rules, safety modules, and region-specific policies so employees watch the correct clip the first time.

How AI Metadata Tagging Works

Input Types: Text, Image, Video, Audio

Each media type flows through a custom pipeline. A narrated slide deck touches vision, OCR, and audio models before tags merge into one JSON record that your DAM ingests in real time.
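Merging per-model results into one record might look like the sketch below. It assumes each model emits tags with `label`, `start` (seconds), and `confidence` fields; these field names are hypothetical, not a DAM standard. When two models report the same label at the same time, the higher-confidence version wins and its source model is recorded.

```python
import json
from typing import Dict, List, Tuple


def merge_tags(asset_id: str, model_outputs: Dict[str, List[dict]]) -> str:
    """Combine per-model tag lists into a single JSON record, keeping the
    highest-confidence copy of any (label, start) duplicate."""
    best: Dict[Tuple[str, float], dict] = {}
    for source, tags in model_outputs.items():
        for tag in tags:
            key = (tag["label"], tag["start"])
            if key not in best or tag["confidence"] > best[key]["confidence"]:
                best[key] = {**tag, "source": source}
    ordered = sorted(best.values(), key=lambda t: (t["start"], t["label"]))
    return json.dumps({"asset_id": asset_id, "tags": ordered})


outputs = {
    "vision": [{"label": "chart", "start": 12.0, "confidence": 0.91}],
    "ocr": [{"label": "Q3 revenue", "start": 12.0, "confidence": 0.88}],
    "audio": [{"label": "Q3 revenue", "start": 12.0, "confidence": 0.95}],
}
print(merge_tags("deck-42", outputs))
```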

AI Models: Pre-Trained vs Custom

Pre-trained models ship quickly and cover common categories. Custom engines learn brand mascots, internal product codes, or niche sports, driving higher recall in specialized libraries.

Tag Generation and Confidence Scores

Every prediction carries a probability. Teams often set thresholds such as 0.85; clips below that route to human review, preserving accuracy and keeping throughput high. Dynamic thresholds adjust based on content criticality.
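Threshold routing, including stricter cut-offs for critical content, fits in a few lines. A minimal sketch: the category names and threshold values in `THRESHOLDS` are hypothetical examples built around the 0.85 default mentioned above.

```python
from typing import Dict, List, Tuple

# Hypothetical thresholds: stricter where a wrong tag is costly.
THRESHOLDS: Dict[str, float] = {"default": 0.85, "brand_safety": 0.95, "sports": 0.80}


def route_tags(tags: List[Tuple[str, str, float]]) -> Dict[str, List[str]]:
    """Split (label, category, confidence) predictions into auto-accepted
    tags and tags queued for human review."""
    result: Dict[str, List[str]] = {"accepted": [], "review": []}
    for label, category, confidence in tags:
        threshold = THRESHOLDS.get(category, THRESHOLDS["default"])
        bucket = "accepted" if confidence >= threshold else "review"
        result[bucket].append(label)
    return result
```

A 0.90 brand-safety tag goes to a reviewer, while a 0.82 sports tag is accepted, reflecting the different cost of a mistake in each category.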

Metadata Output Formats and Storage

Results export as JSON, XML, or CSV, either embedded in the asset or written to sidecar files. Embedded metadata travels with the asset, while sidecars keep raw files untouched. Connectors push tags into DAM, PAM, or MAM databases so search works in any interface.
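A JSON sidecar can be written without touching the media file at all. This sketch assumes a `clip.mov` to `clip.mov.json` naming convention, which is hypothetical; DAMs and editing tools each expect their own sidecar names and schemas.

```python
import json
from pathlib import Path
from typing import List


def sidecar_path(asset_path: Path) -> Path:
    """Assumed convention: clip.mov -> clip.mov.json in the same folder."""
    return asset_path.with_name(asset_path.name + ".json")


def write_sidecar(asset_path: Path, tags: List[dict]) -> Path:
    """Write tags beside the asset, leaving the media bytes untouched."""
    sidecar = sidecar_path(asset_path)
    payload = {"asset": asset_path.name, "tags": tags}
    sidecar.write_text(json.dumps(payload, indent=2), encoding="utf-8")
    return sidecar


def read_sidecar(asset_path: Path) -> List[dict]:
    return json.loads(sidecar_path(asset_path).read_text(encoding="utf-8"))["tags"]
```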

Best Practices for Deployment

Start With a Defined Tagging Taxonomy

Bring editors, marketers, and archivists together to agree on spelling, hierarchy, and depth. A clear vocabulary prevents synonym overload and guarantees clean dashboards.

Audit and Clean Existing Metadata

Purge duplicates, outdated terms, and misspelled tags. A tidy baseline helps the model learn accurate associations from day one and avoids false positives later.
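Misspelled tags often show up as rare near-copies of a frequent tag. The sketch below uses Python's standard `difflib.get_close_matches` to flag them; the 0.85 similarity cutoff is an assumption to tune, and every result is a suggestion for a human to confirm, not an automatic merge.

```python
import difflib
from collections import Counter
from typing import Dict, List


def find_suspect_variants(tags: List[str], cutoff: float = 0.85) -> Dict[str, List[str]]:
    """Flag tags that closely resemble a more frequent tag, a common sign
    of misspellings or alternate spellings."""
    counts = Counter(t.strip().lower() for t in tags)
    by_frequency = [t for t, _ in counts.most_common()]
    suspects: Dict[str, List[str]] = {}
    for tag in by_frequency:
        # Only compare against tags used more often than this one.
        more_common = [t for t in by_frequency if counts[t] > counts[tag]]
        matches = difflib.get_close_matches(tag, more_common, n=3, cutoff=cutoff)
        if matches:
            suspects[tag] = matches
    return suspects
```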

Integrate AI Into DAM Systems

REST APIs and webhooks send new uploads to the engine and push tags back when processing completes, eliminating manual import steps. Tools such as MetadataIQ provide ready-made connectors that shorten this phase to days, not months.
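Because a webhook endpoint accepts inbound requests, it should verify that each callback really came from the tagging engine. Many webhook providers sign payloads with HMAC-SHA256; this sketch assumes that scheme with a hypothetical shared secret and does not describe MetadataIQ's actual callback format.

```python
import hashlib
import hmac
import json

SHARED_SECRET = b"replace-with-a-real-secret"  # hypothetical; keep in a vault


def sign(body: bytes, secret: bytes = SHARED_SECRET) -> str:
    """Hex HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature: str, secret: bytes = SHARED_SECRET) -> dict:
    """Verify the signature before trusting the payload, then return the
    asset ID and tags for import into the DAM."""
    # compare_digest avoids leaking timing information about the signature.
    if not hmac.compare_digest(sign(body, secret), signature):
        raise PermissionError("signature mismatch; payload rejected")
    payload = json.loads(body)
    return {"asset_id": payload["asset_id"], "tags": payload.get("tags", [])}
```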

Continuous Learning From Human Corrections

Reviewers mark false positives in a browser dashboard. Overnight retraining reinforces correct labels, raising precision for the next batch without code changes.

Version Control and Metadata Updates

Track every tag, edit, or rollback. Version history protects against accidental deletion and satisfies auditors during legal discovery. Some teams store metadata snapshots in Git for transparent diffs.
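Git diffs show line-level changes in a stored snapshot; an asset-level summary is often easier for auditors to read. A minimal sketch, assuming each snapshot is a hypothetical `{asset_id: set_of_tags}` mapping loaded from two versions.

```python
from typing import Dict, List, Set


def diff_snapshots(old: Dict[str, Set[str]], new: Dict[str, Set[str]]) -> Dict[str, Dict[str, List[str]]]:
    """Report tags added and removed per asset between two snapshots."""
    changes: Dict[str, Dict[str, List[str]]] = {}
    for asset_id in sorted(old.keys() | new.keys()):
        before, after = old.get(asset_id, set()), new.get(asset_id, set())
        added, removed = sorted(after - before), sorted(before - after)
        if added or removed:
            changes[asset_id] = {"added": added, "removed": removed}
    return changes
```

Serializing snapshots with sorted keys and sorted tag lists keeps Git diffs stable, because unchanged assets produce identical lines.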

Challenges in AI Metadata Tagging

Ambiguity in Visual and Textual Contexts

A jaguar may be a big cat or a sports car. Context models read surrounding frames or narration, while reviewers resolve any remaining confusion to keep tags trustworthy.

Ethical and Legal Risks in Automated Classification

Labeling personal traits without consent can breach privacy law. Governance teams configure engines to skip sensitive attributes and create red-flag alerts for unusual classifications.

Metadata Overload or Redundancy

Too many low-value tags slow search. Confidence thresholds, category limits, and periodic tag-health reports keep libraries lean and high-signal.
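Confidence thresholds and category limits combine naturally into one pruning pass. In this sketch the `min_confidence` and `max_per_category` defaults are hypothetical values to tune against your own search logs.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def prune_tags(tags: List[Tuple[str, str, float]], min_confidence: float = 0.6,
               max_per_category: int = 3) -> List[str]:
    """Keep only confident tags and at most `max_per_category` per category,
    highest confidence first."""
    by_category: Dict[str, List[Tuple[float, str]]] = defaultdict(list)
    for label, category, confidence in tags:
        if confidence >= min_confidence:
            by_category[category].append((confidence, label))
    kept: List[str] = []
    for category in sorted(by_category):
        ranked = sorted(by_category[category], reverse=True)
        kept.extend(label for _, label in ranked[:max_per_category])
    return kept
```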

Alignment With Regulatory Compliance

Regulations such as the GDPR and the CCPA restrict how personal data is collected, stored, and retained. Hash or redact identifiers and schedule purges in line with retention policies.
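Hashing and redaction can both be done with the Python standard library. This sketch assumes a keyed hash (HMAC-SHA256) so that identifiers stay joinable across systems without exposing the raw value; the key and the email pattern are illustrative. Pseudonymized data generally still counts as personal data under the GDPR, so this complements retention and purge policies rather than replacing them.

```python
import hashlib
import hmac
import re

PSEUDONYM_KEY = b"store-this-in-a-secrets-manager"  # hypothetical key

# Simple illustrative email pattern; real PII detection needs more coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash: the same input always maps to the same token, and the
    token is hard to link back to the input without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact_emails(text: str) -> str:
    """Replace email addresses in transcripts or descriptions."""
    return EMAIL.sub("[REDACTED]", text)
```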

Ensuring Inclusivity in Tagging Terminology

Language changes fast. Quarterly taxonomy reviews replace outdated labels and incorporate community-preferred terms to keep metadata respectful.

Evaluating an AI Tagging Solution

Accuracy Benchmarks and Tagging Precision

Request F1 scores on assets similar to yours, then run a pilot with real footage. Compare AI output to a human-graded baseline before signing a contract.
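Scoring a pilot against a human-graded baseline takes only a few counts. This sketch computes micro-averaged precision, recall, and F1 over (asset, tag) pairs; exact-match comparison is an assumption, and pilots that allow synonyms or timecode tolerance need a looser matching rule.

```python
from typing import Dict, Set


def tag_scores(predicted: Dict[str, Set[str]], gold: Dict[str, Set[str]]) -> Dict[str, float]:
    """Micro-averaged precision, recall, and F1 of AI tags against a
    human-graded baseline, counting each (asset, tag) pair once."""
    tp = fp = fn = 0
    for asset_id in predicted.keys() | gold.keys():
        p, g = predicted.get(asset_id, set()), gold.get(asset_id, set())
        tp += len(p & g)   # tags both the AI and humans assigned
        fp += len(p - g)   # AI tags humans rejected
        fn += len(g - p)   # human tags the AI missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

For example, if the AI tags one asset with "goal", "crowd", and "rain" where reviewers chose "goal" and "crowd", and tags a second asset "interview" where reviewers chose "interview" and "podium", there are 3 true positives, 1 false positive, and 1 miss, so precision, recall, and F1 are all 0.75.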

Compatibility With DAM or CMS

Native connectors or open standards shorten implementation and cut custom development costs.

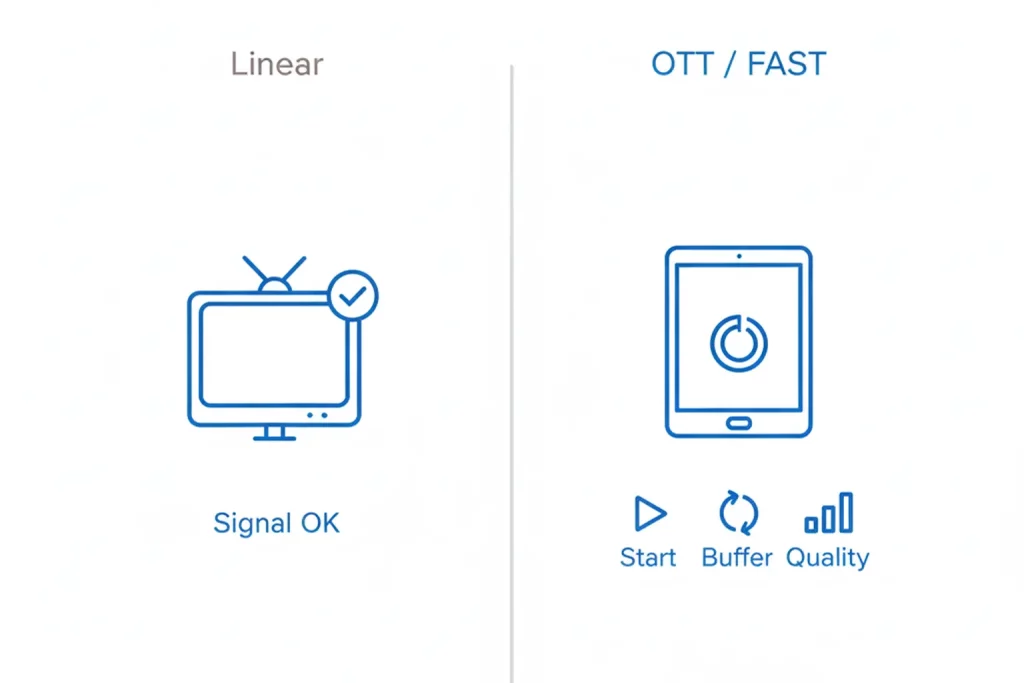

Real-Time vs Batch Tagging Capabilities

Live sports need sub-second latency, while film archives run overnight jobs. Pick a platform that meets your deadline requirements and can grow with demand.

Training Data Transparency

Ask vendors where they source annotations and how they protect intellectual property. Transparent processes reveal bias risks and inform governance.

Support for Multilanguage Metadata

Global teams require Spanish, French, Mandarin, and more. Automatic language detection routes assets to the correct speech model to preserve accuracy worldwide.

Future of AI Metadata Tagging

Predictive and Behavioral Metadata

Algorithms will tag pause points, rewind loops, and watch-time curves, giving producers insight into viewer engagement and guiding edits that boost retention.

Personalized Metadata for Audience Segments

Streaming apps will add mood, genre blend, and difficulty level tags, producing hyper-personalized feeds that satisfy viewers faster and cut churn.

Voice-to-Metadata Tagging in Live Streams

Real-time speech recognition will insert keywords into live feeds, letting digital teams publish highlights within seconds of on-air moments.

Integration With Generative AI Tools

Generative models will write alt text, social synopses, and thumbnail captions from tag data, trimming delivery steps to a single click.

AI-Driven Rights and Licensing Metadata

Smart contracts will link ownership data to tags, automating royalty payments and alerting producers when licenses near expiration.

AI Metadata Tagging in Post-Production Workflows

Enhancing Frame-Level Search

Frame-accurate markers guide editors to action shots without manual scrubbing, saving hours during highlight assembly.

Syncing Tags With Editing Timelines

Plugins drop tags onto timeline markers automatically, so assistants focus on creative cuts, not data entry.

Automating Closed Captioning and Compliance

Speech tags feed caption engines while profanity classifiers flag issues, keeping content accessible and regulation compliant. Tools like TranceIQ merge these steps in one interface.

AI Tagging and Content Monetization

Creating Sellable Archives With Auto-Tagging

Detailed tags make obscure footage discoverable on licensing marketplaces, generating revenue from material once gathering dust.

Linking Metadata to Licensing and Distribution

Rights tags specify territories, windows, and contract details, preventing accidental releases in restricted markets.

Contextual Advertising Through Metadata Insights

Ad servers match scene mood, location, and theme to brand campaigns, raising click-through rates without manual targeting.

Performance Monitoring and Quality Assurance

Manual Review Panels and Override Protocols

Low-confidence tags appear in dashboards where reviewers confirm, reject, or edit. The loop protects accuracy and trains the model.

Tag Confidence Scoring and Revalidation

Scheduled audits compare current predictions with a gold standard. Low-performing categories receive extra training data during the next sprint.

Regular Accuracy Audits and Feedback Loops

Monthly scorecards report precision, recall, and coverage. Clear metrics keep executives informed and budgets justified.

Integrating Metadata AI Across Departments

Editorial and Content Teams

Writers locate footage in seconds and repurpose evergreen material for fresh stories. Faster discovery means richer narratives across channels.

Legal and Compliance Teams

Automated tags surface restricted content before publication, helping organizations avoid fines and damaged reputation.

Marketing and SEO Teams

Structured data feeds schema markup that search engines reward with higher visibility, drawing qualified traffic to owned platforms.

Product and Engineering Teams

Developers build recommendation engines and analytics dashboards on structured metadata, improving the user experience and shaping product roadmaps.

Implementation Roadmap

Week 1: Discovery and Taxonomy Design

Workshops define goals, sources, and vocabulary in partnership with stakeholders.

Weeks 2-3: Integration and Pilot

Developers plug APIs into the DAM, ingest a pilot batch, and generate initial tags for review.

Week 4: Accuracy Review

Stakeholders evaluate tags, adjust thresholds, and fine-tune taxonomies for maximum relevance.

Weeks 5-6: Training and Rollout

Editors receive hands-on training. Automation scales to the entire library once benchmarks pass.

Ongoing: Measure and Refine

Monthly reports track precision, coverage, and revenue gains, informing continual improvement.

Parallel Testing Environment

A sandbox mirrors production so you can A/B test AI tags against legacy manual tags and measure search-time savings.

KPI Alignment Workshops

Product owners and analysts set precision, recall, and search-time targets, keeping every team focused on measurable success.

Change Management and Training

Video tutorials, virtual office hours, and quick quizzes reinforce best practices and drive adoption.

Post-Launch Optimization

Quarterly reviews retire obsolete tags, add new model classes, and align taxonomy updates with changing business goals.

Measuring ROI on AI Metadata Tagging

Direct Cost Savings

Track the drop in logging hours and compare it with subscription fees. Some teams report breaking even within a single quarter.
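The break-even comparison is simple arithmetic once you have three numbers. This sketch uses invented example figures, not benchmarks: monthly labor savings, the subscription fee, and a one-time setup cost.

```python
import math


def break_even_months(hours_saved_per_month: float, hourly_cost: float,
                      monthly_fee: float, one_time_cost: float) -> float:
    """Months until cumulative net savings cover the one-time setup cost.
    Returns math.inf if savings never exceed the monthly fee."""
    net_monthly = hours_saved_per_month * hourly_cost - monthly_fee
    if net_monthly <= 0:
        return math.inf
    return one_time_cost / net_monthly


# Invented example: 400 logging hours saved at $45/hour, a $6,000 monthly
# fee, and $30,000 of integration work.
print(break_even_months(400, 45, 6_000, 30_000))  # 2.5
```

Here net savings are $18,000 minus $6,000, or $12,000 a month, so the $30,000 setup pays back in 2.5 months.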

Revenue Uplift

Rich tags unlock archival footage for syndication, social clips, and brand partnerships. Measure new dollars tied directly to tagged assets.

Risk Reduction

Compliance tags catch policy violations before distribution. Avoided fines count toward tangible return.

Productivity Gains

Monitor the reduction in search time per asset and the increase in finished projects per editor. Redeploy saved hours to creative development.

Data Governance and Security Best Practices

- Encrypt files at rest with AES-256 and in transit with TLS.

- Apply role-based access controls so only cleared staff view restricted tags.

- Log every tag creation, edit, or delete to satisfy audit teams.

- Store off-site metadata backups in resilient zones.

- Review vendor SOC 2 reports and ISO/IEC 27001 certificates every year.

Cross-Industry Adoption Trends

News and Sports

Live clipping crews rely on instant player and logo detection. Rapid tags feed on-screen tickers and social media within minutes.

Healthcare and Life Sciences

Hospitals tag imaging data so researchers find rare cases while anonymization features protect patient privacy.

Government Agencies

Public records offices digitize sessions and generate searchable transcripts that improve transparency for citizens.

Automotive and Manufacturing

Engineers review thousands of hours of test-track video. Vision models tag every lane change, collision-avoidance moment, and pedestrian interaction, speeding safety validation.

Social Media Platforms

User uploads flow around the clock. AI filters harmful content, tags musical genre, and feeds personalization engines that keep audiences engaged.

Cost Models and Budget Planning

Vendors usually price AI tagging by hours processed or API calls. Enterprise licenses often bundle unlimited throughput with an annual commitment. Plan budgets by considering:

- Ingest Volume: Measure archive size and monthly acquisition.

- Peak Concurrency: Live events spike usage. Some plans charge premiums for real-time service-level agreements.

- Storage Footprint: Tags alone add only kilobytes per asset, but transcripts and sidecar files can triple the metadata storage footprint.

- Review Labor: Even at ninety-percent precision, allocate reviewer time for edge cases.

- Change Management: Budget for training, taxonomy workshops, and integration development.

Clear financial forecasts avoid surprises and speed leadership approval.
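The recurring budget factors above can be rolled into a rough monthly estimate. This sketch is built on assumptions: per-hour pricing, a flat multiplier for live work, reviewer time proportional to the share of media flagged for review, and a flat storage line. All field names and figures are hypothetical. One-time change-management costs (training, workshops, integration) are easier to budget separately.

```python
from dataclasses import dataclass


@dataclass
class TaggingBudget:
    media_hours: float                  # monthly ingest: backlog share plus new acquisition
    rate_per_hour: float                # vendor price per processed hour
    live_hours: float                   # hours needing real-time SLAs
    live_multiplier: float              # premium on the rate for live work
    review_share: float                 # fraction of media routed to reviewers
    review_hours_per_media_hour: float  # reviewer effort per flagged media hour
    reviewer_rate: float                # loaded cost per reviewer hour
    storage_monthly: float              # transcripts, sidecars, backups

    def monthly_total(self) -> float:
        batch_hours = self.media_hours - self.live_hours
        processing = (batch_hours + self.live_hours * self.live_multiplier) * self.rate_per_hour
        review = (self.media_hours * self.review_share
                  * self.review_hours_per_media_hour * self.reviewer_rate)
        return processing + review + self.storage_monthly


# Invented figures for illustration only.
plan = TaggingBudget(1000, 2.0, 100, 1.5, 0.10, 0.25, 40.0, 150.0)
print(round(plan.monthly_total(), 2))  # 3250.0
```

With these inputs, processing costs $2,100, review labor $1,000, and storage $150.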

Conclusion

AI metadata tagging moves assets from hidden to high value. It trims manual hours, accelerates compliance, and opens new revenue streams. Begin with a single collection, measure the lift, then expand. Digital Nirvana can guide the journey. Reach out and discover how quickly your library performs when every file speaks a common language.

A well-tagged archive grows in worth daily. The earlier you start, the faster you benefit.

Our Services at Digital Nirvana

Digital Nirvana weaves AI metadata tagging into your existing stack without disrupting daily production. MetadataIQ connects to Avid, Adobe, or any standards-based DAM, creates speech-to-text and visual markers, and writes them back as searchable locators. Editors jump straight to the money shot.

When captions or multilingual subtitles matter, TranceIQ automates transcription and captioning across thirty source languages and more than one hundred targets. Both SaaS tools share one secure cloud platform, one API, and role-based permissions, so your team manages a single workflow instead of many.

Because MetadataIQ and TranceIQ share a common data layer, any tag, transcript, or caption created once becomes discoverable across every department, boosting reuse and accelerating monetization.

FAQs

How accurate is AI metadata tagging?

Leading engines reach roughly ninety-percent precision on clean content. Accuracy climbs higher after custom training on domain footage.

What file formats work with AI tagging?

Most platforms ingest MP4, MOV, MXF, WAV, and standard image formats. Check vendor sheets for specialty codecs.

Does AI tagging replace human catalogers?

No. AI handles repetitive work, while humans resolve edge cases, refine taxonomies, and add creative context.

How long does deployment take?

Cloud integrations often finish in weeks. On-prem installs may add time for security reviews.

Is AI metadata tagging secure?

Reputable vendors encrypt data in transit and at rest, provide SOC 2 reports, and offer private-cloud options for sensitive projects.