Introduction

Modern newsrooms are asked to do more with less: more platforms, more formats, more live hits, more on-demand content, and more pressure to publish first without sacrificing accuracy. The bottleneck is rarely the journalists or the gear; it is the friction between finding the right material and getting it to air or online in time.

That friction is a metadata problem.

Newsroom automation used to mean rundowns and playout control. Today, it is equally about AI indexing for news: automatically turning every feed, file, and live segment into structured, searchable metadata that drives your production tools. When your media is richly indexed, your journalists and producers stop scrubbing and start storytelling.

Digital Nirvana’s MetadataIQ is built for exactly this world: AI-driven metadata, real-time indexing, and newsroom-ready integrations that let you automate the boring parts of news production while keeping humans firmly in charge of the story.

What newsroom automation really means in 2025

Newsroom automation has evolved far beyond simple device control or MOS playlists. In leading organizations, it now covers:

- Ingest and logging of live and file-based sources

- Automatic creation of captions, transcripts, and metadata

- Story-centric workflows that follow a piece from idea to multi-platform output

- Assisted editing and highlights for fast-turn news and social content

AI plays a growing role, but not to “write the news” for you. Instead, it:

- Automates repetitive, low-value tasks like tagging and basic formatting

- Surfaces relevant clips and context faster for reporters

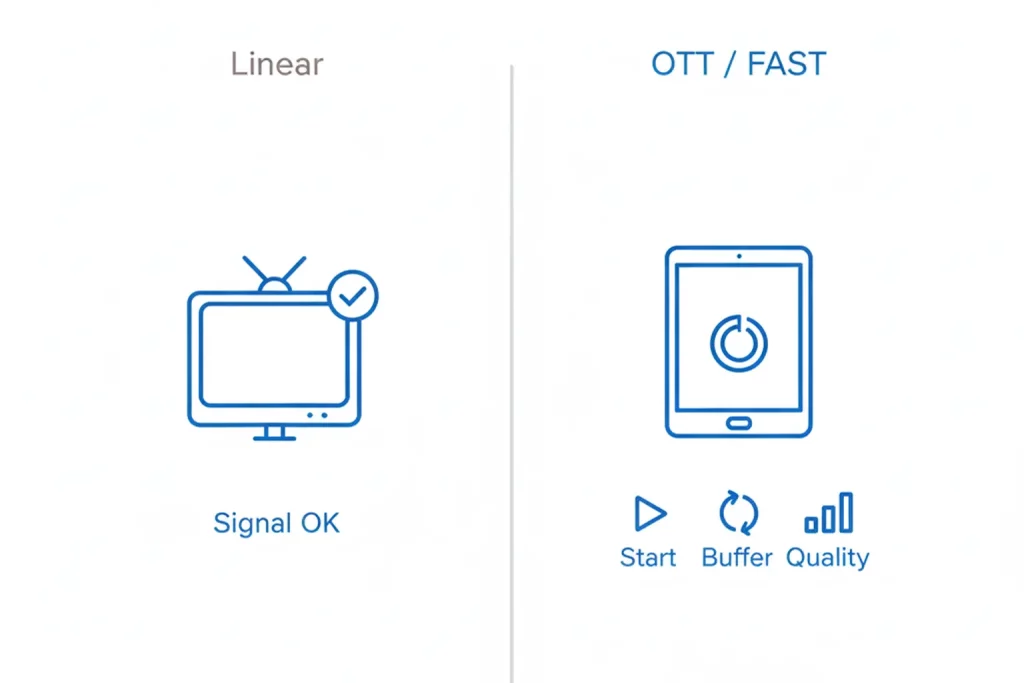

- Keeps content organized as volume explodes across linear, OTT, and digital,

In this landscape, automation driven by weak metadata is just faster chaos.

Why metadata is the backbone of newsroom automation

Metadata is the control layer of a modern newsroom:

- It tells your NRCS and MAM what each asset is, who is in it, and what can be done with it

- It drives search in your PAM/MAM and cloud platforms

- It informs which clips are safe to reuse, where rights apply, and what needs extra review

- It powers recommendation, personalization, and archive monetization downstream

Without good newsroom metadata:

- Rundowns are harder to build because producers cannot quickly find the right soundbite

- Journalists recreate packages because they cannot locate previous coverage

- Compliance and legal teams rely on manual spot checks instead of time-coded evidence

Newsroom automation only pays off when the underlying metadata is rich, accurate, and kept up to date automatically.

AI indexing for news: what it is and how it works

AI indexing for news means using machine learning to monitor and analyze your content, then generate structured metadata that humans and systems can use.

For newsrooms, that typically includes:

- Speech-to-text: time-coded transcripts for every clip and live feed

- Speaker identification: who is talking, when they start, and when they stop

- Visual detection: faces, logos, lower-thirds, and on-screen text

- Topic and entity extraction: people, places, organizations, issues, and keywords

- Rules-based tagging: political ads, branded content, sensitive categories, and region-specific flags

Once generated, this metadata is pushed into your PAM/MAM and newsroom systems so producers and reporters can:

- Search across feeds and archives by topic, name, quote, or event

- Jump straight to relevant moments on the timeline

- Build packages faster by using pre-indexed content rather than manually scrubbing feeds

In short, AI indexing turns your newsroom’s raw video and audio into a living, searchable knowledge base.
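To make the idea of a searchable knowledge base concrete, here is a minimal Python sketch of time-coded segment records and a search that returns in-points an editor could jump to. The `Segment` schema, the `search` and `timecode` helpers, the 30 fps non-drop-frame timecode, and the sample data are all hypothetical illustrations; they are not MetadataIQ's data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One time-coded unit of indexed media (hypothetical schema)."""
    asset_id: str
    start_s: float  # segment start, in seconds from the start of the asset
    end_s: float    # segment end, in seconds from the start of the asset
    speaker: str
    text: str
    entities: list[str] = field(default_factory=list)


def timecode(seconds: float, fps: int = 30) -> str:
    """Render seconds as HH:MM:SS:FF, assuming non-drop-frame timecode."""
    total_frames = round(seconds * fps)
    frames = total_frames % fps
    total_s = total_frames // fps
    return f"{total_s // 3600:02d}:{total_s // 60 % 60:02d}:{total_s % 60:02d}:{frames:02d}"


def search(index: list[Segment], query: str) -> list[tuple[str, str, str]]:
    """Return (asset_id, in-point, speaker) for every segment whose transcript,
    speaker name, or extracted entities contain the query (case-insensitive)."""
    q = query.lower()
    hits = []
    for seg in index:
        fields = [seg.text.lower(), seg.speaker.lower()] + [e.lower() for e in seg.entities]
        if any(q in f for f in fields):
            hits.append((seg.asset_id, timecode(seg.start_s), seg.speaker))
    return hits


# Hypothetical sample data: two consecutive segments from one ingested feed.
index = [
    Segment("feed-0412", 62.5, 71.0, "Mayor", "We will reopen the bridge on Monday.", ["bridge"]),
    Segment("feed-0412", 71.0, 80.0, "Reporter", "Residents have waited months.", []),
]
print(search(index, "reopen the bridge"))  # prints [('feed-0412', '00:01:02:15', 'Mayor')]
```

A linear scan keeps the example short; at newsroom scale the same role would be played by an inverted index in a search engine, but the principle of returning a timecode rather than a whole file is the same.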

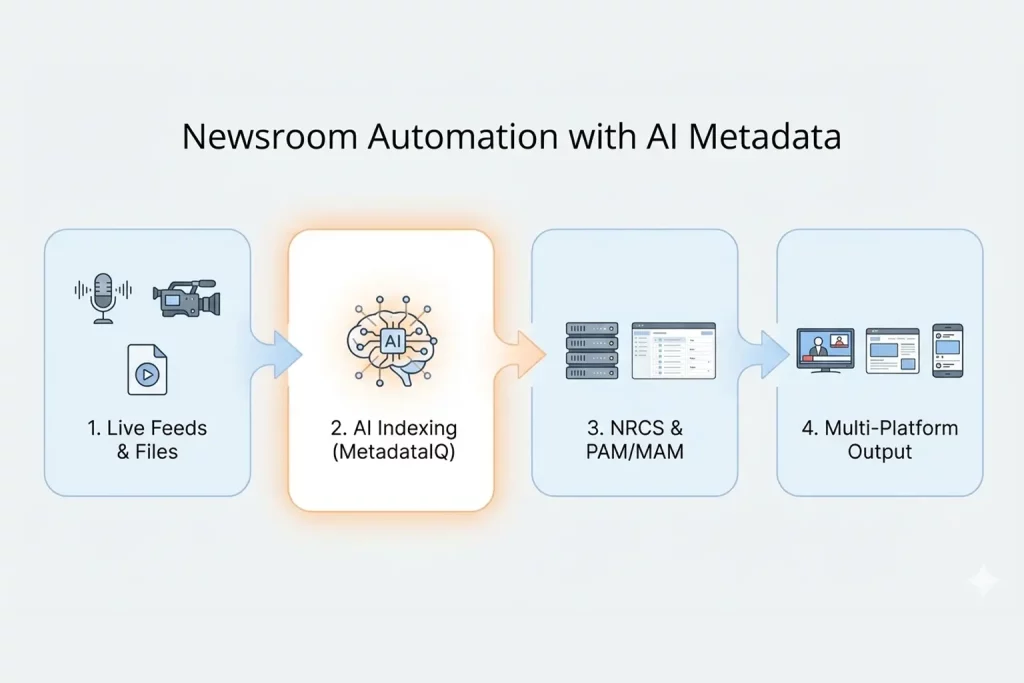

Where AI metadata automation fits in the newsroom workflow

To understand how AI metadata automation transforms newsroom automation, it helps to map it against the typical news chain.

- Ingest and acquisition

- As live feeds, agency wires, and remote contributions arrive, AI generates time-coded transcripts and baseline tags.

- MOS-connected systems and MAM platforms receive descriptive metadata alongside the essence, keeping NRCS views in sync with servers and cloud systems.

- Logging and story prep

- Instead of traditional manual logging, producers get pre-indexed feeds with speakers, topics, and key quotes already marked.

- Planning editors can quickly assess angles, compare related material, and reporters’ targeted source clips.

- Writing, editing, and versioning

- Editors assemble packages using AI-generated markers to find the exact bite they need.

- Digital teams can create web and social versions in parallel by searching the same indexed pool. ,

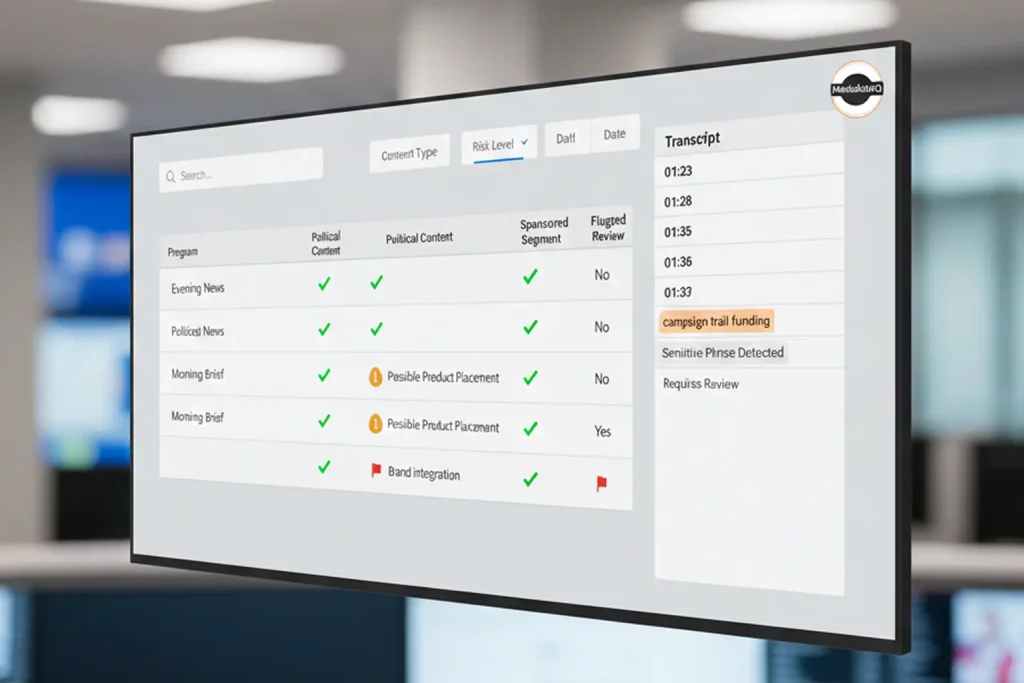

- Compliance and standards

- Time-coded tags identify political content, paid segments, sponsorships, and sensitive material.

- Standards teams work from a filtered list of candidate segments instead of watching every minute.

- Archive, and re-use

- When AI indexing is applied to archives, years of historic reporting become discoverable by theme, person, or event.

- Long-tail stories, explainers, and anniversaries become easier to produce because footage is no longer “lost” in storage.

At every stage, newsroom automation becomes more “intelligent” as the content itself drives decisions.
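One example of content driving decisions is the compliance and standards stage, where rules-based tags turn hours of programming into a short review queue. The Python sketch below assumes simple regular-expression rules applied to time-coded segment text; real systems, MetadataIQ included, may combine rules with model-based classifiers. The rule names, patterns, `flag_segments` function, and sample segments are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Segment:
    """A time-coded transcript segment (hypothetical schema)."""
    asset_id: str
    start_s: float
    end_s: float
    text: str


# Hypothetical rule set: flag name -> patterns, matched case-insensitively.
RULES: dict[str, list[str]] = {
    "political": [r"\bpaid for by\b", r"\bcandidate\b", r"\bballot\b"],
    "sponsored": [r"\bsponsored by\b", r"\bbrought to you by\b"],
}
COMPILED = {flag: [re.compile(p, re.IGNORECASE) for p in pats] for flag, pats in RULES.items()}


def flag_segments(segments: list[Segment]) -> list[tuple[str, float, float, list[str]]]:
    """Keep only segments matching at least one rule, attach their flags,
    and order the result by asset and start time as a review queue."""
    queue = []
    for seg in segments:
        flags = sorted(
            flag for flag, pats in COMPILED.items() if any(p.search(seg.text) for p in pats)
        )
        if flags:
            queue.append((seg.asset_id, seg.start_s, seg.end_s, flags))
    return sorted(queue, key=lambda item: (item[0], item[1]))


# Hypothetical sample: only the first two segments should reach reviewers.
segments = [
    Segment("promo-7", 0.0, 15.0, "This message was paid for by Citizens for Parks."),
    Segment("show-22", 300.0, 312.0, "Weather is brought to you by Acme Roofing."),
    Segment("show-22", 120.0, 130.0, "Traffic is light on the interstate."),
]
for item in flag_segments(segments):
    print(item)
```

Pattern-based rules keep every flag explainable, which helps when legal teams ask why a segment was marked; the trade-off is that they miss phrasings nobody wrote a rule for.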

From manual logging to AI-first: impact on search and storytelling

Moving from manual metadata to AI-first indexing delivers three tangible benefits.

Faster search and clip turnaround

- Journalists and editors can search for exact quotes, names, and topics within your PAM/MAM without having to guess timecodes.

- Producers turn breaking clips and explainers around faster because they start from an indexed pool, not a pile of unlogged feeds.

Smarter story development

- AI indexing automatically surfaces related coverage, background interviews, and archive materials, helping teams build deeper, more contextual stories.

Lower operational drag

- Manual logging hours drop dramatically, freeing up staff for higher-value editorial work.

- Automation reduces repetitive tasks while keeping humans in control of framing, verification, and ethics.

The result is newsroom automation that drives better journalism instead of just faster throughput.

Inside MetadataIQ: AI indexing designed for newsrooms

MetadataIQ from Digital Nirvana is purpose-built to bring AI indexing for news into real newsroom environments without forcing a rip-and-replace project.

Key capabilities for newsroom automation include:

AI-driven metadata generation

- Broadcast-tuned speech-to-text that delivers time-coded transcripts for live and recorded news.

- Detection of faces, logos, lower-thirds, and on-screen text to identify guests, anchors, brands, and locations.

- Topic detection and keyword extraction aligned to your newsroom’s beats and style.

Seamless PAM/MAM and NRCS integration

- Deep integrations with Avid-based environments and APIs for broader PAM/MAM stacks.

- Metadata and markers are written directly back into your existing systems, so editors work in familiar tools with richer context.

Real-time and batch indexing

- Real-time indexing for breaking news, live events, and rolling coverage.

- Batch processing for archive libraries and legacy content that still drives today’s storytelling.

Compliance-aware metadata

- Rules-based tagging to help track political advertising, sponsored content, brand mentions, and regulatory categories alongside your existing compliance logging tools.

Operational dashboards

- Dashboards that show metadata coverage, processing status, and quality across channels and workflows, giving ops and editorial leaders real visibility into how automation is performing.

In practice, MetadataIQ lets you plug AI metadata automation into your newsroom stack and see value quickly, without asking journalists to change the way they write, edit, or publish.

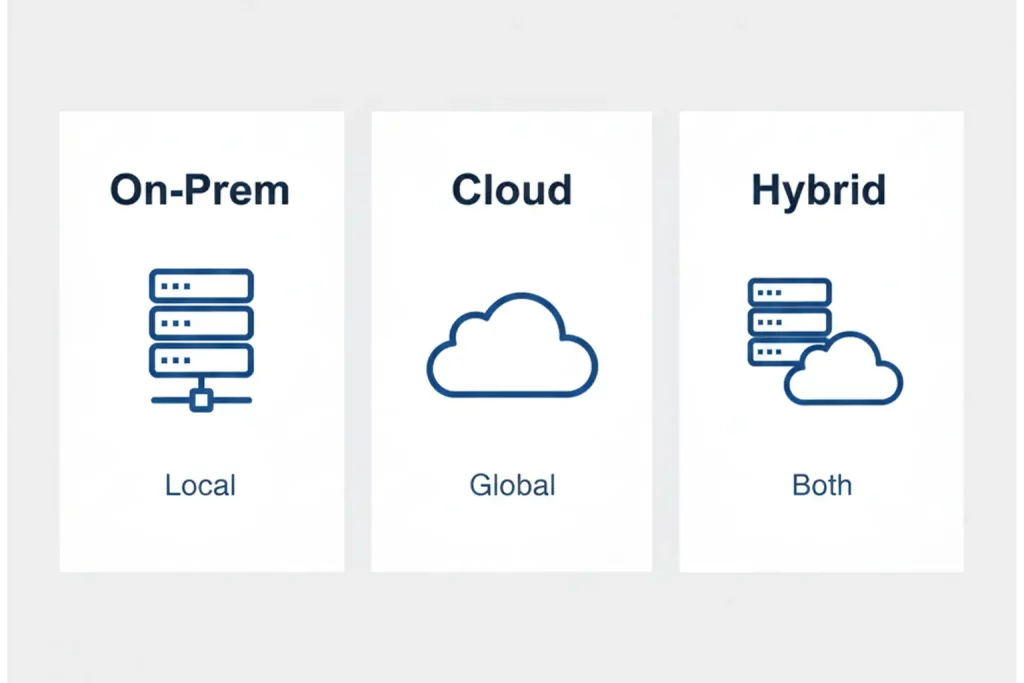

Implementation roadmap: rolling out AI metadata in your NRCS and PAM/MAM

To turn newsroom automation from a buzzword into a measurable improvement, it helps to follow a structured rollout.

- Clarify your newsroom goals

  - Faster breaking news turnarounds

  - More reuse of archive footage

  - Better compliance logging and auditability

  - Multi-platform publishing efficiency

- Map your current metadata reality

  - How are feeds ingested and logged today?

  - Where are the biggest search and retrieval bottlenecks?

  - Which systems hold the “source of truth” for news video and audio?

- Start with a focused AI indexing pilot

  - Choose one or two high-impact workflows, such as breaking news or a daily show.

  - Integrate MetadataIQ with your PAM/MAM and NRCS for those workflows, and benchmark search time, clip turnaround, and reuse.

- Tune models and rules for your newsroom

  - Align AI tags with your editorial vocabulary and beats.

  - Configure rules for political, branded, and sensitive content to support standards and legal teams.

- Scale across desks and platforms

  - Extend AI indexing for news to more channels, regional bureaus, and archives.

  - Use dashboards and analytics to prove ROI and continuously refine metadata quality.

Digital Nirvana typically engages as a workflow partner rather than a simple vendor, helping broadcast and digital newsrooms align MetadataIQ to their specific production realities.

FAQs

Does AI indexing for news replace journalists?

No. AI indexing for news is focused on automating repetitive tasks like logging, tagging, and search, not on writing your stories. Journalists still handle reporting, verification, editorial judgment, and storytelling. AI lets them spend less time scrubbing feeds and more time doing journalism.

How is MetadataIQ different from generic transcription tools?

Generic tools usually stop at a text transcript. MetadataIQ is built for newsrooms and broadcasters: it generates time-coded transcripts, visual tags, topics, and rules-based markers, then pushes all that metadata back into your PAM/MAM and NRCS, so it is actually usable in production.

Can MetadataIQ index live news in real time?

Yes. MetadataIQ supports real-time metadata generation for live news and events, allowing producers to search and clip from in-progress feeds, not just archived files. This is critical for competitive breaking news and rolling coverage.

Which systems does MetadataIQ integrate with?

MetadataIQ is designed for Avid-centric newsrooms, and also connects via APIs to a wide range of PAM, MAM, compliance logging, and cloud platforms, so metadata flows across your entire news chain rather than being trapped in a single system.

How quickly can a newsroom see results?

Most newsrooms see early wins within an initial pilot, measured in faster search, reduced manual logging, and improved clip turnaround, before scaling to additional shows, bureaus, and archives. Because MetadataIQ plugs into existing tools, time-to-value is driven more by workflow decisions than by technology deployment.

Conclusion

Newsroom automation is no longer just about device control and playlists; it is about how efficiently your teams can find, understand, and reuse what you have already captured. In that world, metadata is not a side project. It is the backbone of your entire operation.

AI indexing for news turns that backbone into a living system. Every feed, every package, every archive reel becomes searchable by the way journalists actually think: people, places, topics, quotes, events. Instead of burning time on manual logging and guesswork, your teams can focus on crafting stronger stories across every platform.

Digital Nirvana’s MetadataIQ is built to make that shift real. It brings AI-driven metadata automation directly into your newsroom’s PAM/MAM and NRCS stack, giving you faster search, smarter storytelling, and more resilient compliance, without asking your staff to abandon the tools they rely on every day.

If your newsroom is ready to move from manual logging to smarter newsroom automation, MetadataIQ is the logical next step.