“AI-powered transcription” sounds the same on every vendor website, but if you’re running a real broadcast operation, you already know the truth:

- It’s not just about turning speech into text.

- It’s about turning hours of content into instantly searchable, production-ready assets inside Avid and your PAM/MAM.

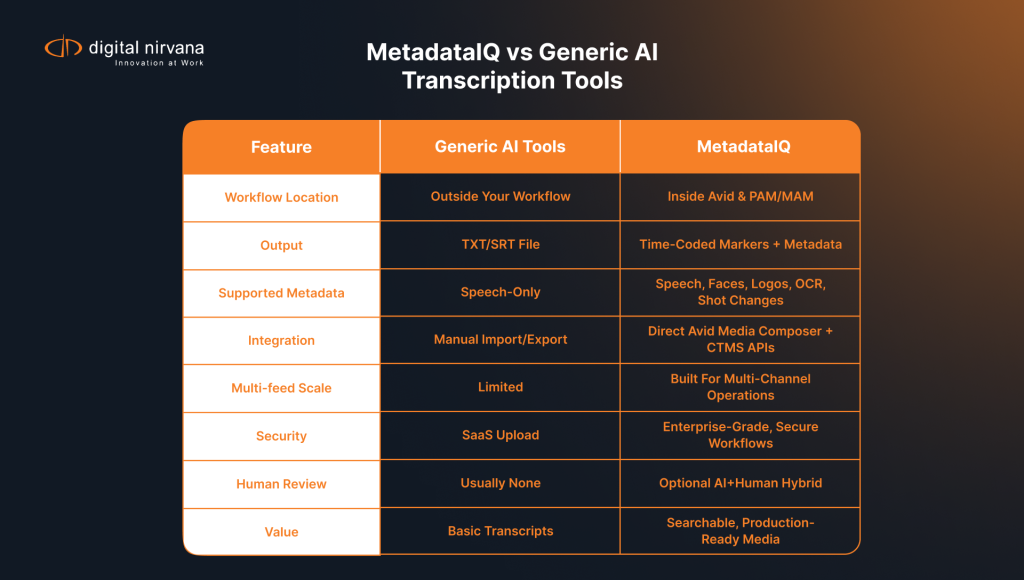

Most generic AI tools stop at a downloadable transcript file. Useful for meetings and podcasts; not nearly enough for live news, sports, or promo-heavy channels.

MetadataIQ, from Digital Nirvana, was built specifically for broadcasters. It uses AI and machine learning to auto-generate speech-to-text and video intelligence metadata, then injects that metadata directly into your Avid workflows as time-coded markers, so your team spends less time scrubbing and more time creating content.

Let’s break down what that means in practice and why MetadataIQ beats generic AI-powered transcription tools for broadcasters.

What “AI-Powered Transcription” Should Mean in a Broadcast Environment

For a broadcaster, AI transcription must do more than spit out a text file. At minimum, it needs to:

- Understand broadcast audio – anchors, field reporters, crowd noise, overlapping speech.

- Stay locked to timecode – so text and video stay in sync on your timeline.

- Feed your NLE and PAM/MAM – not just your downloads folder.

- Scale to multi-channel, multi-feed operations.

- Support compliance, ad sales, and archive teams, not just editorial.

Digital Nirvana’s MetadataIQ is designed to address exactly these needs: it automates extracting media from Avid, generating AI-powered transcripts and video intelligence, and ingesting that data back as markers tied to your assets. Generic tools aren’t built for that. They’re built for “upload a file, get a transcript.”
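Staying locked to timecode largely comes down to converting each transcript offset into a frame-accurate position. The sketch below is a minimal illustration, not MetadataIQ's implementation: the function name `seconds_to_timecode` and the 25 fps default are assumptions, and it handles only non-drop-frame timecode (29.97 fps drop-frame material needs extra handling).

```python
def seconds_to_timecode(seconds: float, fps: int = 25) -> str:
    """Convert an offset in seconds to non-drop-frame HH:MM:SS:FF.

    Hypothetical helper for illustration; fps must be an integer frame rate.
    """
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    if fps <= 0:
        raise ValueError("fps must be positive")

    # Snap to the nearest whole frame so text and video stay aligned.
    total_frames = round(seconds * fps)
    frames = total_frames % fps
    total_seconds = total_frames // fps

    hours, remainder = divmod(total_seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"
```

A word spoken 12.4 seconds into a 25 fps clip would land on `seconds_to_timecode(12.4)`, which is the position a marker for that word would need to carry.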

Where Generic AI Transcription Tools Fall Short for Broadcasters

1. They Live Outside Your Workflow

With generic tools, you typically:

- Export or upload media

- Wait for transcription

- Download a text or caption file

- Manually import or copy/paste into Avid or your MAM

Every step adds friction. None of it is time-coded metadata living natively in Media Composer or MediaCentral.

MetadataIQ difference: it integrates with Avid Media Composer and MediaCentral through CTMS APIs to extract media and send speech-to-text and video intelligence metadata back as markers directly in your timeline, with no manual moves, proxies, or sidecar juggling.

2. They Don’t Understand “Metadata” the Way Broadcasters Do

Generic services see transcription as the end product. Broadcasters see it as the first layer of metadata.

MetadataIQ goes further by generating:

- Speech-to-text

- Facial recognition (who appears)

- Logo and object detection (which brands/products show)

- OCR (on-screen text)

- Shot changes and other video-intelligence cues

All of this is written back as time-indexed markers so your team can search, filter, and jump exactly where they need.
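To make "time-indexed markers you can search and filter" concrete, here is a minimal sketch of one way such markers could be modeled. The `Marker` fields, the `find_markers` helper, and the sample data are all assumptions made for illustration; they are not the Avid marker schema or MetadataIQ's data model.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Marker:
    """Hypothetical time-indexed marker; field names are illustrative."""
    start_seconds: float
    kind: str   # e.g. "speech", "face", "logo", "ocr", "shot"
    text: str   # transcript snippet, person name, brand, or on-screen text


def find_markers(markers: Iterable[Marker], term: str,
                 kinds: Optional[Set[str]] = None) -> list:
    """Return markers whose text contains `term`, ordered by time.

    Matching is case-insensitive; `kinds` optionally restricts marker types.
    """
    needle = term.casefold()
    hits = [
        m for m in markers
        if needle in m.text.casefold() and (kinds is None or m.kind in kinds)
    ]
    return sorted(hits, key=lambda m: m.start_seconds)


# Invented sample data, for demonstration only.
SAMPLE_MARKERS = [
    Marker(95.0, "logo", "Acme"),
    Marker(12.0, "speech", "Tonight's match is sponsored by Acme"),
    Marker(40.5, "face", "Jane Doe"),
    Marker(41.0, "ocr", "JANE DOE - REPORTER"),
]
```

With this shape, `find_markers(SAMPLE_MARKERS, "acme")` returns both the spoken mention and the logo appearance in time order, while passing `kinds={"logo"}` narrows the result to on-screen brand appearances.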

3. They Don’t Live “Inside Avid”

Most generic tools end at an SRT or TXT file. It’s still your team’s job to make that file useful.

MetadataIQ was designed for the Avid ecosystem:

- Off-the-shelf integration for Avid-based PAM/MAM users

- Support for Avid CTMS APIs, so even non-Interplay environments can use it

- Automatic ingest of speech-to-text and other metadata as color-coded markers inside Media Composer and MediaCentral

Editors can type a search term in Avid, find the right clip, and cut without any external tools or scripts.

4. They Don’t Combine AI with Broadcast-Grade Services

Many generic tools only produce machine output. For consumer use, that’s fine. For broadcast, it’s risky.

Digital Nirvana pairs MetadataIQ with Trance and other in-house services to offer:

- AI-generated transcripts for speed and scale

- Human-curated transcripts, captions, and translations for premium or compliance-critical content

- Unified workflows for transcripts, captions, and translations in all industry-supported formats

You choose where you need “good enough” automation and where you need “broadcast-grade” accuracy.

5. They Don’t Address Security and Compliance Requirements

Media files often carry rights restrictions, embargoes, and regulatory risk. Shipping raw content to generic SaaS tools can raise internal flags.

MetadataIQ is documented as a secure, enterprise-grade solution:

- Designed to sit on customer infrastructure and manage low-res proxies or audio-only versions as needed

- Temporary, encrypted storage in the cloud with TLS-secured transfers and controlled retention

- Proven deployments across news, sports, and entertainment customers with compliance logging and monitoring workflows via MonitorIQ and related tools

In short, it’s built around broadcast IT and legal expectations, not casual usage.

How MetadataIQ Delivers AI-Powered Transcription that Actually Fits Broadcast Workflows

1. Automates the Whole Chain: From Avid to Metadata and Back

MetadataIQ:

- Automatically extracts media from Avid Media Composer / MediaCentral using APIs.

- Generates speech-to-text transcripts and video intelligence metadata using AI/ML.

- Ingests that metadata back into Avid as customizable markers (color, duration, type) and/or sidecar files.

No manual file exports. No spreadsheet of timecodes. Just metadata appearing in the tools your editors already use.
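The three steps above (extract, analyze, ingest) can be sketched as a small pipeline. This is a hedged outline only: `enrich_asset` and the `fake_*` stand-ins are hypothetical names, the stubs do no real work, and none of this reflects actual Avid CTMS or MetadataIQ calls.

```python
from typing import Callable, Dict, Iterable, List

MarkerDict = Dict[str, object]  # assumed shape: {"start": float, "kind": str, "text": str}


def enrich_asset(
    asset_id: str,
    extract: Callable[[str], bytes],
    analyze: Callable[[bytes], Iterable[MarkerDict]],
    ingest: Callable[[str, List[MarkerDict]], None],
) -> int:
    """Run extract -> analyze -> ingest for one asset; return the marker count."""
    media = extract(asset_id)            # step 1: pull media from the production system
    markers = list(analyze(media))       # step 2: speech-to-text / video intelligence
    markers.sort(key=lambda m: m["start"])
    ingest(asset_id, markers)            # step 3: write markers back against the asset
    return len(markers)


# Stand-in implementations so the sketch runs end to end (all hypothetical).
MARKER_STORE: Dict[str, List[MarkerDict]] = {}


def fake_extract(asset_id: str) -> bytes:
    return ("media-for-" + asset_id).encode()


def fake_analyze(media: bytes) -> Iterable[MarkerDict]:
    yield {"start": 4.2, "kind": "logo", "text": "Acme"}
    yield {"start": 0.0, "kind": "speech", "text": "Good evening"}


def fake_ingest(asset_id: str, markers: List[MarkerDict]) -> None:
    MARKER_STORE[asset_id] = markers
```

Passing the stages in as callables keeps the orchestration independent of any particular vendor API, so each stage can be swapped for a real integration without touching the flow.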

2. Makes “All Media. Every Scene. Instantly Searchable.”

Digital Nirvana’s positioning is clear: help customers make all media, every scene, instantly searchable using AI and metadata.

In practice, that looks like:

- Editors typing a player name, sponsor, or phrase in Avid and seeing all matching moments.

- Producers jumping straight to relevant segments in live and historical feeds.

- Archive and ad-sales teams using logo detection and OCR metadata to verify brand exposure in seconds.

Generic tools might give you a transcript you can search in a browser. MetadataIQ gives you timelines you can search in your NLE.

3. Speeds Up Pre-, Live, and Post-Production

Broadcast workloads aren’t one-size-fits-all. MetadataIQ supports:

- Pre-production: mining archives for past segments, quotes, and b-roll

- Live & near-live: generating transcripts and markers quickly so highlights and digital clips can be cut while the show is still on air

- Post-production: automating transcription, captions, and translations directly from existing Avid workflows

The outcome: faster time-to-air, faster digital publishing, and fewer late nights scrubbing through timelines.

4. Extends Beyond Transcription to Full Metadata Automation

Instead of being a “transcription tool,” MetadataIQ is part of Digital Nirvana’s broader media enrichment ecosystem, alongside MonitorIQ and MediaServicesIQ.

That means it can:

- Trigger summarization, topic segmentation, and ad-spot identification.

- Share metadata with compliance logging systems and analytics.

- Support VOD packaging, FAST channel workflows, and OTT distribution.

Generic tools focus narrowly on “getting you a transcript.” MetadataIQ focuses on making your content more discoverable, compliant, and monetizable.

Real-World Scenes: Where MetadataIQ Wins

Scenario 1: Breaking News

- Multiple feeds are coming in from the field, agencies, and partners.

- A generic tool can transcribe one file at a time, but you still have to manage uploads and downloads.

- With MetadataIQ, media is pulled directly from Avid, transcribed, and marked up. Producers search for key names or phrases inside Avid and build packages without leaving the interface.

Scenario 2: Sports & Sponsorship

- You need to prove on-screen logo exposure across multiple games.

- Generic transcription won’t help you see logos, graphics, and scorebugs.

- MetadataIQ’s video intelligence (logos, objects, OCR) adds time-coded markers for brand appearances, making it easy for ad-sales teams to quantify exposure.
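Quantifying exposure from time-coded brand markers is essentially interval arithmetic. The sketch below assumes each marker is a `(brand, start_seconds, end_seconds)` tuple, which is an invented shape for illustration, and merges overlapping detections so the same on-screen moment is not counted twice.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

BrandSpan = Tuple[str, float, float]  # (brand, start_seconds, end_seconds), assumed shape


def exposure_seconds(markers: Iterable[BrandSpan]) -> Dict[str, float]:
    """Total on-screen seconds per brand, merging overlapping spans."""
    spans_by_brand = defaultdict(list)
    for brand, start, end in markers:
        if end < start:
            raise ValueError(f"marker for {brand!r} ends before it starts")
        spans_by_brand[brand].append((start, end))

    totals: Dict[str, float] = {}
    for brand, spans in spans_by_brand.items():
        spans.sort()
        total = 0.0
        cur_start, cur_end = spans[0]
        for start, end in spans[1:]:
            if start <= cur_end:
                # Overlapping or touching detection: extend the current span.
                cur_end = max(cur_end, end)
            else:
                total += cur_end - cur_start
                cur_start, cur_end = start, end
        total += cur_end - cur_start
        totals[brand] = total
    return totals


# Invented sample detections, for demonstration only.
SAMPLE_DETECTIONS = [
    ("Acme", 10.0, 15.0),
    ("Acme", 13.0, 20.0),   # overlaps the previous Acme span
    ("Globex", 5.0, 8.0),
    ("Acme", 40.0, 42.5),
]
```

For the sample detections, the two overlapping Acme spans collapse into one 10-second span, so Acme totals 12.5 seconds and Globex 3.0 seconds.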

Scenario 3: Archive Mining for Special Programming

- You’re building a season recap or anniversary special and need historic moments fast.

- Generic tools may have transcripts on a shared drive, but they may not be indexed in your MAM.

- MetadataIQ’s markers live with the media assets, letting you search across years of content directly in Avid or your PAM/MAM.

When Are Generic Tools Still Fine?

Generic AI transcription tools can still be helpful when:

- You need a rough transcript of a meeting, internal video, or one-off interview.

- You don’t need tight timecodes or NLE integration.

- There are no regulatory, brand, or rights implications.

But if the content goes to air, hits your compliance systems, or feeds monetized platforms, the risk and friction of generic tools quickly outweigh the savings. If it touches broadcast operations, it’s a strong candidate for MetadataIQ.

Ready to See MetadataIQ vs Generic AI in Your Own Workflow?

If your current “AI-powered transcription” stack still involves:

- Exporting files

- Dragging and dropping into third-party sites

- Copying text into Avid

- Manually tagging logos and faces

…then you’re not getting the full value of AI in your production chain.

MetadataIQ gives broadcasters:

- AI-powered transcription built for Avid and PAM/MAM

- Time-coded markers with speech, logos, faces, and more

- Hybrid AI + human workflows for broadcast-grade accuracy

- Secure, scalable deployment backed by a company focused on media, metadata, and compliance

FAQs: AI-Powered Transcription with MetadataIQ

What is MetadataIQ, and how is it different from generic AI transcription tools?

MetadataIQ is Digital Nirvana’s AI-powered metadata platform built specifically for broadcasters. Instead of just giving you a text file, it:

- Pulls media directly from your production environment

- Generates speech-to-text and video intelligence (faces, logos, objects, OCR, etc.)

- Writes everything back as time-coded markers and sidecar metadata inside your Avid / PAM / MAM workflows

Generic tools stop at “here’s your transcript.” MetadataIQ makes all media, every scene, instantly searchable where your editors actually work.

Do we need to change our existing workflows to use MetadataIQ?

In most cases, no significant changes are required. MetadataIQ is designed to plug into existing Avid-based and PAM/MAM workflows via APIs and off-the-shelf integrations. You continue using the same NLE and media management tools; the difference is that your assets now come in enriched with AI-generated, time-coded metadata from MetadataIQ.

Can MetadataIQ also support captioning and subtitling?

Yes. MetadataIQ doesn’t just create transcripts; it can also route media to captioning and subtitling workflows and manage the return of caption and subtitle files to your environment. That means you can support accessibility and multilingual delivery (broadcast, OTT, VOD, social) from the same pipeline that generates your editorial metadata.

How accurate is MetadataIQ’s AI-powered transcription?

Accuracy depends on factors like audio quality, language, and domain terminology, but MetadataIQ is tuned for broadcast content: anchors, reporters, sports commentators, crowd noise, and live environments. For high-visibility or compliance-critical programming, you can combine AI output with human review and correction, using Digital Nirvana’s services or your internal teams, to reach the accuracy level you require.

Is MetadataIQ suitable for rights-sensitive or regulated content?

Yes. MetadataIQ is designed for enterprise broadcast environments with strict security and compliance requirements. It can work with low-res proxies or audio-only derivatives, respects retention policies, and uses secure transfer methods. The goal is to accelerate your workflows without increasing risk for rights-sensitive, embargoed, or regulated content.

How is MetadataIQ priced?

Pricing typically considers:

- The volume of media processed (hours/feeds)

- The mix of AI-only vs AI+human workflows

- The number of channels, users, or locations involved

Most broadcasters start with a pilot or limited rollout, so Digital Nirvana can align pricing with real-world usage and demonstrate ROI before scaling.

How can we compare MetadataIQ with our current transcription workflow?

A simple way is to run a side-by-side pilot:

- Take a representative sample of shows or feeds (news, sports, promos).

- Run them through your current workflow and through MetadataIQ.

- Compare the two on three measures: time from ingest to searchable, time-coded metadata in the NLE; editor/producer time saved per story or highlight; and quality and depth of metadata (speech, faces, logos, graphics, etc.).

Most teams notice the difference quickly once they experience search and edit directly in Avid, rather than juggling external tools.
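For the timing part of a side-by-side pilot, the comparison can be as simple as taking the median ingest-to-searchable time per workflow. The sketch below is one hedged way to do that; the function names and the workflow labels are assumptions, and the numbers in the usage note are placeholders, not measurements.

```python
from statistics import median
from typing import Dict, List


def summarize_pilot(minutes_by_workflow: Dict[str, List[float]]) -> Dict[str, float]:
    """Median ingest-to-searchable time (in minutes) for each workflow."""
    summary = {}
    for name, values in minutes_by_workflow.items():
        if not values:
            raise ValueError(f"no timings recorded for workflow {name!r}")
        summary[name] = median(values)
    return summary


def minutes_saved(summary: Dict[str, float], baseline: str, candidate: str) -> float:
    """Positive result means the candidate workflow was faster than the baseline."""
    return summary[baseline] - summary[candidate]
```

For example, with placeholder timings `{"workflow_a": [42, 55, 38], "workflow_b": [9, 12, 7]}`, `summarize_pilot` yields medians of 42 and 9 minutes, and `minutes_saved(summary, "workflow_a", "workflow_b")` is 33. The median is used here so that one unusually slow clip does not dominate the comparison.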