If you run a news, sports, or entertainment channel today, you’re not short on content; you’re short on time.

Every hour, control rooms push out live shows, breaking news cut-ins, studio discussions, and on-location reports. The challenge isn’t recording it all. The challenge is finding the right moment again, in seconds rather than hours, when you need it.

That’s where broadcast transcripts and time-coded metadata come together.

At Digital Nirvana, we build AI-powered tools that make every scene in your media library instantly searchable for busy media teams. Broadcast transcripts are the first layer of that visibility, and MetadataIQ is how we turn those transcripts into rich metadata directly inside your PAM/MAM and Avid environments.

What Is a Broadcast Transcript?

A broadcast transcript is the complete text version of your program: every word spoken on air, converted from audio to text and usually aligned with timecodes.

A modern broadcast transcript typically includes:

- Spoken dialogue from anchors, reporters, commentators, and guests

- Speaker labels so you know who said what

- Timestamps or timecodes aligned to your video or audio timeline

- Optional contextual notes (laughter, applause, crowd noise, etc.)

Think of it as the searchable “text twin” of your show.
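To make the “text twin” idea concrete, here is a minimal Python sketch of one time-coded transcript line. The field names (`speaker`, `start_tc`, `end_tc`, `text`, `note`) are illustrative assumptions rather than a MetadataIQ schema, and the hypothetical `tc_to_frames` helper assumes non-drop-frame SMPTE timecode (HH:MM:SS:FF) at an integer frame rate such as 25 fps; 29.97 fps drop-frame timecode would need extra handling.

```python
from dataclasses import dataclass
from typing import Optional


def tc_to_frames(tc: str, fps: int = 25) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


@dataclass
class TranscriptLine:
    """One time-coded line of a broadcast transcript (hypothetical schema)."""
    speaker: str                 # e.g. "ANCHOR", "REPORTER", or a named speaker
    start_tc: str                # timecode where the line starts
    end_tc: str                  # timecode where the line ends
    text: str                    # words actually spoken on air
    note: Optional[str] = None   # contextual note such as "[applause]"

    def duration_frames(self, fps: int = 25) -> int:
        """Length of the line in frames at the given frame rate."""
        return tc_to_frames(self.end_tc, fps) - tc_to_frames(self.start_tc, fps)


line = TranscriptLine("ANCHOR", "10:00:05:12", "10:00:09:00",
                      "Good evening, here are tonight's headlines.")
print(tc_to_frames(line.start_tc))  # 900137
print(line.duration_frames())       # 88
```

Mapping timecode to an absolute frame count is what lets a search hit land on an exact position in the timeline.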

How It Differs From Scripts, Captions, and Show Notes

- Script vs. transcript

  - Script: Written before air; the planned version.

  - Transcript: Generated after air; what actually happened, including ad-libs, corrections, and guests going off-script.

- Captions vs. transcripts

  - Captions: On-screen text for accessibility and regulatory compliance.

  - Transcripts: Back-end asset used for search, verification, editing, and analytics.

- Show notes vs. transcripts

  - Show notes: Summary or rundown.

  - Transcripts: Complete, time-coded record.

In large newsrooms and post facilities, the time-coded transcript serves as the index for the entire video library.

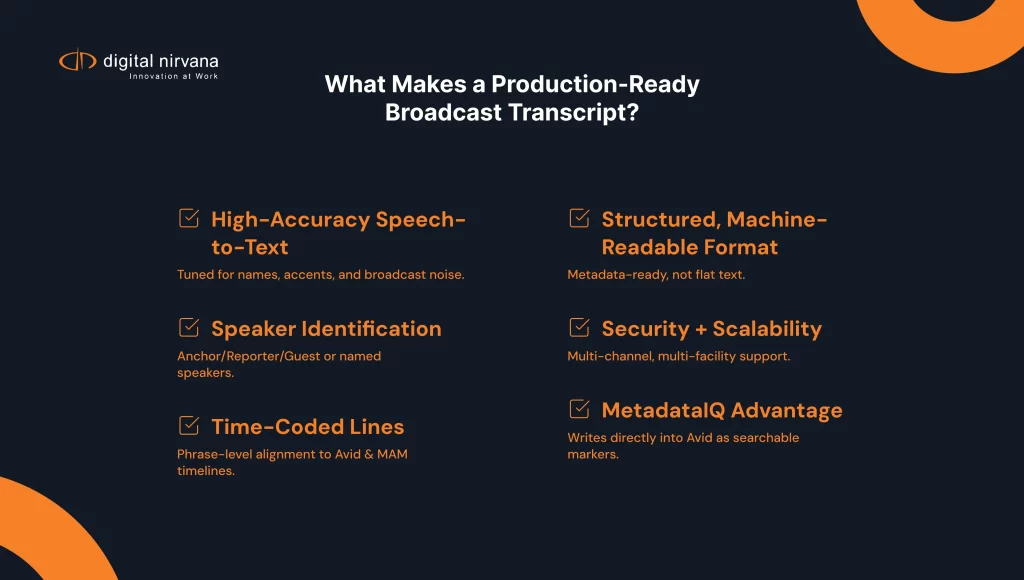

What Makes a Broadcast Transcript “Production-Ready”?

From Digital Nirvana’s point of view, a transcript is only valuable if it can move with your media and plug into your real workflows. That means it needs:

- High-Accuracy Speech-to-Text: AI-driven transcription tuned for broadcast speech, noisy environments, and domain-specific terms (teams, personalities, sponsors).

- Speaker Identification: Labels like ANCHOR, REPORTER, GUEST, or named speakers, so producers can quickly lift accurate quotes.

- Time-Coded Lines: Line-by-line or phrase-level timecodes so your transcript can drive search and jump-to-frame behavior inside Avid Media Composer, MediaCentral, or other MAM/PAM tools.

- Structured, Machine-Readable Format: Clean, consistent formatting that your PAM/MAM and automation tools can ingest as markers and metadata, not just a flat text file.

- Security and Scalability: A workflow that protects original content, supports cloud processing, and scales with big archives and multi-channel operations.

This is precisely the gap MetadataIQ was built to fill: turning speech-to-text and video intelligence into time-coded, ingest-ready metadata that editors can actually use.
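As a rough illustration of the difference between a flat text file and ingest-ready metadata, the sketch below turns time-coded lines into a JSON list of marker records. The record fields (`timecode`, `name`, `comment`, `color`) and the `lines_to_markers` function are a hypothetical, generic layout; actual Avid and PAM/MAM marker formats differ, and MetadataIQ writes markers into those systems directly, so this only shows the idea.

```python
import json
from typing import Dict, List


def lines_to_markers(lines: List[Dict[str, str]], color: str = "green") -> str:
    """Convert time-coded transcript lines into a JSON array of marker records.

    The record layout is a hypothetical, generic schema for illustration only.
    """
    markers = [
        {
            "timecode": line["start_tc"],  # where the editor should land
            "name": line["speaker"],       # speaker label shown on the marker
            "comment": line["text"],       # searchable transcript text
            "color": color,                # one colour for all transcript markers
        }
        for line in lines
    ]
    # Zero-padded HH:MM:SS:FF strings of the same format sort correctly as plain text.
    markers.sort(key=lambda m: m["timecode"])
    return json.dumps(markers, indent=2)


transcript = [
    {"speaker": "REPORTER", "start_tc": "10:02:10:05", "text": "Crews are still on the scene."},
    {"speaker": "ANCHOR", "start_tc": "10:00:05:12", "text": "Good evening."},
]
print(lines_to_markers(transcript))
```

Because every record carries the same keys, downstream tools can index the text and jump to the timecode without parsing free-form prose.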

Where Broadcast Transcripts Fit in the Media Workflow

1. Live and Near-Live News & Sports

During live or same-day turnarounds, teams don’t have time to scrub through full shows.

With time-coded transcripts flowing into systems like Avid via MetadataIQ:

- Producers search for a keyword (“injury,” “breaking,” “overtime”) and jump straight to relevant clips.

- Editors use transcript-driven markers to build highlight reels and packages faster.

- Social and digital teams grab the exact quote, clip it, caption it, and push it to OTT and social platforms.
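The search-and-jump step can be sketched in a few lines of Python: scan a show’s time-coded lines for a term and return the timecodes an editor would jump to. This assumes hypothetical `(timecode, speaker, text)` tuples and a simple case-insensitive whole-word match; production search typically runs against an index and also handles spelling variants and name matching.

```python
import re
from typing import List, Tuple

# Hypothetical transcript lines for one show: (timecode, speaker, text).
Line = Tuple[str, str, str]


def find_keyword(lines: List[Line], keyword: str) -> List[Tuple[str, str]]:
    """Return (timecode, text) for every line containing `keyword` as a whole word."""
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    return [(tc, text) for tc, _speaker, text in lines if pattern.search(text)]


show = [
    ("19:04:12:03", "COMMENTATOR", "That looks like an injury to the keeper."),
    ("19:31:40:10", "ANCHOR", "Breaking news from the stadium."),
    ("19:45:02:00", "COMMENTATOR", "We are heading to overtime!"),
    ("19:46:15:20", "REPORTER", "The injury report is now in."),
]

for tc, text in find_keyword(show, "injury"):
    print(tc, text)
```

Because the match is on whole words, a search for “over” does not return the “overtime” line; a looser substring match would trade that precision for more false positives.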

2. Post-Production and Long-Form

For documentaries, reality TV, and factual series, you often have hundreds of hours of raw footage:

- Transcripts help story producers find “needle in a haystack” soundbites.

- Editors can search by name, topic, or phrase and jump into the correct bin or timeline segment.

- Transcripts accelerate scripting, compliance edits, and alternative cuts for different platforms or regions.

3. Archive, Research, and Reuse

Once the show is off-air, transcripts become the key to getting value from your archive:

- Archive teams tag and index content by people, topics, and brands.

- Research teams answer questions like “Show me every on-air mention of Sponsor X this season.”

- Marketing and promo teams build themed compilations, anniversaries, and recap packages.
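A research query such as “every on-air mention of Sponsor X this season” reduces to counting a phrase across a season’s transcripts. The sketch below assumes a hypothetical in-memory archive keyed by episode ID and a hypothetical `mentions_per_episode` helper; it uses a plain case-insensitive substring count, so it would also match longer names that begin with the same phrase.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical archive: episode ID -> transcript lines (plain text).
archive: Dict[str, List[str]] = {
    "S01E01": ["Brought to you by Sponsor X.", "Kickoff is moments away."],
    "S01E02": ["Sponsor X presents the halftime show.", "Back after this from Sponsor X."],
    "S01E03": ["No sponsor read in this segment."],
}


def mentions_per_episode(archive: Dict[str, List[str]], phrase: str) -> Counter:
    """Count case-insensitive occurrences of `phrase` in each episode (zero if absent)."""
    counts: Counter = Counter()
    needle = phrase.lower()
    for episode, lines in archive.items():
        for text in lines:
            counts[episode] += text.lower().count(needle)
    return counts


print(dict(mentions_per_episode(archive, "Sponsor X")))
```

Keeping zero counts in the result makes it easy to show not only where a sponsor appeared but also which episodes missed a contracted mention.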

4. Compliance and Audit

Digital Nirvana has deep roots in compliance logging and monitoring. When you pair broadcast transcripts with tools like MonitorIQ, you get:

- Verifiable records of what aired, when, and on which channel

- Faster responses to complaints or regulatory requests

- Easier tracking of political coverage, ad placements, and language guidelines
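A minimal sketch of the “what aired, when, and on which channel” question: filter an as-aired transcript log by channel, time window, and phrase. The `AiredLine` record, the `aired_between` helper, the channel names, and the sample lines are hypothetical and are not MonitorIQ’s data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class AiredLine:
    channel: str
    aired_at: datetime  # wall-clock time the line went to air
    text: str


def aired_between(log: List[AiredLine], channel: str, start: datetime,
                  end: datetime, phrase: str = "") -> List[AiredLine]:
    """Lines aired on `channel` in [start, end) whose text contains `phrase` (case-insensitive)."""
    needle = phrase.lower()
    return [
        line for line in log
        if line.channel == channel
        and start <= line.aired_at < end
        and needle in line.text.lower()
    ]


log = [
    AiredLine("CH-2", datetime(2024, 5, 1, 18, 0, 12), "Polls close at eight tonight."),
    AiredLine("CH-2", datetime(2024, 5, 1, 21, 5, 0), "Candidate A responds to the debate."),
    AiredLine("CH-7", datetime(2024, 5, 1, 18, 3, 40), "Polls close at eight tonight."),
]
hits = aired_between(log, "CH-2", datetime(2024, 5, 1, 17), datetime(2024, 5, 1, 20), "polls")
for hit in hits:
    print(hit.channel, hit.aired_at.isoformat(), hit.text)
```

Leaving `phrase` empty returns everything aired on that channel in the window, which is the usual starting point when answering a complaint about a specific broadcast.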

Manual vs. Automated Broadcast Transcription

Most media organizations today use a hybrid model.

Manual Transcription

- Pros: Human nuance, better handling of complex names and accents

- Cons: Slow and expensive, not scalable for 24/7 feeds

AI-Driven Transcription

- Pros: Fast, scalable, ideal for large volumes and backlogs

- Cons: Needs tuning and, for critical shows, human review

Digital Nirvana’s approach with MetadataIQ and our captioning/transcription services is to let AI handle the heavy lifting, then give your teams or our experts the tools to review and refine wherever accuracy is non-negotiable: flagship news, marquee sports, and premium originals.
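The hybrid model can be expressed as a simple routing rule: everything from premium programs goes to a human reviewer, and so does any machine-transcribed segment whose confidence falls below a threshold. The `Segment` type, its `confidence` field, the 0.85 threshold, and the program names are assumptions for illustration; many speech-to-text engines expose some confidence score, but its scale and meaning vary by engine.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Segment:
    program: str
    start_tc: str
    text: str
    confidence: float  # hypothetical ASR confidence score in [0, 1]


def needs_human_review(seg: Segment, premium_programs: Set[str], threshold: float = 0.85) -> bool:
    """Review everything from premium programs, plus any low-confidence segment elsewhere."""
    return seg.program in premium_programs or seg.confidence < threshold


def review_queue(segments: List[Segment], premium_programs: Set[str],
                 threshold: float = 0.85) -> List[Segment]:
    """Keep only the segments a human editor should check before publication."""
    return [s for s in segments if needs_human_review(s, premium_programs, threshold)]


segments = [
    Segment("Overnight Feed", "02:10:00:00", "Traffic is light on the ring road.", 0.97),
    Segment("Overnight Feed", "02:14:30:12", "Interview with Mr. Szczepanski.", 0.62),
    Segment("Evening News", "18:00:05:00", "Good evening.", 0.99),
]
for seg in review_queue(segments, premium_programs={"Evening News"}):
    print(seg.program, seg.start_tc)
```

The high-confidence overnight segment goes straight through, while the hard-to-transcribe name and the flagship program are queued for review, which is where human time pays off most.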

How MetadataIQ Turns Broadcast Transcripts into Time-Coded Metadata

Broadcast transcripts are powerful. But on their own, they’re just text files.

MetadataIQ is Digital Nirvana’s AI-driven platform that:

- Automatically generates speech-to-text transcripts for live, pre-production, and post-production content

- Enriches those transcripts with video intelligence (faces, logos, objects, on-screen text, shot changes)

- Writes time-coded markers directly into Avid and other PAM/MAM systems, including MediaCentral and CTMS API-based workflows

- Makes media instantly searchable by person, topic, brand, and more, right inside the editor’s timeline
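To show why combining speech-to-text with video intelligence helps search, the sketch below merges markers from both sources into one time-ordered list and searches across all of them. The `Marker` type, the `merge_markers` and `search` helpers, the frame positions, and the detections (“Acme Sports”, “Jane Doe”) are hypothetical sample data, not MetadataIQ output.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Marker:
    frame: int   # absolute frame position on the timeline
    source: str  # which analysis produced it: "speech", "face", "logo", ...
    label: str   # transcript text or detected entity name


def merge_markers(speech: List[Marker], vision: List[Marker]) -> List[Marker]:
    """Combine speech-to-text and video-analysis markers into one time-ordered list."""
    return sorted(speech + vision, key=lambda m: (m.frame, m.source))


def search(markers: List[Marker], term: str) -> List[Marker]:
    """Case-insensitive substring search across every marker label, whatever its source."""
    needle = term.lower()
    return [m for m in markers if needle in m.label.lower()]


speech = [
    Marker(250, "speech", "Thanks to our partner Acme Sports"),
    Marker(900, "speech", "Back to the studio"),
]
vision = [
    Marker(240, "logo", "Acme Sports"),
    Marker(600, "face", "Jane Doe"),
]
timeline = merge_markers(speech, vision)
for m in search(timeline, "acme"):
    print(m.frame, m.source, m.label)
```

A single search for a brand then returns both the frame where its logo appears and the frame where it is mentioned.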

What That Looks Like for Your Team

Inside Avid Media Composer or MediaCentral Cloud UX (MCCUX), an editor can:

- Type a word, phrase, player name, or sponsor.

- See markers across all relevant clips and shows.

- Jump straight to the precise frame where it was said or shown.

- Cut the sequence without ever leaving their familiar interface.

Behind the scenes, MetadataIQ is handling:

- Secure transfer of media or proxies

- AI transcription and video analysis in the cloud

- Automatic marker creation and ingestion

- Optional routing to TranceIQ and other Digital Nirvana services for captions, subtitles, and translations

Why Broadcasters Choose Digital Nirvana for Broadcast Transcripts

1. Built on Broadcast and Compliance DNA

Digital Nirvana has spent over two decades serving broadcasters with monitoring, compliance, and media intelligence solutions. That history shows up in how we design MetadataIQ:

- Frame-accurate markers

- Clean, consistent logs

- Workflows that respect your caption, regulatory, and QC chains

2. Deep Integration with Avid and Modern PAM/MAM

MetadataIQ is built to live where editors work, with:

- Direct API-based integration with Avid Media Composer and MediaCentral UX

- Automatic ingest of speech-to-text and video intelligence as customizable markers

- Support for workflows that no longer rely on legacy Avid Interplay

3. AI + Human Expertise

We combine AI automation with human-curated services:

- Machine-generated transcripts and metadata for scale and speed

- Human review and captioning for premium content, regulated environments, and high-visibility events

4. End-to-End Media Enrichment

MetadataIQ is part of a broader ecosystem that includes:

- MonitorIQ for compliance logging

- MediaServicesIQ for summarization, ad tracking, and licensing

- TranceIQ for transcripts, captions, and translations

Together, they help you automate workflows, streamline operations, comply with regulations, and drive insights with AI across the content lifecycle.

Bringing It All Together

Broadcast transcripts aren’t just a back-office artifact anymore. For leading media companies, they are:

- The index to every second of content you create

- The engine behind fast editing, accurate compliance, and more imaginative archive reuse

- The bridge between raw media and AI-driven insights

With MetadataIQ, Digital Nirvana turns those transcripts into actionable, time-coded metadata that lives right where your teams work in Avid and your PAM/MAM systems.

If your producers and editors are still scrubbing timelines to find the correct quote, it’s time to change that.

Ready to See It in Your Own Workflow?

Share a live use case from your newsroom, sports operation, or post facility, and we’ll walk you through how MetadataIQ can:

- Cut search time and clip turnaround

- Strengthen compliance and audit readiness

- Unlock new value from your archives

Book a demo with Digital Nirvana and bring your toughest clip or segment. We’ll run it through MetadataIQ and show you what broadcast transcripts can really do when they’re connected to your entire workflow.

FAQs: Broadcast Transcripts & MetadataIQ

What is a broadcast transcript?

A broadcast transcript is a text version of everything spoken during a TV, radio, or streaming program. It usually includes the whole dialogue, speaker labels, and timecodes that align the text with the exact point in the video or audio.

How is a broadcast transcript different from captions?

Captions are displayed on-screen for viewers, usually in short, timed chunks. Broadcast transcripts are typically used behind the scenes. They’re more detailed, often line-by-line and time-coded, and optimized for search, editing, compliance, and archive workflows.

Why are broadcast transcripts important?

Because they make content searchable and reusable. With transcripts, teams can find quotes and segments in seconds instead of scrubbing through hours of footage. They also support compliance, fact-checking, accessibility, localization, and monetization of archive content.

What makes a broadcast transcript production-ready?

A production-ready transcript should offer high speech-to-text accuracy, clear speaker identification, reliable timecodes, consistent formatting, and a structure that can be ingested into PAM/MAM systems and NLEs as metadata or markers.

How does MetadataIQ work with broadcast transcripts?

MetadataIQ automatically generates or ingests time-coded transcripts, enriches them with AI-driven video intelligence (faces, logos, objects, on-screen text), and writes them back into Avid and other PAM/MAM systems as searchable markers. Editors can then search for words, phrases, or entities and jump directly to the exact frame.

Do we need to replace our existing editing or MAM tools to use MetadataIQ?

In most cases, no. MetadataIQ is designed to plug into existing Avid and MAM environments, feeding time-coded transcripts and metadata into the tools your teams already use rather than forcing a new front-end or editorial interface.

Can MetadataIQ handle both live and archived content?

Yes. MetadataIQ can process live, near-live, and archived content. For live and near-live content, it accelerates highlight creation and same-day edits. For archives, it transforms legacy media libraries into searchable, monetizable assets.

How accurate are AI-generated broadcast transcripts?

Accuracy depends on audio quality, speakers, and subject matter. Our AI engines are tuned for broadcast environments, and Digital Nirvana offers human review and captioning services on top of machine-generated transcripts.

Will automation replace our compliance, editorial, or captioning teams?

No. Automation is meant to augment your teams, not replace them. By letting AI handle repetitive transcription and metadata tasks, your compliance, editorial, and captioning teams can focus on higher-value review, refinement, and decision-making.

How do broadcast transcripts support compliance?

Transcripts create a verifiable record of what aired, when, and on which channel. Paired with solutions like MonitorIQ, they make it easier to respond to regulatory queries, manage political and advertising rules, and maintain detailed audit trails.

Can transcripts help us get more value from our archives?

Absolutely. Once your archives are transcript- and metadata-rich, you can quickly surface clips for sponsors, build themed collections, repurpose older footage into new packages, and license content more efficiently.

How do we get started?

Most customers start with a focused use case, such as a single channel, show, or event, and run a pilot to measure time saved in search, editing, or compliance workflows. From there, the deployment can be scaled across channels, departments, and archives.